✅ Week 11 - Lab Solutions

Predicting true statements using the LIAR data set

This solution file follows the format of a Jupyter Notebook file .ipynb you had to fill in during the lab session.

👉 NOTE: We wanted to flag that this solution is far from optimal. Rather, it is more to serve as a demonstration of what kinds of analyses can be performed using this data set using the tools studied in DS202 (supervised learning part) or that extend them slightly (unsupervised part).

⚙️ Setup

Downloading the student solutions

Click on the below button to download the student notebook.

Loading libraries

import numpy as np

import pandas as pd

import spacy

from scipy.stats import mode

from sklearn.preprocessing import OneHotEncoder

from gensim.models.ldamodel import LdaModel

from gensim.models.coherencemodel import CoherenceModel

from sklearn.decomposition import LatentDirichletAllocation

import matplotlib.pyplot as plt

from gensim.models import Phrases

from gensim.models.phrases import Phraser

from gensim.corpora import Dictionary

from collections import Counter

from itertools import chain

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from sklearn.metrics import confusion_matrix, f1_score, recall_score, precision_score

from sklearn.linear_model import LogisticRegression

from xgboost import XGBClassifier

from sklearn.decomposition import TruncatedSVD

import umap

from sklearn.cluster import SpectralClustering,AgglomerativeClustering

from sklearn.ensemble import IsolationForest

from sklearn.neighbors import LocalOutlierFactor

from sklearn.svm import OneClassSVM

from kmedoids import KMedoids

import plotly.express as px

from gower import gower_matrix

from tqdm import tqdm

import pyLDAvis

import networkx as nx

from scipy.sparse import csr_matrix

from skfuzzy.cluster import cmeans

from lets_plot import *

LetsPlot.setup_html()

import warnings

warnings.filterwarnings('ignore')

# Load spaCy stopwords

nlp = spacy.load("en_core_web_sm")

stopwords = nlp.Defaults.stop_words

%matplotlib inline

import os

from bertopic import BERTopic

from sentence_transformers import SentenceTransformerLoad the data set

We will start by loading liar and inspecting the distribution of the target. We find that approximately 42% of samples contain true statements, as defined in the Week 11 Lab Roadmap.

# Read the .csv

liar = pd.read_csv("../data/liar-dataset-cleaned-v2.csv")

# How is our target distributed?

liar.value_counts("true_statement").to_frame()

true_statement count

0 False 3304

1 True 2436Feature Selection: Statements

We’ll start by extracting features from the statements. The code for doing this is largely similar to the code provided in Week 10, but with two differences:

- We are creating n-grams, specifically unigrams and bigrams as features.

- We have specified a miminum document frequency of 15 to cut down on the number of features.

# Preprocess function: tokenize, remove punctuation, numbers, and stopwords

def preprocess_text(text):

doc = nlp(text.lower()) # Lowercase text

tokens = [

token.lemma_ for token in doc

if not token.is_punct and not token.is_digit and not token.is_space

and token.text.lower() not in stopwords

]

return " ".join(tokens) # Return cleaned text as a string

# Apply preprocessing to each review

liar["statement_cleaned"] = liar["statement"].apply(preprocess_text)

# Create document-feature matrix (DFM) using CountVectorizer

vectorizer = CountVectorizer(min_df=15, ngram_range=(1,2))

dfm = vectorizer.fit_transform(liar["statement_cleaned"])

# Convert to a DataFrame for inspection

dfm = pd.DataFrame(dfm.toarray(), columns=vectorizer.get_feature_names_out())Feature Selection: Contexts

Statements are made in many contexts, which might influence the statement maker’s propensity to tell the truth. We will build code that rationalises context by lumping all contexts that appear in 10 or less statements into an “other” category. To work with scikit-learn, we can transform this column into a one-hot encoded series of dummy variables.

# Create a count for each context

counts = liar.value_counts("context")

# Set the threshold

threshold = 10

# Create a variable that transforms the context column

liar["context_lumped"] = liar["context"].apply(lambda x: x if pd.notna(x) and counts.get(x, 0) >= threshold else "other")

# Convert the column to dummies

contexts = pd.get_dummies(liar["context_lumped"], columns=["context_lumped"], prefix="context")Feature Selection: Subjects

As some subjects are more contentious than others, this might also influence a public figure’s propensity to tell the truth. subjects has already been transformed into dummies, so all we need to do is isolate them.

subjects = liar[liar.columns[liar.columns.str.contains("subj_")]]Constructing the training and testing set

# Concatenate the features into one set of features

X = pd.concat([dfm,subjects,contexts], axis=1)

# Isolate the target

y = liar["true_statement"]

# Perform the train test split

X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=123)Supervised Learning: Penalised logistic regression

Let’s try out some different combinations of hyperparameters. We will build a dictionary that has different kinds of penalties (l1 = Lasso, l2 = Ridge), along with different levels of penalty.

# Create a dictionary of hyperparameter choices

pl_params = {"penalty":["l1","l2"], "C": [0.5,0.1,0.01,0.001]}

# Instantiate a lasso classification model

logit_classifier = LogisticRegression(solver="liblinear",max_iter=1000,random_state=123)

# Instantiate a Grid Search

pl_grid = GridSearchCV(logit_classifier, param_grid=pl_params, scoring="f1", cv=10)

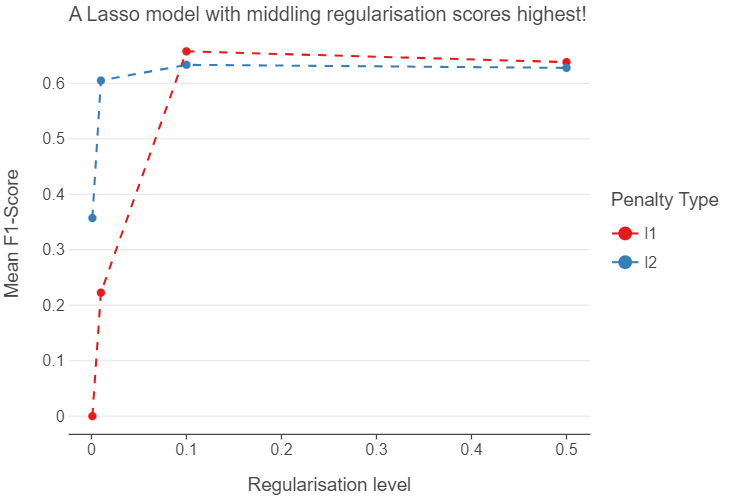

_ = pl_grid.fit(X_train, y_train)Below, we can visualise which hyperparameter combination words best. We can see that at high levels of regularisation, Ridge tends to outperform Lasso considerably. However, when regularisation is relaxed, Lasso starts to slightly outperform Ridge.

(

ggplot(pd.DataFrame(pl_grid.cv_results_), aes("param_C", "mean_test_score", color = "param_penalty")) +

geom_point() +

geom_line(linetype = "dashed") +

theme(panel_grid_major_x=element_blank()) +

labs(x = "Regularisation level", y = "Mean F1-Score", color = "Penalty Type",

title = "A Lasso model with middling regularisation scores highest!")

)

We can now apply our “optimal” hyperparameter combinations to the whole of the training set.

# Instantiate a lasso classification model

lasso_classifier = pl_grid.best_estimator_

# Fit the model to the training data

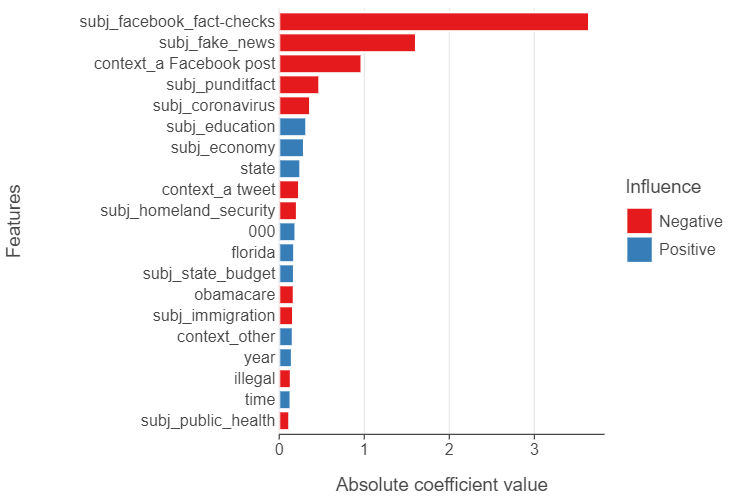

_ = lasso_classifier.fit(X_train, y_train)To peak “underneath the hood” of the Lasso model, we can explore which factors tend to predict truthful / untruthful statements. Unsurprisingly, subjects dealing with Facebook Fact-Checks and Fake News are the most indicative of untruthful statements. We see that topics such as education and the economy, however, are most indicative of truthful statements.

# Create a data frame of the top 20 features

top_20_feats = (

pd.DataFrame({"features": lasso_classifier.feature_names_in_,

"coefs": lasso_classifier.coef_.reshape(-1)})

.assign(abs_coefs = lambda x: np.abs(x["coefs"]),

sign = lambda x: np.where(x["coefs"] > 0, "Positive", "Negative"))

.sort_values("abs_coefs")

.tail(20)

)

# Plot the output

(

ggplot(top_20_feats, aes("abs_coefs", "features", fill = "sign")) +

geom_bar(stat = "identity", tooltips=layer_tooltips().line("Abs. coef. value: @abs_coefs").line("@sign")) +

theme(panel_grid_major_y=element_blank()) +

labs(x = "Absolute coefficient value", y = "Features", fill = "Influence")

)

We can now apply our insights to the test set, to see how well our “optimal” model performs. We have an F1-score of 0.66, which is better than flipping a coin. Let’s see if we can get better performance using an XGBoost.

# Apply class predictions to the test set

predictions = lasso_classifier.predict(X_test)

# f1-score

np.round(f1_score(y_test, predictions), 2)

0.66Supervised Learning: XGBoost

With XGBoost, we will vary the proportion of features sampled when building each tree in the model and the learning rate. To speed things up, we are going to take advantage of parallel processing by setting n_jobs = -1 which lets the algorithm run across

# Create a dictionary of hyperparameter values to try

xgb_params = {"n_estimators": [1000], "colsample_bytree":[0.3,0.6,0.9], "learning_rate": [0.001,0.01,0.1]}

# Instantiate an XGBoost classifier, utilising all cores in your laptop

xgb_classifier = XGBClassifier(n_jobs = -1)

# Create a 10-fold cross-validation algorithm

xgb_grid = GridSearchCV(xgb_classifier, param_grid=xgb_params, scoring="f1", cv=10)

# Fit the algorithm to the training data

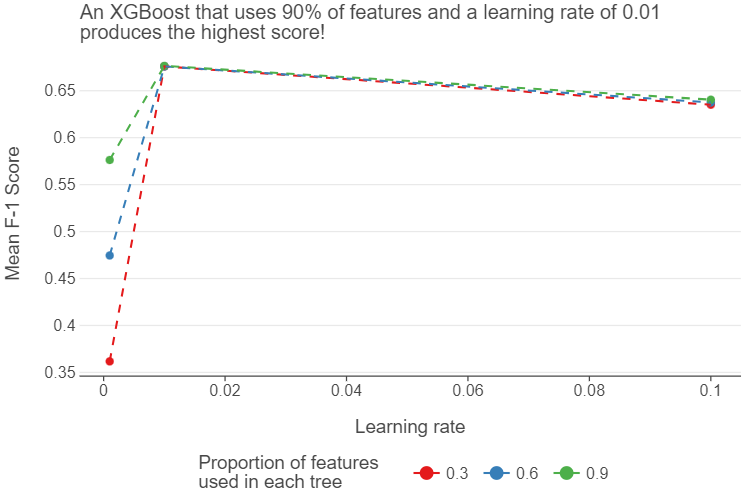

_ = xgb_grid.fit(X_train, y_train)We see that an XGBoost that uses 90% of features to build each decision tree and a learning rate of 0.01 produces the best results out of all the hyperparameter combinations we have tried.

xgb_grid.cv_results_["param_colsample_bytree"] = xgb_grid.cv_results_["param_colsample_bytree"].astype(str)

(

ggplot(pd.DataFrame(xgb_grid.cv_results_), aes("param_learning_rate", "mean_test_score", color = "param_colsample_bytree")) +

geom_point(tooltips=layer_tooltips().line("@mean_test_score")) +

geom_line(linetype = "dashed") +

theme(legend_position="bottom",

panel_grid_major_x= element_blank()) +

labs(x = "Learning rate", y = "Mean F-1 Score", color = "Proportion of features\nused in each tree",

title = "An XGBoost that uses 90% of features and a learning rate of 0.01\nproduces the highest score!")

)

After having evaluated the data on the test set, we find that our F1-score improves by ~ 2 percentage points - noticeable, but there is still obviously room for improvement.

# Pick the best XGBoost

xgb_classifier = xgb_grid.best_estimator_

# Fit the best model to the training data

_ = xgb_classifier.fit(X_train, y_train)

# Apply class predictions to the test set

predictions = xgb_classifier.predict(X_test)

# f1-score

np.round(f1_score(y_test, predictions), 2)

0.68Unsupervised learning approaches

In this part, we’ll extract insights from the LIAR datasets using a couple of different unsupervised techniques:

- clustering

- anomaly detection

- topic modeling

But, before we do that, we’ll pre-process the data again as the pre-processing here is a bit different from the pre-processing used in the supervised learning part (note that you could also have used the pre-processing performed here in the supervised learning part). The main reason for this pre-processing is to enhance the results of topic modeling (LDA performed on data pre-processed as in the supervised learning part doesn’t yield particularly meaningful results for this dataset!).

Pre-processing

texts = liar["statement"].astype(str).tolist()

def spacy_preprocess(texts):

processed = []

for doc in nlp.pipe(texts, batch_size=500):

tokens = []

for token in doc:

if (

token.is_punct or

token.like_num or

token.is_space or

not token.is_alpha

):

continue

# Keep all named entities intact (e.g., "Affordable Care Act")

if token.ent_type_ in {"PERSON", "ORG", "GPE", "LAW", "NORP", "EVENT"}:

tokens.append(token.text.lower())

continue

# Keep only relevant parts of speech: NOUN, VERB, ADJ, ADV

if token.pos_ in {"NOUN", "VERB", "ADJ", "ADV"}:

lemma = token.lemma_.lower()

tokens.append(lemma)

processed.append(tokens)

return processed

# Run preprocessor

tokenized_texts = spacy_preprocess(texts)

# Train bigram and trigram models

bigram_model = Phrases(tokenized_texts, min_count=3, threshold=5)

trigram_model = Phrases(bigram_model[tokenized_texts], threshold=5)

bigram_phraser = Phraser(bigram_model)

trigram_phraser = Phraser(trigram_model)

# Apply phrase detection

texts_bigrams = [bigram_phraser[doc] for doc in tokenized_texts]

texts_trigrams = [trigram_phraser[bigram_phraser[doc]] for doc in tokenized_texts]

# Final cleaned version

final_texts = [[w for w in doc if 2 < len(w) < 25] for doc in texts_trigrams]The goal of the pre-processing is to clean and structure political statements for downstream analysis — without stripping away politically meaningful expressions like “tax cut”, “Donald Trump”, “Affordable Care Act”. Here’s what’s happening:

1️⃣ Step 1: Load LIAR data

texts = liar["statement"].astype(str).tolist()The dataset is loaded and converted to a list of strings (statement column).

2️⃣ Step 2 (within the spacy_preprocess function): spaCy Preprocessing Function

def spacy_preprocess(texts):

processed = []

for doc in nlp.pipe(texts, batch_size=500):

tokens = []

for token in doc:- Batch processes texts using

spaCyfor speed. - Initializes a list of

tokensfor eachdoc(text).

3️⃣ Step 3 (within the spacy_preprocess function): Remove non-informative tokens

if (

token.is_punct or

token.like_num or

token.is_space or

not token.is_alpha

):

continueRemove (i.e skip) punctuation, digits, whitespace, and anything that isn’t a proper word (is_alpha).

4️⃣ Step 4 (within the spacy_preprocess function): Preserve Named Entities (Important!)

if token.ent_type_ in {"PERSON", "ORG", "GPE", "LAW", "NORP", "EVENT"}:

tokens.append(token.text.lower())

continue- If a token is part of a named entity (e.g., a law, person, organization, political event), we keep it as-is, lowercased.

- This ensures phrases like “Affordable Care Act” are preserved in the next step.

5️⃣ Step 5 (within the spacy_preprocess function): Filter POS tags (keep only meaningful words)

if token.pos_ in {"NOUN", "VERB", "ADJ", "ADV"}:

lemma = token.lemma_.lower()

tokens.append(lemma)- Keeps only nouns, verbs, adjectives, and adverbs.

- Lemmatizes them (e.g., “running” → “run”, “better” → “good”).

6️⃣ Step 6: Generate Bigrams and Trigrams

bigram_model = Phrases(tokenized_texts, min_count=3, threshold=5)

trigram_model = Phrases(bigram_model[tokenized_texts], threshold=5)

bigram_phraser = Phraser(bigram_model)

trigram_phraser = Phraser(trigram_model)

texts_bigrams = [bigram_phraser[doc] for doc in tokenized_texts]

texts_trigrams = [trigram_phraser[bigram_phraser[doc]] for doc in tokenized_texts]- Learns common bigrams like

["tax", "cut"] → "tax_cut"and trigrams like["affordable", "care", "act"] → "affordable_care_act". threshold=5ensures only semi-frequent phrases are merged.

7️⃣ Step 7: Final Cleanup

final_texts = [[w for w in doc if 2 < len(w) < 25] for doc in texts_trigrams]Filters out tokens that are too short (like “a”, “it”) or too long (usually garbage).

Why is this pre-processing suitable for political text such as the LIAR dataset?

- We’re only removing punctuation, numbers, spaces, and non-alphabetic characters, while retaining important content.

- In particular, we keep named entities in their original form (just lowercased) rather than lemmatizing them, which maintains their recognizable identity. And we’ve included named entity types that are highly relevant in political discourse.

- We made a sensible part-of-speech selection. By including nouns, verbs, adjectives, and adverbs, we capture the key content words that convey meaning and sentiment in political statements.

- The addition of bigram and trigram detection is particularly valuable for political text, as it will capture important phrases like “health_care_reform” or “tax_policy” rather than treating the individual words separately.

- The final step of removing very short (≤2 character) and very long (≥25 character) tokens helps eliminate potential noise while keeping meaningful content.

This approach should preserve the important semantic content needed for analyzing political statements, including entities, topics, and sentiment-bearing words, while still providing useful normalization and noise reduction.

We’ll now construct a TF-IDF DFM.

docs = [" ".join(tokens) for tokens in final_texts]

# TF-IDF: preserve important phrases by analyzing words and bigrams

vectorizer = TfidfVectorizer(

max_df=0.5,

min_df=5,

ngram_range=(1, 3), # can also try (2, 3) for stricter phrase focus

)

X_tfidf = vectorizer.fit_transform(docs)LSA for dimensionality reduction

We’ll use LSA to reduce dimensions before applying clustering or anomaly detection.

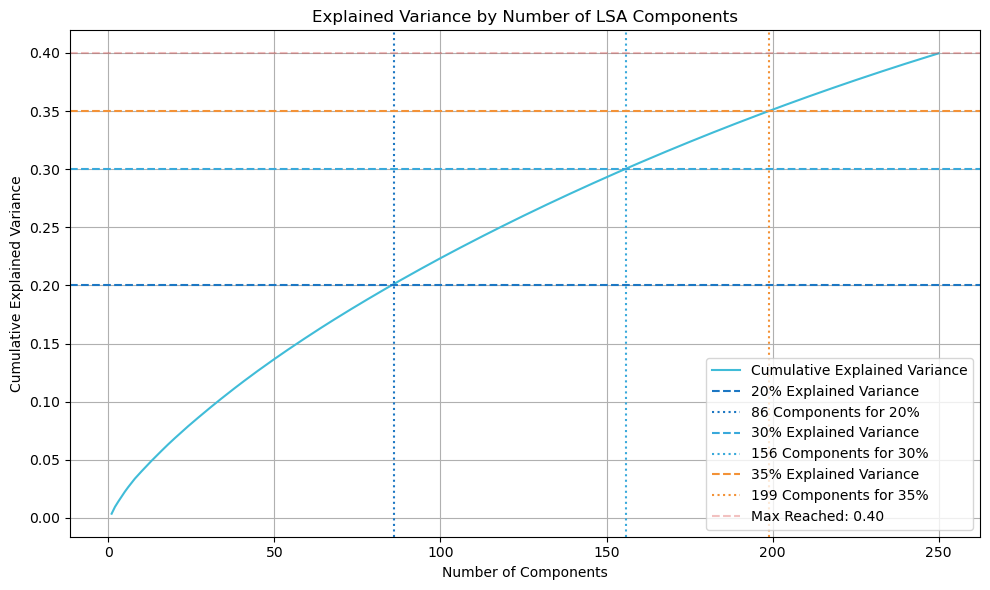

Let’s first start by choosing the number of components for LSA.

There are several methods to determine the number of components for LSA (some of which can also be used for LDA).

1. Explained Variance (LSA-specific)

| Method | Explained Variance (via TruncatedSVD) |

|---|---|

| Use Case | Latent Semantic Analysis (LSA) |

| Metric | Cumulative variance explained by components |

| How It Works | Selects the smallest number of components needed to explain a threshold (e.g., 90%) of variance in the TF-IDF matrix |

| Pros | Intuitive, quick to compute, gives a rough dimensionality estimate |

| Cons | May not reflect semantic coherence of topics, not meaningful for LDA or NMF |

| Outcome in Our Case | No clear elbow or plateau was observed. Explained variance increased gradually without a strong inflection point. (see plot below) |

Click to view the code

def plot_explained_variance(X_tfidf, max_components=100, thresholds=[0.4, 0.5, 0.6, 0.7, 0.8]):

"""

Plot cumulative explained variance from TruncatedSVD for LSA, and mark thresholds if they are reached.

Returns a dictionary mapping thresholds to number of components needed (if reached).

"""

n_components = min(max_components, X_tfidf.shape[1] - 1)

svd = TruncatedSVD(n_components=n_components, random_state=42)

svd.fit(X_tfidf)

explained_variance = svd.explained_variance_ratio_

cumulative_variance = np.cumsum(explained_variance)

max_variance = cumulative_variance[-1]

print(f"🔍 Max cumulative explained variance: {max_variance:.3f}")

plt.figure(figsize=(10, 6))

plt.plot(range(1, n_components + 1), cumulative_variance, color='#40BCD8', linestyle='-', label='Cumulative Explained Variance')

plt.grid(True)

plt.xlabel('Number of Components')

plt.ylabel('Cumulative Explained Variance')

plt.title('Explained Variance by Number of LSA Components')

threshold_components = {}

colors = ['#1C77C3', '#39A9DB', '#F39237', '#D63230', '#D63230']

for i, threshold in enumerate(thresholds):

if threshold <= max_variance:

n_required = np.argmax(cumulative_variance >= threshold) + 1

threshold_components[threshold] = n_required

plt.axhline(y=threshold, color=colors[i % len(colors)], linestyle='--',

label=f'{int(threshold * 100)}% Explained Variance')

plt.axvline(x=n_required, color=colors[i % len(colors)], linestyle=':',

label=f'{n_required} Components for {int(threshold * 100)}%')

else:

print(f"⚠️ Threshold {threshold} not reached (max = {max_variance:.2f})")

# Optional: mark max variance level

plt.axhline(y=max_variance, color='#D63230', linestyle='--', alpha=0.3,

label=f'Max Reached: {max_variance:.2f}')

plt.legend()

plt.tight_layout()

plt.show()

return threshold_components

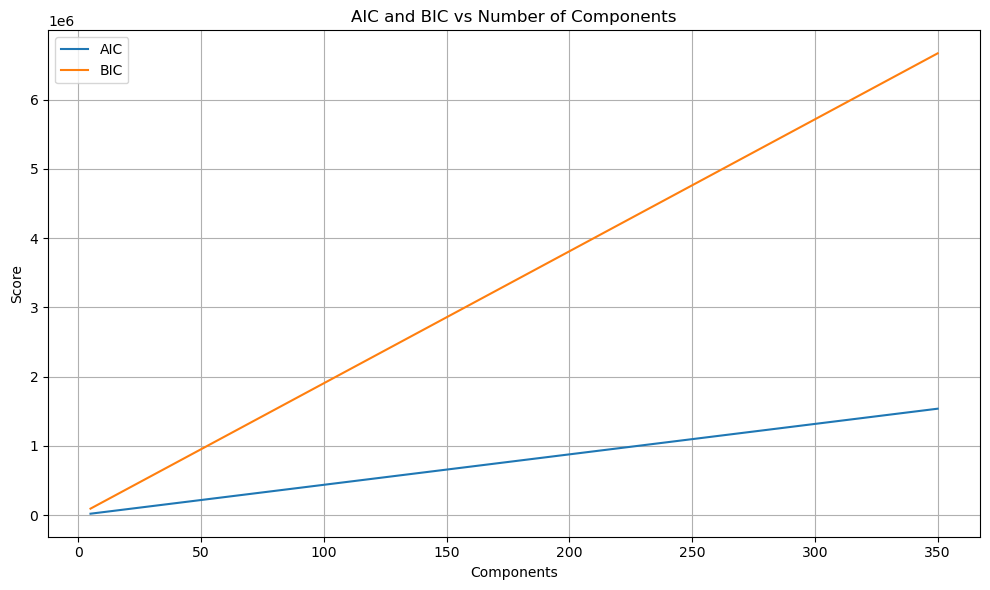

plot_explained_variance(X_tfidf, max_components=100, thresholds=[0.2,0.3,0.35]2. AIC/BIC (Information Criteria)

| Method | AIC / BIC (Adapted from PCA-style residual reconstruction error) |

|---|---|

| Use Case | LSA (non-probabilistic) - adapted approach |

| Metric | Tradeoff between model fit (reconstruction error) and complexity |

| How It Works | Penalizes models with more parameters to avoid overfitting |

| Pros | Formal criterion for model selection |

| Cons | Not designed for SVD or non-generative models, results may be noisy/inconsistent in high-dimensional sparse text |

| Outcome in Our Case | No clear minima detected in AIC/BIC curves; insufficient for topic selection here (see plot below) |

Click to view the code

def compute_information_criteria(X_tfidf, component_range):

n_samples, n_features = X_tfidf.shape

aic_scores, bic_scores = [], []

X_tfidf_dense = X_tfidf.toarray()

for n_components in component_range:

svd = TruncatedSVD(n_components=n_components, random_state=42)

X_trans = svd.fit_transform(X_tfidf)

X_approx = np.dot(X_trans, svd.components_)

rss = np.sum((X_tfidf_dense - X_approx) ** 2)

k = n_components * (n_features + 1)

aic = n_samples * np.log(rss / n_samples) + 2 * k

bic = n_samples * np.log(rss / n_samples) + k * np.log(n_samples)

aic_scores.append(aic)

bic_scores.append(bic)

plt.figure(figsize=(10, 6))

plt.plot(component_range, aic_scores, label='AIC')

plt.plot(component_range, bic_scores, label='BIC')

plt.xlabel('Components')

plt.ylabel('Score')

plt.title('AIC and BIC vs Number of Components')

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

best_aic = component_range[np.argmin(aic_scores)]

best_bic = component_range[np.argmin(bic_scores)]

return best_aic, best_bic

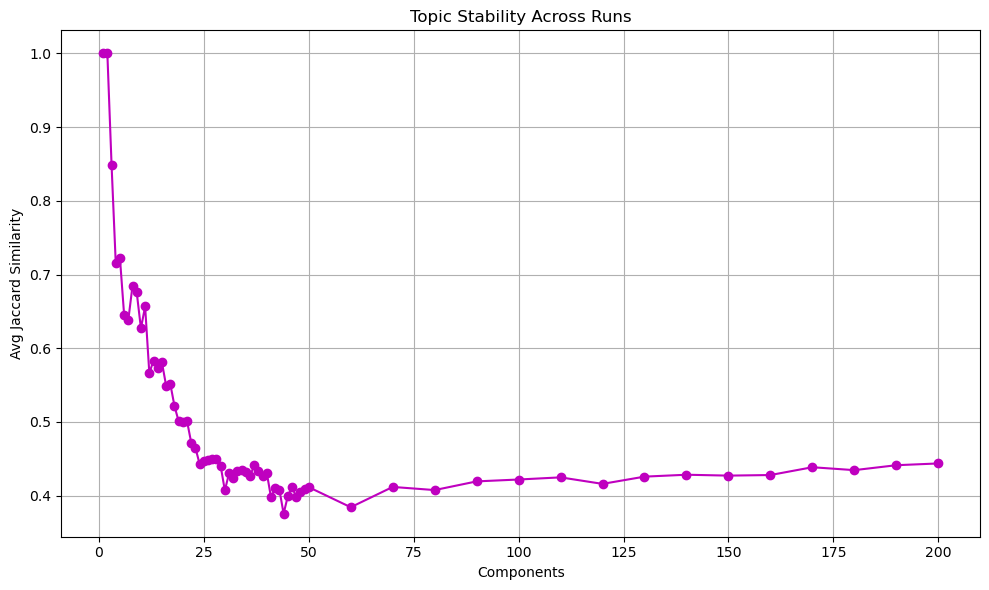

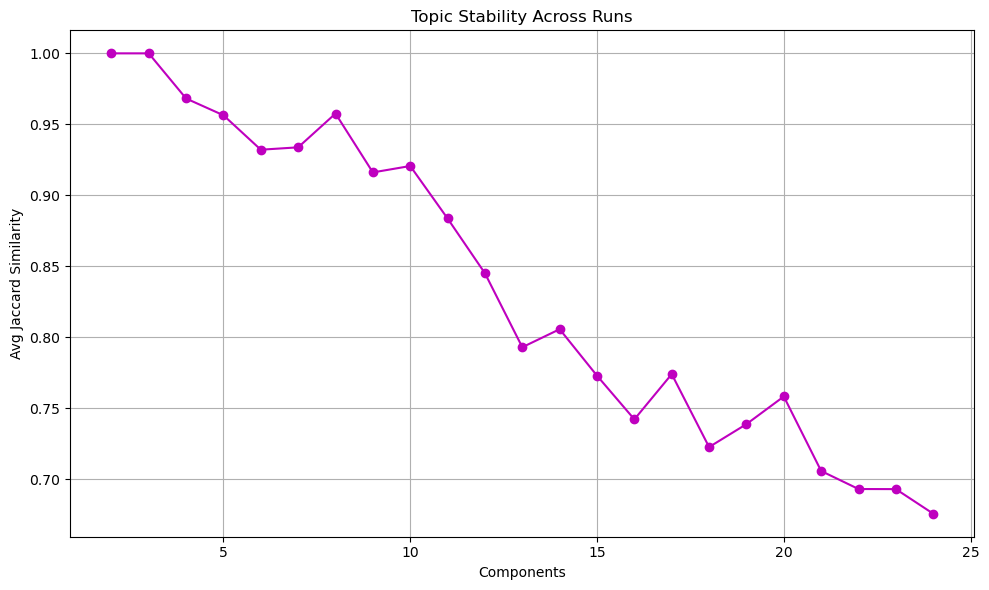

compute_information_criteria(X_tfidf,component_range)3. Topic Stability (LSA/LDA/NMF-compatible)

| Method | Topic Stability (Jaccard similarity across runs) |

|---|---|

| Use Case | Any model where randomness influences output |

| Metric | Average pairwise similarity of top terms per topic across multiple model runs |

| Pros | Robust, directly measures semantic consistency |

| Cons | Requires multiple model fits; computationally more expensive |

| Outcome in Our Case | This metric shows a sharp decline until about [50] components then stabilizes with a slight uptick after 150 components (see outcome of code chunk below) |

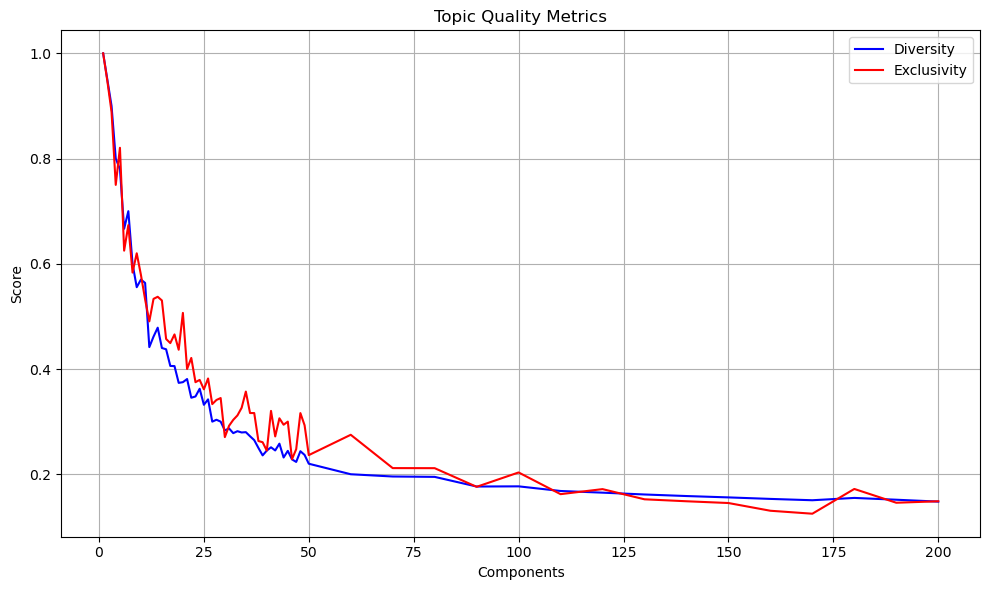

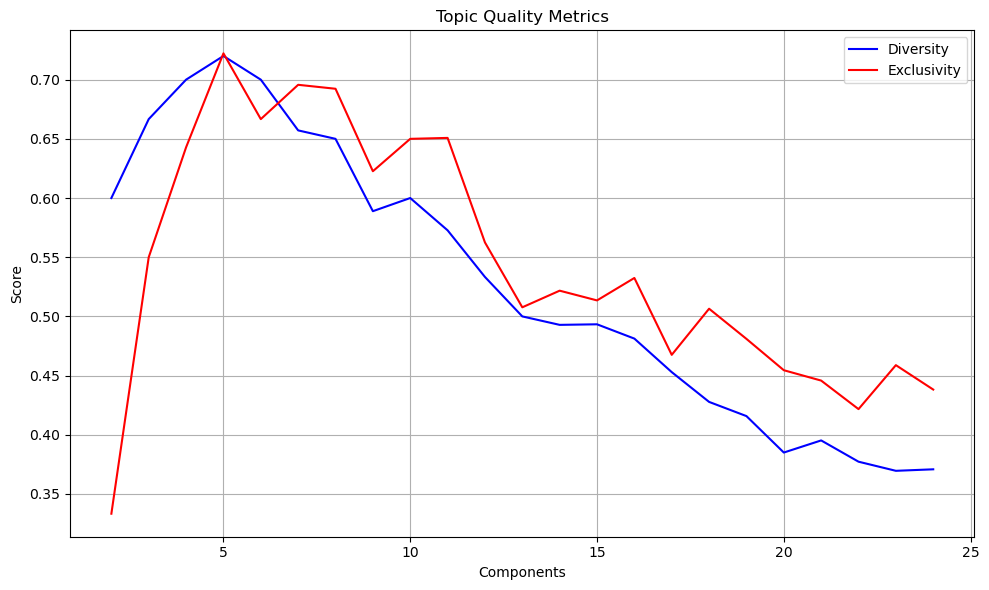

4. Topic Quality (Diversity & Exclusivity)

| Method | Topic Quality Metrics |

|---|---|

| Use Case | Any topic model |

| Metrics | Diversity: unique words across topics; Exclusivity: words unique to single topics |

| Pros | Directly measures topic interpretability and separability |

| Cons | Somewhat heuristic, though intuitive and easy to interpret |

| Outcome in Our Case | Clear “elbow” observed between [50-75] components for both diversity and exclusivity, indicating high-quality topics (see outcome of code chunk below) |

We’ll use topic stability and topic quality (i.e exclusivity+diversity) to select the number of LSA component in this particular case.

Why topic stability + topic quality?

In our case, traditional quantitative metrics like explained variance and AIC/BIC did not yield clear-cut or meaningful selection criteria. Instead, we observed visually and quantitatively well-defined optima (see the results of the code chunk below) using:

- Topic Stability: A strong indicator of model consistency across different initializations.

- Topic Quality (Diversity + Exclusivity): Highlighted a point where topics are both distinct and interpretable.

This dual-criterion approach is particularly well-suited to exploratory or unsupervised text analysis, where interpretability and robustness matter more than pure statistical fit.

Higher stability (Jaccard similarity) indicates more consistent topics across different runs, while quality metrics tell you how interpretable those topics are. When selecting the optimal number of components for LSA, we’re looking for:

- Stability (Jaccard similarity) as high as possible - indicating that our topic assignments are consistent across different runs rather than randomly changing.

- Quality metrics (Diversity and Exclusivity) that have reached an elbow point - where adding more components gives diminishing returns.

In our graphs (see results of code chunk below), around 50-75 components seems to be where both conditions start to be met. After this point:

- Stability levels off and even slightly improves

- Quality metrics stop their steep decline and flatten out

This “elbow point” represents a sweet spot where we have enough components to capture meaningful patterns in our data, but not so many that we’re just modeling noise or creating unstable, overlapping topics. A lower component count (e.g 10) might give higher absolute stability scores but at the cost of poor quality metrics, while very high component counts don’t meaningfully improve any of the metrics.

We choose 70 here.

fine_grained_range = list(range(1, 51))+list(range(60, 201, 10)) #iteration range (step of 1 from 1 to 50 and step of 10 between 50 and 200).

# Extract topics from SVD

def get_topics_from_svd(svd_model, feature_names, n_top_words=10):

word_indices = np.argsort(svd_model.components_, axis=1)[:, -n_top_words:]

return np.array(feature_names)[word_indices][:, ::-1]

# 1. Topic Stability

def evaluate_stability(X_tfidf, feature_names, component_range, n_runs=5, n_top_words=10):

stability_scores = []

for n_components in component_range:

runs_topics = []

for seed in range(n_runs):

svd = TruncatedSVD(n_components=n_components, random_state=seed)

svd.fit(X_tfidf)

topics = get_topics_from_svd(svd, feature_names, n_top_words)

runs_topics.append(topics)

sim_scores = []

for i in range(n_runs):

for j in range(i + 1, n_runs):

sim = [

len(set(t1) & set(t2)) / len(set(t1) | set(t2))

for t1, t2 in zip(runs_topics[i], runs_topics[j])

]

sim_scores.append(np.mean(sim))

stability_scores.append(np.mean(sim_scores))

plt.figure(figsize=(10, 6))

plt.plot(component_range, stability_scores, 'm-o')

plt.xlabel('Components')

plt.ylabel('Avg Jaccard Similarity')

plt.title('Topic Stability Across Runs')

plt.grid(True)

plt.tight_layout()

plt.show()

return stability_scores

# 2. Topic Quality

def calculate_topic_metrics(X_tfidf, feature_names, component_range, n_top_words=10):

diversity_scores, exclusivity_scores = [], []

for n_components in component_range:

svd = TruncatedSVD(n_components=n_components, random_state=42)

svd.fit(X_tfidf)

topics = get_topics_from_svd(svd, feature_names, n_top_words)

all_words = [word for topic in topics for word in topic]

diversity = len(set(all_words)) / len(all_words)

counts = Counter(all_words)

exclusivity = sum(1 for v in counts.values() if v == 1) / len(counts)

diversity_scores.append(diversity)

exclusivity_scores.append(exclusivity)

plt.figure(figsize=(10, 6))

plt.plot(component_range, diversity_scores, label='Diversity', color='blue')

plt.plot(component_range, exclusivity_scores, label='Exclusivity', color='red')

plt.xlabel('Components')

plt.ylabel('Score')

plt.title('Topic Quality Metrics')

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

return diversity_scores, exclusivity_scores

# 3. Component Analysis function

def analyze_components(X_tfidf, final_texts, vectorizer, component_range):

feature_names = vectorizer.get_feature_names_out()

dictionary = Dictionary(final_texts)

print("\n1. Evaluating Topic Stability...")

stability_scores = evaluate_stability(X_tfidf, feature_names, component_range)

print("\n2. Evaluating Topic Quality...")

diversity_scores, exclusivity_scores = calculate_topic_metrics(X_tfidf, feature_names, component_range)

print("\n=== Analysis Complete ===")

print("Based on the plots, manually set the optimal number of components.")

return None

# Run the analysis to generate plots

analyze_components(X_tfidf, final_texts, vectorizer, fine_grained_range)

# After viewing the plots, set your chosen number of components here:

selected_components = 70 # Change this value based on your analysis of the plots

print(f"\nSelected number of components: {selected_components}")1. Evaluating Topic Stability...

2. Evaluating Topic Quality...

=== Analysis Complete ===

Based on the plots, manually set the optimal number of components.

Selected number of components: 70After the number of components (“topics”) have been selected, we’re ready to run LSA.

svd = TruncatedSVD(n_components=selected_components, random_state=42)

X_lsa = svd.fit_transform(X_tfidf)

lsa_cols = [f'lsa_{i}' for i in range(selected_components)] # lsa_0, lsa_1, ..., lsa_n-1

X_lsa_df = pd.DataFrame(X_lsa, columns=lsa_cols)Clustering

For this part, we’ll combine both the matrix obtained with LSA and metadata from the original liar dataframe (the one-hot encoded subject columns i.e the columns prefixed with subj_, the column with information about the speaker i.e speaker, the date column i.e date and the column that contains information about how true the statement is i.e perc_true).

Before we proceed, let’s have a quick look at missing values in the metadata.

selected_columns = ['speaker', 'date', 'perc_true']

# Dynamically add all columns starting with 'subj_'

subj_columns = [col for col in liar.columns if col.startswith('subj_')]

# Combine the initial selection with the dynamically selected columns

final_columns = selected_columns + subj_columns

missing_counts = liar[final_columns].isna().sum()

# Convert to DataFrame for better visualization

missing_summary = missing_counts.reset_index()

missing_summary.columns = ['Column', 'Missing Values']

missing_summary| Column | Missing Values | |

|---|---|---|

| 0 | speaker | 7 |

| 1 | date | 7 |

| 2 | perc_true | 0 |

| 3 | subj_government_regulation | 0 |

| 4 | subj_polls_and_public_opinion | 0 |

| … | … | … |

| 177 | subj_katrina | 0 |

| 178 | subj_ohio | 0 |

| 179 | subj_nbc | 0 |

| 180 | subj_georgia | 0 |

| 181 | subj_missouri | 0 |

182 rows × 2 columns

There are a few missing values for speaker and date (and not obvious way to impute them). So we’ll just drop those rows before we proceed with clustering.

# Identify valid (non-missing) rows based on speaker and date

valid_rows = liar["speaker"].notna() & liar["date"].notna()

# Filter liar and X_lsa accordingly

liar_clean = liar[valid_rows].copy()

liar_clean.reset_index(drop=True, inplace=True)We drop the rows from the LSA matrix that correspond to missing values of speaker and date.

X_lsa_clean_df = X_lsa_df[valid_rows].copy()

# Reset the index for consistency

X_lsa_clean_df.reset_index(drop=True, inplace=True)Now, we’re ready to pre-process the metadata before going on with clustering:

- the

subj_columns are already one-hot encoded and don’t require further pre-processing - similarly,

perc_trueis already scaled (it’s in a scale between 0-1) and doesn’t require further pre-processing - for speakers, we only keep the top 20 speakers and replace the values of the others by “Other” (this prevents having data that is too sparse) before one-hot encoding the

speakercolumn - for the

datecolumn, we only keep the year, bin years into discrete intervals (e.g., 1999-2005, 2005-2010, etc.) then encode these intervals as numerical labels for modeling

# 1. Use subj_ columns as-is

subj_cols = liar_clean.filter(regex=r'^subj_').columns

X_subj = liar_clean[subj_cols] # already binary, no processing needed

# 2. Use perc_true as-is

X_truth = liar_clean[["perc_true"]]

# 3. Process speaker — limit to top N, encode rest as "Other", then one-hot

top_speakers = liar_clean["speaker"].value_counts().nlargest(20).index

liar_clean["speaker_grouped"] = liar_clean["speaker"].where(liar_clean["speaker"].isin(top_speakers), "Other")

speaker_encoder = OneHotEncoder(sparse_output=False, handle_unknown='ignore')

speaker_encoded = speaker_encoder.fit_transform(liar_clean[["speaker_grouped"]])

speaker_cols = speaker_encoder.get_feature_names_out(["speaker_grouped"])

df_speaker = pd.DataFrame(speaker_encoded, columns=speaker_cols, index=liar_clean.index).astype(int)

# 4. Process date — bin by year

liar_clean["year"] = pd.to_datetime(liar_clean["date"]).dt.year

df_year = pd.cut(liar_clean["year"], bins=[1999, 2005, 2010, 2015, 2020, 2025], labels=False)

df_year = pd.DataFrame({"year_encoded": df_year.astype(int)}, index=liar_clean.index)

df_meta = pd.concat([X_subj, X_truth, df_speaker, df_year], axis=1)

meta_cols = df_meta.columns.tolist()We’re now ready for clustering. Since we are combining both numeric and categorical features in our input matrix, our metric of choice is the Gower distance/similarity, which supports mixed data types. This rules out some standard algorithms:

- KMeans is incompatible because it assumes Euclidean space and doesn’t handle categorical features.

- DBSCAN works with Gower but fails on this dataset due to variable densities — we tested it but omit the results for clarity (the same is true for other density-based techniques that extend DBSCAN such as HDBSCAN and OPTICS: our tests, also not shown for concision, reveal the same pattern as with DBSCAN i.e clustering the whole data into a single cluster).

Instead, we explore three algorithms: - KMedoids, which is robust and Gower-compatible

- Spectral Clustering, ideal for complex structures

- Fuzzy C-Means, a soft clustering method that offers probabilistic assignments

🧩 KMedoids

KMedoids is a partitioning-based clustering method related to KMeans, but it selects actual data points (medoids) as cluster centers rather than computing means. This makes it more interpretable and robust to noise, especially when using arbitrary distance metrics like Gower.

How it works:

- Initialize

kmedoids randomly - Assign each point to the nearest medoid using Gower distance

- Swap medoids to minimize overall intra-cluster dissimilarity

- Repeat until convergence

| Feature | KMeans | KMedoids |

|---|---|---|

| Cluster center | Mean | Actual data point (medoid) |

| Distance metric | Euclidean | Any (e.g., Gower) |

| Noise sensitivity | High | Lower |

| Mixed data support | ❌ | ✅ (with Gower) |

| Interpretability | Lower | Higher |

✅ Pros & ❌ Cons

| Pros | Cons |

|---|---|

| Works with arbitrary distances (e.g., Gower) | Slower than KMeans on large datasets |

| More robust to noise and outliers | Still requires k to be known |

| Medoids are real data points (interpretable) | Less scalable than centroid-based methods |

🌈 Spectral Clustering

Spectral Clustering is a graph-based method that uses the eigenstructure of a similarity matrix to uncover clusters. It excels in detecting non-convex, manifold-shaped, or globally-connected clusters — useful in complex datasets combining text and metadata.

How it works:

- Convert Gower distances to similarities (e.g.,

1 - Gower) - Build a graph Laplacian from the similarity matrix

- Compute eigenvectors (spectral embedding)

- Apply clustering (typically KMeans) in the embedded space

| Feature | Spectral Clustering | KMedoids |

|---|---|---|

| Graph-based? | ✅ | ❌ |

Requires k? |

✅ | ✅ |

| Handles mixed data? | ✅ (via Gower similarity) | ✅ (via Gower distance) |

| Works with complex shapes? | ✅ | Moderate |

✅ Pros & ❌ Cons

| Pros | Cons |

|---|---|

| Captures global data structure | Requires precomputed similarity matrix |

| Ideal for complex, non-linear cluster shapes | Memory-intensive on large datasets |

| Supports Gower-derived similarity | Requires number of clusters (k) |

| Good for clustering on text + metadata | Sensitive to scale in similarity values |

🌫️ Fuzzy C-Means

Fuzzy C-Means (FCM) is a soft clustering algorithm that assigns partial membership to clusters — useful when cluster boundaries are ambiguous, as with political statements or nuanced text.

⚠️ Limitation: FCM requires Euclidean distance, so we must convert all features to numeric, typically through one-hot encoding for categoricals. This allows the algorithm to run but slightly changes the nature of the data.

How it works:

- Initialize cluster centers

- Compute fuzzy membership scores for each point

- Update cluster centers using weighted averages

- Repeat until convergence

✅ Pros & ❌ Cons

| Pros | Cons |

|---|---|

| Captures ambiguity and overlapping clusters | Requires numeric-only input (not compatible with Gower) |

| Soft assignments give richer interpretation | Sensitive to outliers and initialization |

| Useful when clusters are not crisply defined | Assumes Euclidean space → categorical encoding may distort data |

🧮 Clustering Comparison

| Feature | KMedoids / Spectral | Fuzzy C-Means |

|---|---|---|

| Assignment type | Hard (1 cluster per point) | Soft (multiple memberships per point) |

| Input requirement | Precomputed distances (e.g., Gower for mixed data) | Numeric matrix (e.g., one-hot encoded categoricals) |

| Distance metric | Arbitrary (via Gower) | Euclidean (assumes numeric structure) |

| Handles ambiguity? | ❌ | ✅ |

| Works with Gower? | ✅ (direct Gower compatibility) | ❌ (not directly — requires transformation) |

| Handles mixed data? | ✅ (via Gower) | ⚠️ Indirectly — requires numeric encoding |

⚠️ Note: While Fuzzy C-Means doesn’t natively handle categorical variables, it can be applied after transforming mixed data into a fully numeric matrix (e.g., via one-hot encoding). However, doing so introduces Euclidean assumptions that may not reflect true semantic distances, especially with sparse features.

# Function to sanitize text for plotting

def sanitize_text(statement, max_length=100):

sanitized = statement.replace('$', r'\$').replace('"', r'\"').replace("'", r"\'")

return sanitized[:max_length] + '...' if len(sanitized) > max_length else sanitized

df_combined = pd.concat([X_lsa_clean_df.reset_index(drop=True), df_meta.reset_index(drop=True)], axis=1)

# --- Set correct dtypes ---

# subj_ columns: binary categorical → set to object for Gower to detect them

for col in subj_cols:

df_combined[col] = df_combined[col].astype("object")

# perc_true: numeric

df_combined["perc_true"] = df_combined["perc_true"].astype(float)

# speaker columns (already one-hot encoded): also binary categorical

for col in df_speaker.columns:

df_combined[col] = df_combined[col].astype("object")

# year_encoded: ordinal categorical

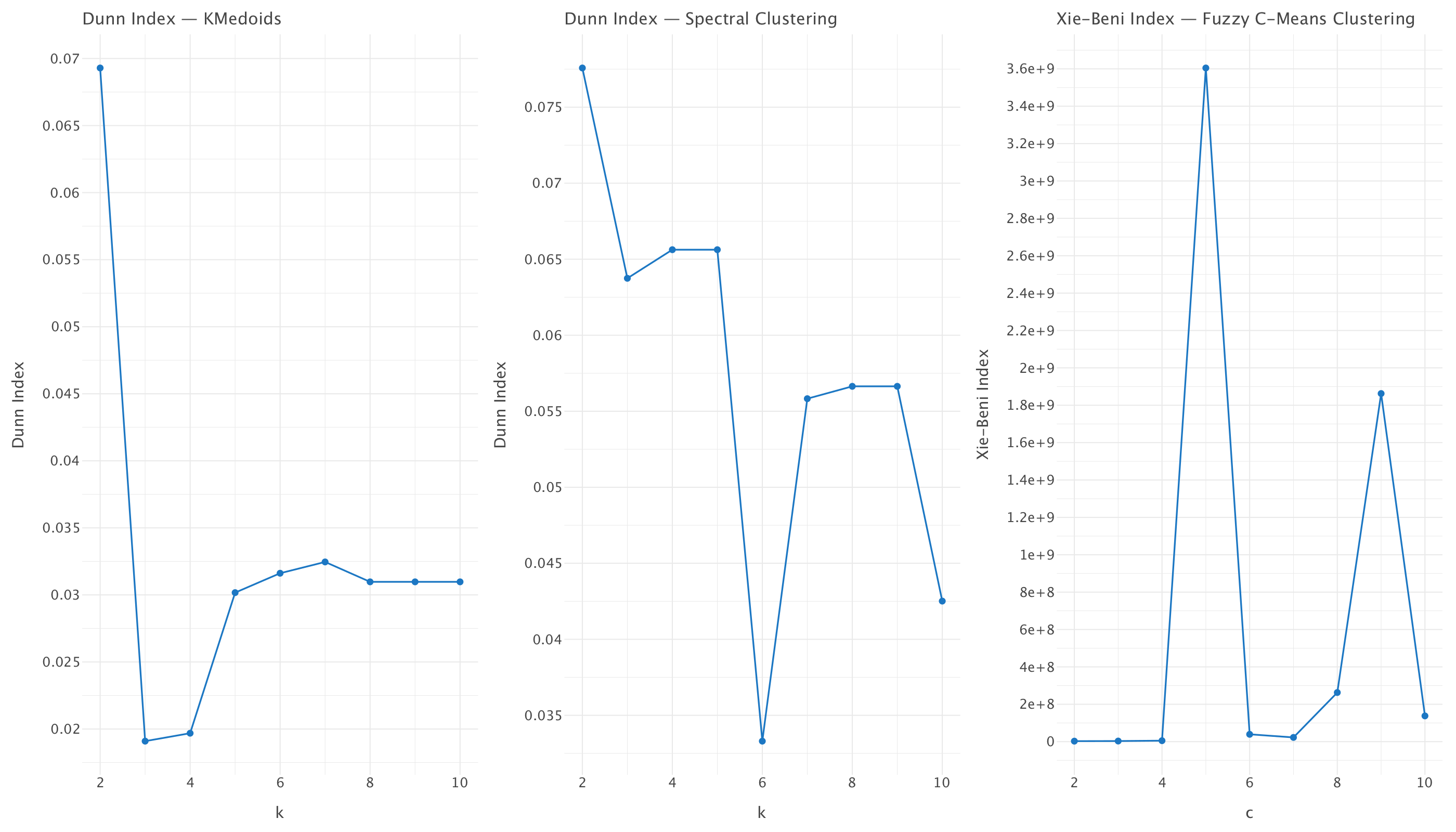

df_combined["year_encoded"] = df_combined["year_encoded"].astype("object")We need to determine the number of clusters for Spectral Clustering, KMedoid, and Fuzzy C-Means. For Spectral and KMedoid, we use the Dunn Index. For Fuzzy C-Means, which produces soft cluster memberships, we use the Xie-Beni Index, a metric specifically designed for fuzzy clustering.

The Dunn Index is more robust than the Silhouette score when working with Gower distance for mixed data types. While both metrics can technically work with precomputed distance matrices, the Dunn Index’s focus on cluster separation rather than compactness makes it better suited for the non-Euclidean nature of Gower distances. Other metrics like Calinski-Harabasz and Davies-Bouldin require Euclidean spaces and cannot be directly applied to Gower distances without transformation.

📊 Dunn Index

What is it?

The Dunn Index is an internal clustering validation metric defined as:

Dunn Index = (Minimum inter-cluster distance) / (Maximum intra-cluster distance)

- Encourages tight, well-separated clusters

- Used across different

kvalues to select the best clustering

Use in Practice

- Higher Dunn Index = better clustering

- Used to choose

kfor Spectral Clustering and KMedoids

| Aspect | Value |

|---|---|

| Best when | Inter-cluster distance is high, intra-cluster tight |

| Output range | ≥ 0 (higher is better) |

| Pros | Simple, interpretable |

| Cons | Sensitive to noise |

🌀 Xie-Beni Index

What is it?

The Xie-Beni Index is a validity metric tailored to fuzzy clustering (like Fuzzy C-Means). It considers both fuzzy membership strength and cluster separation, offering a balance between compactness and separation in soft clustering contexts.

Xie-Beni Index = (Total weighted intra-cluster variance) / (Minimum cluster center distance²)

Why not Dunn or other indices?

Unlike Spectral or KMedoid, Fuzzy C-Means doesn’t assign each point to a single cluster. Instead, each point has degrees of membership across all clusters. Traditional validation metrics like Dunn, Silhouette, Calinski-Harabasz, or Davies-Bouldin are designed for hard (crisp) clustering, where every point belongs to exactly one cluster. Applying these to fuzzy results would require forced binarization (e.g. via argmax), which discards the soft nature of the clustering and leads to misleading evaluations.

The Xie-Beni Index, by contrast:

- Accounts for soft assignments directly (using membership weights)

- Evaluates the balance between compactness and separation in the fuzzy space

- Naturally penalizes overlapping clusters and under-separation

Use in Practice

- Lower Xie-Beni Index = better clustering

- Used to choose

c(number of clusters) for Fuzzy C-Means

| Aspect | Value |

|---|---|

| Best when | Clusters are compact and well-separated with low overlap |

| Output range | ≥ 0 (lower is better) |

| Pros | Designed for fuzzy clustering, interprets soft labels |

| Cons | Sensitive to outliers and high-dimensional noise |

def dunn_index(distance_matrix, labels):

unique_clusters = np.unique(labels)

intra_dists = []

for i in unique_clusters:

cluster_indices = np.where(labels == i)[0]

if len(cluster_indices) > 1:

intra_cluster_dist = np.max(distance_matrix[np.ix_(cluster_indices, cluster_indices)])

else:

intra_cluster_dist = 0

intra_dists.append(intra_cluster_dist)

inter_dists = []

for i in unique_clusters:

for j in unique_clusters:

if i < j:

idx_i = np.where(labels == i)[0]

idx_j = np.where(labels == j)[0]

if len(idx_i) > 0 and len(idx_j) > 0:

inter_cluster_dist = np.min(distance_matrix[np.ix_(idx_i, idx_j)])

inter_dists.append(inter_cluster_dist)

if not intra_dists or not inter_dists or np.max(intra_dists) == 0:

return np.nan

return np.min(inter_dists) / np.max(intra_dists)

def dunn_evaluation(distance_matrix, mode="kmedoids", values=None):

scores = []

labels_all = []

if values is None:

values = range(2, 11)

for v in tqdm(values, desc=f"Dunn Index ({mode})"):

try:

match mode:

case "kmedoids":

model = KMedoids(n_clusters=v, metric='precomputed', random_state=42)

model.fit(distance_matrix)

labels = model.labels_

case "spectral":

sim = 1 - distance_matrix

model = SpectralClustering(n_clusters=v, affinity='precomputed', random_state=42).fit(sim)

labels = model.labels_

case _:

raise ValueError(f"Unsupported mode: {mode}")

score = dunn_index(distance_matrix, labels)

scores.append(score)

labels_all.append(labels)

except Exception as e:

print(f"{mode} {v} failed: {e}")

scores.append(np.nan)

labels_all.append(None)

return values, scores, labels_all

def plot_index(values, scores, title, x_label="k",index="Dunn"):

df = pd.DataFrame({'value': values, 'dunn': scores}).dropna()

return (

ggplot(df, aes(x='value', y='dunn')) +

geom_line(color='#1C77C3') +

geom_point(color='#1C77C3', size=3) +

ggtitle(title) +

xlab(x_label) + ylab(index+" Index") +

theme_minimal()

)

def xie_beni_index(X, u, centers, m=2):

n_clusters = centers.shape[0]

N = X.shape[0]

um = u ** m

dist = np.zeros((n_clusters, N))

for k in range(n_clusters):

dist[k] = np.linalg.norm(X - centers[k], axis=1) ** 2

compactness = np.sum(um * dist)

min_dist = np.min([

np.linalg.norm(centers[i] - centers[j]) ** 2

for i in range(n_clusters) for j in range(n_clusters) if i != j

])

return compactness / (N * min_dist)

def xie_beni_evaluation(X, values=range(2, 11)):

scores = []

labels_all = []

for c in tqdm(values, desc="Xie-Beni Index (Fuzzy C-Means)"):

try:

cntr, u, _, _, _, _, _ = cmeans(

X.T, c, m=2.0, error=0.005, maxiter=1000, init=None

)

xb = xie_beni_index(X, u, cntr)

scores.append(xb)

labels_all.append(np.argmax(u, axis=0)) # hard labels

except Exception as e:

print(f"Fuzzy c={c} failed: {e}")

scores.append(np.nan)

labels_all.append(None)

return values, scores, labels_all

#Computing the Gower distance matrix

gower_dist = gower_matrix(df_combined)

# KMedoids

ks_k, dunn_k, labels_k = dunn_evaluation(gower_dist, mode="kmedoids", values=range(2, 11))

plot_kmedoid = plot_index(ks_k, dunn_k, title="Dunn Index — KMedoids", x_label="k",index="Dunn")

# Spectral

ks_s, dunn_s, labels_s = dunn_evaluation(gower_dist, mode="spectral", values=range(2, 11))

plot_spectral = plot_index(ks_s, dunn_s, title="Dunn Index — Spectral Clustering", x_label="k",index="Dunn")

# Fuzzy

fuzzy_input = df_combined.copy()

for col in fuzzy_input.columns:

if fuzzy_input[col].dtype == 'object':

# Try to convert to numeric

try:

fuzzy_input[col] = pd.to_numeric(fuzzy_input[col], errors='raise')

except:

# If that fails, use factorize (for true categorical text)

fuzzy_input[col] = pd.factorize(fuzzy_input[col])[0]

# At this point, all columns should be numeric

fuzzy_input = fuzzy_input.fillna(0).astype(np.float64)

X_fuzzy = fuzzy_input.values

c_means_input = X_fuzzy.T

ks_f, xie_beni_f, labels_f = xie_beni_evaluation(c_means_input, values=range(2, 11))

plot_fuzzy = plot_index(ks_f, xie_beni_f, title="Xie-Beni Index — Fuzzy C-Means Clustering", x_label="c",index="Xie-Beni")Dunn Index (kmedoids): 100%|██████████| 9/9 [00:04<00:00, 2.06it/s]

Dunn Index (spectral): 100%|██████████| 9/9 [00:45<00:00, 5.09s/it]

Xie-Beni Index (Fuzzy C-Means): 100%|██████████| 9/9 [00:04<00:00, 2.09it/s]g=gggrid([

plot_kmedoid,

plot_spectral,

plot_fuzzy

], ncol=3)

g+=ggsize(1400,800)

g

Looking at these plots, each clustering method shows different patterns for determining the optimal number of clusters:

KMedoids (Dunn Index): Shows the highest value at k=2, then drops significantly and gradually increases until k=6 before flattening. While k=2 has the highest Dunn Index, this could be creating overly broad clusters. The “elbow” appears around k=5, which might represent a better balance between cluster separation and meaningful groupings.

Spectral Clustering (Dunn Index): Shows the highest value at k=2, with another peak at k=4-5, then a drop at k=6, followed by another stable period at k=7-9. The interesting feature here is the local maximum at k=4-5, suggesting these might be meaningful cluster counts.

Fuzzy C-Means (Xie-Beni Index): For Xie-Beni Index, lower values indicate better clustering. There’s a significant spike at c=5, which should be avoided. The lowest values appear at c=2,c=3 and c=7, with c=2-3 showing the absolute minimum.

To select the optimal number of clusters, we should compare across methods and look for agreement between methods. \(K=7\) appears to be reasonable across all three plots (rising in KMedoids, local peak in Spectral, local minimum in Fuzzy C-Means).

Given the evidence from these plots, k=7 appears to be a reasonable choice that’s supported across methods while avoiding the extreme values that might represent either too few or too many clusters.

# Try a fixed number of clusters

gower_sim = 1-gower_dist

n_clusters = 7

# --- Spectral Clustering ---

spec_7clusters = SpectralClustering(n_clusters=n_clusters, affinity='precomputed', random_state=42)

labels_spec_7clusters = spec_7clusters.fit_predict(gower_sim)

kmedoids_7clusters = KMedoids(n_clusters=n_clusters, metric='precomputed',random_state=42,max_iter=300)

# Fit the model

kmedoids_7clusters.fit(gower_dist)

# Get cluster labels

labels_7clusters = kmedoids_7clusters.labels_

# Run Fuzzy C-means

cntr_7clust, u_7clust, _, _, _, _, _ = cmeans(

c_means_input, c=n_clusters, m=2.0, error=0.005, maxiter=1000, init=None

)

labels_fuzzy_7clust = np.argmax(u_7clust, axis=0)Since our clustering is performed on the high dimensional matrix directly (5733 by 5733 Gower distance matrix for Spectral clustering and KMedoid and original 5733 by 272 matrix for Fuzzy C-means!), we need to reduce the dimensionality of our data with UMAP to be able to visualise it.

umap_model_2d = umap.UMAP(n_components=2, metric='precomputed', random_state=42)

umap_embedding_2d = umap_model_2d.fit_transform(gower_dist)

umap_model_fuzzy = umap.UMAP(n_components=2, metric='euclidean', random_state=42)

umap_embedding_fuzzy = umap_model_fuzzy.fit_transform(X_fuzzy)

# Plotting helper

def plot_clusters(xy, labels, title, meta):

df_plot = pd.DataFrame({

"x": xy[:, 0],

"y": xy[:, 1],

"cluster": labels.astype(str), # 👈 Cast to string

"statement": meta["statement"],

"perc_true": meta["perc_true"],

"speaker": meta["speaker"],

"date": meta["date"]

})

fig = px.scatter(df_plot, x='x', y='y', color='cluster',

hover_data=["statement", "perc_true", "speaker", "date"],

opacity=0.7, title=title,

color_discrete_sequence=px.colors.qualitative.T10) # optional: better color palette

fig.show()

X_combined_index = liar_clean.index

# Plot Spectral

plot_clusters(umap_embedding_2d, labels_spec_7clusters, "Spectral Clustering (n=7)", liar_clean.loc[X_combined_index])

# Plot KMedoid

plot_clusters(umap_embedding_2d, labels_7clusters, "KMedoid Clustering (n=7)", liar_clean.loc[X_combined_index])

plot_clusters(umap_embedding_fuzzy, labels_fuzzy_7clust, "Fuzzy C-Means Clustering (n=7)", liar_clean.loc[X_combined_index])What does this clustering tell us?

Looking at our clustering results with n=7 across the three different methods (Fuzzy C-Means, KMedoid, and Spectral Clustering), alongside differences in data preprocessing, we can draw several important insights regarding our dataset’s underlying structure and the suitability of this cluster count.

Fuzzy C-Means Clustering (n=7 on raw mixed data)

Despite specifying 7 clusters, the visualization reveals only two distinct clusters (labeled 3 and 4), with cluster 4 showing some internal structure. This outcome suggests:

- The algorithm has effectively collapsed the data into a binary structure, assigning almost all points to just two major groups.

- This may indicate that our original feature space contains a dominant binary signal, possibly due to a few influential features.

- Membership values are largely concentrated, which implies that the fuzzy clustering doesn’t detect 7 meaningful divisions in this raw representation.

This aligns with our validation metrics (Dunn Index and Xie-Beni), both of which also pointed to 2–3 clusters as potentially optimal.

KMedoid Clustering (n=7 on Gower distance matrix)

This method produced well-separated clusters across the 2D UMAP projection, with all 7 clusters (0–6) clearly represented:

- Some clusters (e.g., 0, 1, 2) are distinctly separated, particularly on the left side of the visualization.

- Others (3, 4, 6) show more overlap in the center-right region, suggesting either fuzzier boundaries or latent substructure.

- Clusters generally appear coherent and well-shaped, indicating meaningful groupings in the transformed space.

Using the Gower distance matrix here proves advantageous, as it allows for balanced handling of both categorical and numeric features in your dataset.

Spectral Clustering (n=7 on Gower distance matrix)

Spectral clustering yielded a similar structure to KMedoid, supporting the validity of the Gower representation:

- All 7 clusters are visible, with cluster 3 forming a distinct band.

- While the overall shapes differ slightly from KMedoid (especially in the right half), the core structural patterns are consistent.

- There is some overlap between clusters, but also clear regions of separation, especially on the left.

Comparison Across Methods

- Consistency:

- KMedoid and Spectral clustering show similar global patterns, validating the structure uncovered via the Gower matrix.

- Fuzzy C-Means deviates significantly, emphasizing a binary grouping — this difference stems from working directly on the raw feature space.

- Data Representation Matters:

- Fuzzy C-Means is sensitive to dominant features in the raw data and may be overpowered by a few strong signals.

- The Gower distance matrix enables more nuanced group detection by normalizing contributions across mixed feature types.

- Cluster Separability and Interpretability:

- Fuzzy C-Means suggests a high-level binary division might be most natural.

- KMedoid and Spectral offer finer-grained subgroups, useful for in-depth exploration or downstream tasks like classification or profiling.

Conclusions

- If our goal is high-level categorization (e.g., separating broad truth/falsity groupings or political leanings):

- Fuzzy C-Means with 2–3 clusters might be optimal.

- If our goal is detailed segmentation of our dataset that respects the complex interplay of text and metadata:

- KMedoid or Spectral clustering with n=7, using the Gower matrix, provides better differentiation and structure.

Digging a bit further into Fuzzy C-Means

A peculiarity of Fuzzy C-Means is that it doesn’t quite assign a definitive cluster label to each data point. Instead, it produces a membership matrix \(U\), where each entry \(u_{ij}\) indicates the degree to which point \(j\) belongs to cluster \(i\). These degrees sum to 1 across clusters for each point, reflecting a soft assignment.

The typical practice of assigning each data point to a single cluster is done a posteriori by selecting the cluster with the highest membership score — mathematically, by taking the argmax over the membership matrix. This effectively converts the soft assignment into a hard label, creating a crisp partition similar to what you’d get from KMeans or KMedoids. While this makes comparison across clustering methods easier, it discards the soft assignment information — and in doing so, may obscure meaningful ambiguity or uncertainty in the data.

One way to better understand this ambiguity is to visualize the maximum membership strength each point has. A value near 1 indicates a confident assignment, while lower values suggest that the point lies closer to a boundary between clusters, or shares affinities with multiple groupings.

Below is a UMAP projection of the data used in the fuzzy clustering step, where points are colored by their maximum membership value:

# Max membership value per point (degree of "confidence")

max_membership = u_7clust.max(axis=0)

# Create a DataFrame for plotting

df_membership_plot = pd.DataFrame({

"x": umap_embedding_fuzzy[:, 0],

"y": umap_embedding_fuzzy[:, 1],

"max_membership": max_membership

})

# Plot: color by maximum membership value

fig = px.scatter(df_membership_plot, x='x', y='y',

color='max_membership',

color_continuous_scale='viridis',

title="Fuzzy C-Means: Maximum Membership Strength (UMAP projection)",

opacity=0.75)

fig.show()This plot gives a more nuanced view of the Fuzzy C-Means clustering result: regions with high membership indicate strong, unambiguous cluster identity, while more diffuse or mixed-color areas highlight zones of uncertainty — where Fuzzy C-Means acknowledges that the data doesn’t neatly separate.

Key Observations

Cluster Layout - A large, elongated central mass stretches horizontally around ( y ), spanning from roughly ( x = -5 ) to ( x = 7 ) - Several more compact clusters are visible on the far right (( x > 8 )), some of which show more distinct structure - A scattering of isolated points and micro-clusters appears at the edges

Membership Strength Distribution - The color gradient ranges from approximately 0.143 (deep purple) to 0.144 (yellow-green) - This extremely narrow range of maximum membership values suggests that nearly all points have roughly equal partial membership across all 7 clusters - Points in the rightmost clusters tend to have slightly higher confidence (brighter coloring), whereas the central mass is uniformly ambiguous (darker tones)

Interpretation and Implications

High Ambiguity in Cluster Assignments

The theoretical maximum membership value for 7 equally overlapping clusters is about 1/7 ≈ 0.143, which matches the observed range. This strongly suggests that most points are not confidently assigned to any single cluster — an indicator of high overlap and fuzzy boundaries.Possible Overclustering

The absence of high membership values indicates that 7 clusters may be too many for the structure present in the data. Previous validation metrics like the Xie-Beni and Dunn index pointed to 2–5 clusters as more optimal. The current setting may be splitting natural groupings unnecessarily, resulting in soft, indistinct divisions.Heterogeneous Data Space

The combination of textual features (via LSA) and metadata likely produces a high-dimensional, mixed-type space. In such settings, fuzzy clustering can struggle to identify compact, well-separated groups, especially when dominant features dilute signal from weaker but meaningful ones.Localized Certainty in Some Regions

The right-side clusters in the UMAP projection show slightly higher membership values, suggesting that some portions of the data do form clearer, more self-contained clusters — even if the overall structure remains diffuse.

Conclusion

The Fuzzy C-Means result, viewed through the lens of maximum membership strength, reveals that the clustering model sees the dataset as highly ambiguous — with few points belonging clearly to a single group. This, combined with visual cues and clustering metrics, suggests that:

- Fewer clusters (e.g. 2–5) might better reflect the natural structure

- Fuzzy C-Means is sensitive to the representation used; preprocessing and distance choice matter

- For this dataset, methods that better accommodate mixed data types (like KMedoids or Spectral Clustering with Gower distance) may offer sharper partitions and more interpretable structure

This visualization offers a valuable diagnostic tool: it doesn’t just show where points fall in space, but how certain the algorithm is about their group identity — and that uncertainty speaks volumes.

🧠 Consensus Clustering: What is it and why might we need it?

Instead of relying on just one clustering algorithm, we can go a step further: what if we combined the results from all the methods we’ve tested — like KMedoid, Spectral Clustering, and Fuzzy C-Means — into a unified solution?

This is the idea behind consensus clustering. It’s particularly useful when clustering outputs are noisy, diverging, or hard to interpret. A consensus can consolidate differing outputs, reduce variance, and often improve robustness by integrating complementary perspectives on the data.

When multiple clustering algorithms yield divergent results, consensus clustering offers a principled way to combine these perspectives into a single, unified clustering. Here, we explore three common methods:

1. 🧮 Hard Voting (Majority Rule)

How it Works: Each clustering method “votes” on the label for each data point. The most frequent label across methods is chosen as the consensus assignment.

- If there is a tie, it can be broken randomly or resolved by a priority scheme.

Example:

| Data Point | KMedoid | Spectral | Fuzzy (argmax) | Consensus Label |

|---|---|---|---|---|

| A | 0 | 1 | 0 | 0 (2 votes) |

| B | 1 | 1 | 2 | 1 (2 votes) |

| C | 2 | 2 | 2 | 2 (3 votes) |

✅ Pros vs ❌ Cons

| Pros | Cons |

|---|---|

| Simple, fast, and interpretable | Ignores uncertainty and cluster proximity |

| Doesn’t require distance metrics | Cannot handle ambiguity or soft clustering |

| Useful for clearly separable data | Fails if all methods strongly disagree (e.g. all labels different) |

2. 🔁 Reclustering on One-Hot Encoded Assignments

How it Works: - Each method’s cluster assignments are converted into one-hot encoded vectors. - These vectors are concatenated into a new feature matrix (per data point). - A clustering algorithm (e.g., Agglomerative Clustering) is then run on this matrix to find a consensus.

Example:

Say we have 3 clustering methods (each assigning labels for 3 clusters):

| Data Point | KMedoid One-Hot | Spectral One-Hot | Fuzzy Argmax One-Hot | Combined Vector |

|---|---|---|---|---|

| A | [1, 0, 0] | [0, 1, 0] | [1, 0, 0] | [1, 0, 0, 0, 1, 0, 1, 0, 0] |

| B | [0, 1, 0] | [0, 1, 0] | [0, 0, 1] | [0, 1, 0, 0, 1, 0, 0, 0, 1] |

| C | [0, 0, 1] | [1, 0, 0] | [0, 0, 1] | [0, 0, 1, 1, 0, 0, 0, 0, 1] |

This matrix becomes the input to a new clustering.

✅ Pros vs ❌ Cons

| Pros | Cons |

|---|---|

| Captures voting patterns across methods | Still treats each assignment as binary (no ambiguity) |

| Works well when clusters partially align | Sensitive to label encoding inconsistencies across methods |

| Doesn’t require original data or distance metrics | High-dimensional if many methods or clusters are used |

3. 🌈 Reclustering on One-Hot + Fuzzy Memberships

How it Works: This extends the one-hot strategy by appending the soft cluster membership scores from Fuzzy C-Means to the one-hot encoded vectors. This allows the consensus clustering to also consider the confidence levels in Fuzzy assignments.

Example:

| Data Point | Combined One-Hot (from above) | Fuzzy Memberships | Final Vector |

|---|---|---|---|

| A | [1, 0, 0, 0, 1, 0, 1, 0, 0] | [0.70, 0.20, 0.10] | [1, 0, 0, 0, 1, 0, 1, 0, 0, 0.70, 0.20, 0.10] |

| B | [0, 1, 0, 0, 1, 0, 0, 0, 1] | [0.35, 0.33, 0.32] | [0, 1, 0, 0, 1, 0, 0, 0, 1, 0.35, 0.33, 0.32] |

| C | [0, 0, 1, 1, 0, 0, 0, 0, 1] | [0.05, 0.15, 0.80] | [0, 0, 1, 1, 0, 0, 0, 0, 1, 0.05, 0.15, 0.80] |

This approach creates a richer, more expressive representation per point.

✅ Pros vs ❌ Cons

| Pros | Cons |

|---|---|

| Incorporates soft assignments → better reflects ambiguity | Slightly more complex to implement |

| Richer, high-dimensional representation with more nuance | Sensitive to differences in scale (needs normalization) |

| Can differentiate between ambiguous and confident clusterings | May require more sophisticated reclustering algorithms |

🧭 Which to Use?

| Use Case | Recommended Strategy |

|---|---|

| You want fast, simple consensus | Hard Voting |

| You want a method that reflects patterns across methods | One-Hot Reclustering |

| You want to incorporate ambiguity and confidence | One-Hot + Fuzzy Memberships |

First approach: Voting approach

# Stack labels from different methods (all should be of shape (n_samples,))

all_labels = np.vstack([

labels_spec_7clusters,

labels_7clusters, # KMedoids

labels_fuzzy_7clust # Fuzzy C-Means

])

# Compute the mode along axis=0 (i.e., majority vote for each point)

voted_labels, _ = mode(all_labels, axis=0, keepdims=False)

# Visualize using the same UMAP embedding (e.g., the Gower-based one)

plot_clusters(umap_embedding_2d, voted_labels, "Consensus Clustering (Voting Majority)", liar_clean.loc[X_combined_index])This first method predictably fails, since the labels of the three methods are not aligned.

Second approach : one-hot reclustering approach

# Combine cluster labels as categorical features

labels_matrix = np.vstack([

labels_spec_7clusters,

labels_7clusters,

labels_fuzzy_7clust

]).T # shape: (n_samples, 3)

# One-hot encode the cluster labels for each method

encoder = OneHotEncoder(sparse_output=False)

labels_onehot = encoder.fit_transform(labels_matrix)

# Perform Agglomerative Clustering on this feature matrix

agg_cluster = AgglomerativeClustering(n_clusters=n_clusters, metric='euclidean', linkage='ward')

labels_consensus_agg = agg_cluster.fit_predict(labels_onehot)

# Visualize

plot_clusters(umap_embedding_2d, labels_consensus_agg, "Consensus Clustering (One-Hot Reclustering approach)", liar_clean.loc[X_combined_index])The clusters are more defined with this approach but still quite noisy.

Third approach: One-hot + Fuzzy Memberships reclustering approach

# One-hot encode KMedoid and Spectral labels

labels_matrix = np.vstack([

labels_spec_7clusters,

labels_7clusters

]).T # shape (n_samples, 2)

from sklearn.preprocessing import OneHotEncoder

encoder = OneHotEncoder(sparse_output=False)

labels_onehot = encoder.fit_transform(labels_matrix) # shape (n_samples, 14) if 7 clusters each

# Concatenate fuzzy membership matrix (transpose to shape (n_samples, 7))

combined_features = np.hstack([

labels_onehot, # Hard cluster assignments

u_7clust.T # Soft assignments from FCM

])

# Agglomerative reclustering on combined cluster representations

agg_cluster_soft = AgglomerativeClustering(n_clusters=n_clusters, metric='euclidean', linkage='ward')

labels_consensus_soft = agg_cluster_soft.fit_predict(combined_features)

# Visualize

plot_clusters(umap_embedding_2d, labels_consensus_soft, "Consensus Clustering (One-hot + Fuzzy Memberships reclustering approach)", liar_clean.loc[X_combined_index])Quite predictably, this method performs best out of the consensus methods and the clusters are much more distinct.

Anomaly detection

After performing clustering, we take our analysis a step further by identifying anomalies i.e statements that don’t fit in with the rest/stand out the most.

Since anomaly detection often involves uncertainty and noisy signals, we’ll apply three different algorithms to get multiple perspectives: - Isolation Forest - Local Outlier Factor (LOF) - One-Class SVM

Just like with clustering, the results may differ. So we’ll combine them via consensus voting to identify strongly agreed-upon anomalies.

🌲 Isolation Forest

Isolation Forest works by isolating points in the data through recursive partitioning — anomalies are isolated quickly and thus have shorter average path lengths.

Key Parameter:

contamination=0.05: Specifies the expected proportion of outliers in the data (5%). This is important — the algorithm will “force” itself to label that fraction of points as anomalous.

🧭 Local Outlier Factor (LOF)

LOF measures local density deviation. Points that have significantly lower density compared to their neighbors are considered anomalies.

Key Parameters:

n_neighbors=20: Number of neighbors to use when estimating local density. Larger values make the model less sensitive to local fluctuations.contamination=0.05: Again, this sets the expected proportion of anomalies.

🧠 One-Class SVM (OC-SVM)

What is it?

One-Class SVM is a variant of the traditional SVM algorithm, adapted for unsupervised anomaly detection.

While standard SVMs are trained on labeled data to separate known classes (e.g. cat vs. dog), One-Class SVM takes only unlabeled data (assumed to be mostly “normal”) and tries to learn the boundary of this normal region.

✅ This makes it unsupervised — it requires no prior labeling of anomalies during training.

Imagine trying to wrap a tight boundary around all the “normal” data points. Any point falling outside this boundary is flagged as anomalous. This is especially helpful when anomalies are rare or not well-defined in advance.

How It Works:

- Learns a decision function that best encloses the data in a high-dimensional space.

- Uses the RBF (Radial Basis Function) kernel to capture non-linear boundaries.

- Points outside the learned hypersphere are labeled as anomalies.

Key Parameters:

kernel='rbf': Allows the model to find curved, non-linear boundaries in feature space.nu=0.05: An upper bound on the fraction of anomalies (outliers). Also acts as a regularizer.

Strengths

- Good for tight, compact clusters of normal data.

- Effective when anomalies are far away from the main distribution.

Weaknesses

- Can struggle with sparse or noisy data.

- Sensitive to feature scaling — data needs to be well-preprocessed.

- Not great if normal data is spread out or multi-modal.

🆚 One-Class SVM vs. Isolation Forest vs. LOF

| Feature | One-Class SVM | Isolation Forest | Local Outlier Factor (LOF) |

|---|---|---|---|

| Supervision | Unsupervised | Unsupervised | Unsupervised |

| Assumption | Most data is “normal” | Anomalies are easier to isolate | Anomalies have lower local density |

| Boundary Type | Tight enclosing boundary (global) | Random partitions (tree-based) | Local density comparison |

| Sensitivity | Global (sensitive to scaling) | Robust to high-dimensional noise | Local context-dependent |

| Interpretability | Moderate (abstract boundary) | High (tree-paths & feature splits) | Moderate (density comparison) |

| Best Use Case | Small-to-medium data; subtle anomalies | High-dimensional or noisy datasets | When local density variation is key |

🌳 Isolation Forest

# Step 1: Prepare data

df_isolation_ready = df_combined.copy()

# Convert categorical columns to integers

for col in df_isolation_ready.select_dtypes(include="object").columns:

df_isolation_ready[col] = df_isolation_ready[col].astype(int)

# Safe float conversion

X_isolation = df_isolation_ready.values.astype(float)

# Step 2: Dimensionality Reduction (UMAP)

umap_model = umap.UMAP(n_components=2, random_state=42)

X_umap = umap_model.fit_transform(X_isolation)

# Step 3: Fit Isolation Forest

iso_forest = IsolationForest(contamination=0.05, random_state=42)

y_pred_isolation = iso_forest.fit_predict(X_isolation)

# Step 4: Create a DataFrame for plotting

df_plot = pd.DataFrame({

"x": X_umap[:, 0],

"y": X_umap[:, 1],

"anomaly": y_pred_isolation

}, index=liar_clean.index)

df_plot["statement"] = liar_clean["statement"]

df_plot["perc_true"] = liar_clean["perc_true"]

df_plot["speaker"] = liar_clean["speaker"]

df_plot["date"] = liar_clean["date"]

# Step 5: Convert anomaly labels to string for discrete coloring

df_plot["anomaly"] = df_plot["anomaly"].map({1: "normal", -1: "anomaly"})

# Step 6: Interactive plot

fig = px.scatter(

df_plot,

x='x',

y='y',

color='anomaly',

hover_data=["statement", "perc_true", "speaker", "date"],

opacity=0.7,

title="Isolation Forest Anomaly Detection (UMAP projection)",

color_discrete_sequence=px.colors.qualitative.T10

)

fig.show()🎯 Local Outlier Factor

lof = LocalOutlierFactor(n_neighbors=20, contamination=0.05)

y_pred_lof = lof.fit_predict(X_isolation)

# Step 4: Create a DataFrame for plotting

df_plot = pd.DataFrame({

"x": X_umap[:, 0],

"y": X_umap[:, 1],

"anomaly": y_pred_lof

}, index=liar_clean.index)

df_plot["statement"] = liar_clean["statement"]

df_plot["perc_true"] = liar_clean["perc_true"]

df_plot["speaker"] = liar_clean["speaker"]

df_plot["date"] = liar_clean["date"]

# Step 5: Convert anomaly labels to string for discrete coloring

df_plot["anomaly"] = df_plot["anomaly"].map({1: "normal", -1: "anomaly"})

# Step 6: Interactive plot

fig = px.scatter(

df_plot,

x='x',

y='y',

color='anomaly',

hover_data=["statement", "perc_true", "speaker", "date"],

opacity=0.7,

title="LOF Anomaly Detection (UMAP projection)",

color_discrete_sequence=px.colors.qualitative.T10

)

fig.show()🧠 OneClassSVM

svm = OneClassSVM(kernel='rbf', gamma='scale', nu=0.05) # nu = approx fraction of anomalies

y_pred_svm = svm.fit_predict(X_isolation)

df_plot = pd.DataFrame({

"x": X_umap[:, 0],

"y": X_umap[:, 1],

"anomaly": y_pred_svm

}, index=liar_clean.index)

df_plot["statement"] = liar_clean["statement"]

df_plot["perc_true"] = liar_clean["perc_true"]

df_plot["speaker"] = liar_clean["speaker"]

df_plot["date"] = liar_clean["date"]

# Step 5: Convert anomaly labels to string for discrete coloring

df_plot["anomaly"] = df_plot["anomaly"].map({1: "normal", -1: "anomaly"})

# Step 6: Interactive plot

fig = px.scatter(

df_plot,

x='x',

y='y',

color='anomaly',

hover_data=["statement", "perc_true", "speaker", "date"],

opacity=0.7,

title="OneClassSVM Anomaly Detection (UMAP projection)",

color_discrete_sequence=px.colors.qualitative.T10

)

fig.show()Overlaying the results of Isolation Forest and OneClassSVM

We overlay the results of two methods (Isolation Forest and One-Class SVM) to explore areas of agreement or disagreement:

| Color | Meaning |

|---|---|

| Red | Detected by both |

| Orange | Isolation Forest only |

| Purple | One-Class SVM only |

| Light gray | Neither |

# Step 2: Create overlay DataFrame

overlay_df = pd.DataFrame({

"x": X_umap[:, 0],

"y": X_umap[:, 1],

"isolation": y_pred_isolation, # Isolation Forest predictions

"ocsvm": y_pred_svm # One-Class SVM predictions

}, index=liar_clean.index)

# Map labels to binary (1 = normal, -1 = anomaly)

overlay_df["status"] = overlay_df.apply(

lambda row: "both anomaly" if row["isolation"] == -1 and row["ocsvm"] == -1

else "isolation forest only" if row["isolation"] == -1

else "one class SVM only" if row["ocsvm"] == -1

else "normal",

axis=1

)

# Add metadata

overlay_df["statement"] = liar_clean["statement"]

overlay_df["perc_true"] = liar_clean["perc_true"]

overlay_df["speaker"] = liar_clean["speaker"]

overlay_df["date"] = liar_clean["date"]

# Step 3: Plot with Plotly

fig = px.scatter(

overlay_df,

x='x',

y='y',

color='status',

hover_data=["statement", "perc_true", "speaker", "date"],

opacity=0.7,

title="Anomaly Detection Overlay: Isolation Forest vs One-Class SVM",

color_discrete_map={

"both anomaly": "red",

"isolation forest only": "orange",

"one class SVM only": "purple",

"normal": "lightgray"

}

)

fig.show()🗳️ Consensus Anomaly Detection

Since each method captures different types of outliers, we apply a voting strategy: - Each method outputs a binary prediction (anomaly or not). - We tally how many methods voted for each point being an anomaly: - 1 method → Weak anomaly - 2 methods → Moderate anomaly - 3 methods → Strong consensus

This gives a graded view of anomalousness and reduces reliance on any single method.

✅ This consensus approach mirrors our earlier strategy with clustering — acknowledging ambiguity and uncertainty by aggregating multiple signals.

df_anomaly_votes = pd.DataFrame(index=liar_clean.index)

df_anomaly_votes["iforest"] = y_pred_isolation # From earlier

df_anomaly_votes["svm"] = y_pred_svm

df_anomaly_votes["lof"] = y_pred_lof

# Convert to binary (1 = anomaly)

binary = lambda x: 1 if x == -1 else 0

df_anomaly_votes = df_anomaly_votes.applymap(binary)

# Count how many methods flagged as anomaly

df_anomaly_votes["votes"] = df_anomaly_votes.sum(axis=1)

# Inspect most agreed-upon anomalies

df_strong_outliers = df_anomaly_votes[df_anomaly_votes["votes"] >= 2] # Step 1: Combine anomaly labels from all methods

df_votes = pd.DataFrame(index=liar_clean.index)

df_votes["IsolationForest"] = y_pred_isolation

df_votes["OneClassSVM"] = y_pred_svm

df_votes["LOF"] = y_pred_lof

# Step 2: Convert to binary (1 = anomaly, 0 = normal)

df_votes = df_votes.applymap(lambda x: 1 if x == -1 else 0)

# Step 3: Count how many methods flagged each point as an anomaly

df_votes["consensus"] = df_votes.sum(axis=1)

# Step 4: Create plot DataFrame with UMAP coordinates

df_consensus_plot = pd.DataFrame({

"x": X_umap[:, 0],

"y": X_umap[:, 1],

"consensus": df_votes["consensus"],

"statement": liar_clean["statement"],

"perc_true": liar_clean["perc_true"],

"speaker": liar_clean["speaker"],

"date": liar_clean["date"]

})

# Step 5: Map consensus values to categories for color

def label_consensus(v):

if v == 0: