🛣️ Week 10 - Lab Roadmap

Introduction to Natural Language Processing in Python

⚙️ Setup

Downloading the student solutions

Click the button below to download the student notebook.

Loading libraries

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import spacy

import gensim

import shap

from gensim import corpora

from gensim.models.ldamodel import LdaModel

from gensim.models.coherencemodel import CoherenceModel

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import confusion_matrix, f1_score

from sklearn.model_selection import train_test_split

from wordcloud import WordCloud

from lets_plot import *

LetsPlot.setup_html()

# Load spaCy stopwords

nlp = spacy.load("en_core_web_sm")

stopwords = nlp.Defaults.stop_words

Downloading the data

Click the button below to download the data set.

Working with spaCy and gensim

You should install spaCy, gensim and shap using conda, e.g.:

conda install spacy gensim shap

If you are having trouble installing the above packages, it may be that you are using a later version of Python that is not currently compatible with them.

If you are using Python 3.12, you should also install the spaCy English model using

conda install -c conda-forge spacy-model-en_core_web_sm

If you want to create a separate virtual environment in conda with an earlier version of Python, you can use the following commands

conda create --name myenv python=3.11

conda activate myenv

👉 NOTE: For the second option, you will need to reinstall all of the libraries used in this lab.

🥅 Learning objectives

- Develop new data structures needed for NLP (e.g. document feature matrix, corpus)

- Visualise text output using wordclouds and keyness plots

- Perform feature selection to aid in classifying sentiment

- Create and validate topic models using Latent Dirichlet Allocation

Introducing a new data set (10 minutes)

We’ll begin by loading data on 2,563 statements on economic matters by the European Central Bank (ECB), available here. Statements by the ECB offer valuable insights into the economic policies and decisions that shape the financial landscape of the European Union. The ECB plays a critical role in regulating monetary policy, controlling inflation, managing interest rates, and ensuring economic stability across member states. Understanding how the ECB communicates its strategies and decisions helps students grasp broader economic concepts like central banking, economic growth, and market reactions. Additionally, ECB statements can reveal the political dynamics at play within the European Union, highlighting how economic policies influence social issues such as unemployment, inequality, and public welfare.

There are two variables:

- text: Economic statements.

- sentiment: Whether the statement is positive (1) or negative (0).

# Load data

# Print the data frame

👉 NOTE: As you can see, we have a series of statements that convey information about a specific economic topic, each with an attached sentiment. As it stands, the text data is not very useful, but with a few Python commands it can be transformed into useful features.

Creating our first document feature matrix (20 minutes)

A document feature matrix (DFM) has the same number of rows as the text column used to create it, and a given number of features consisting of n-grams (sequences of n words separated by white space) specified by the user. The code below turns the text column into a document feature matrix.

🎯 Action Point: Try your best to understand the code - don’t worry if you don’t, we will go over the code together.

# Preprocess function: tokenize, remove punctuation, numbers, and stopwords

def preprocess_text(text):

    doc = nlp(text.lower())  # Lowercase text

    tokens = [

        token.lemma_ for token in doc

        if not token.is_punct and not token.is_digit and not token.is_space

        and token.text.lower() not in stopwords

    ]

    return " ".join(tokens)  # Return cleaned text as a string

# Apply preprocessing to each statement

# Create document-feature matrix (DFM) using the fit_transform method of CountVectorizer

# Convert to a DataFrame for inspection

👉 NOTE:

- We create a count of each token in a given document, specifying that terms should occur in at least 10 documents in order to be included in the final DFM.

- We have used a pre-selected list of stopwords; however, you may need to employ a more nuanced list depending on your topic. For example, gendered pronouns are included in the stopwords list, which could make sense in this case but may not if you are studying topics relating to gender.

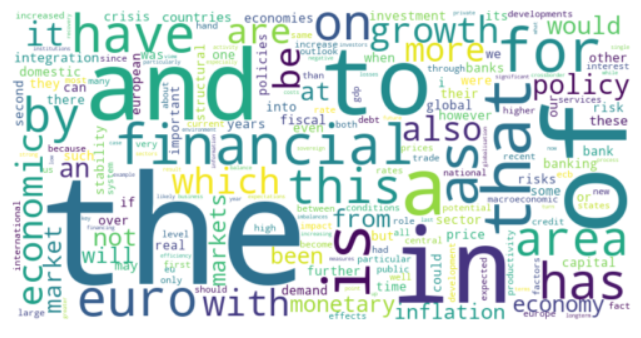

Some visualisations

Here is a wordcloud, which visualises word frequencies by size in an aesthetically appealing format.

# Create a corpus

corpus = statements["text"].to_list()

# Collapse the corpus into a single list of words

words = [word for text in corpus for word in text.split()]

# Create value counts

word_freq = pd.Series(words).value_counts()

# Generate word cloud

wordcloud = WordCloud(width=800, height=400, background_color='white').generate_from_frequencies(word_freq)

# Display the word cloud

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis("off")

plt.show()

🗣️ CLASSROOM DISCUSSION: What went wrong here? Edit the code to get more appropriate output.

# Create a corpus

corpus = statements["text_cleaned"].to_list()

# Collapse the corpus into a single list of words

words = [word for text in corpus for word in text.split()]

# Create value counts

word_freq = pd.Series(words).value_counts()

# Generate word cloud

wordcloud = WordCloud(width=800, height=400, background_color='white').generate_from_frequencies(word_freq)

# Display the word cloud

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis("off")

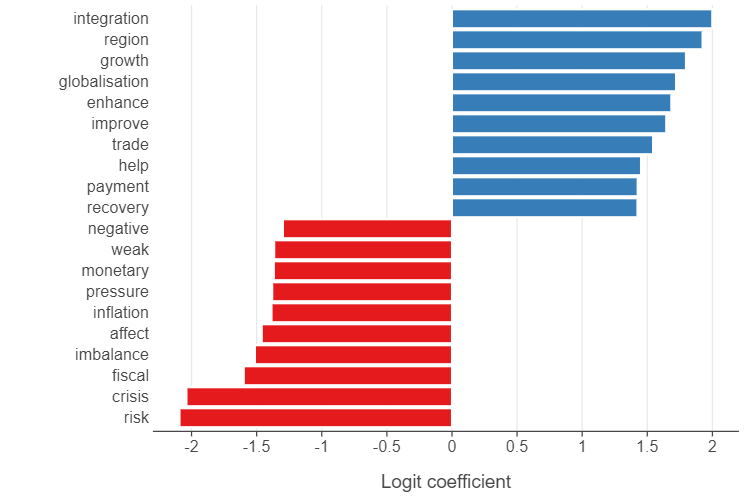

plt.show()To understand which words are most indicative of positive and negative sentiment, we can use dfm to predict our sentiment column using logistic regression. We can then plot the top 10 largest absolute coefficients for the data.

# Create our target using the sentiment column

y = statements["sentiment"]

# Train a logistic regression model

logit_model = LogisticRegression()

logit_model.fit(dfm, y)

# Get word importance (coefficients)

feature_names = np.array(vectorizer.get_feature_names_out())

coefficients = logit_model.coef_.flatten()

# Create a data frame for plotting purposes

coef_df = (pd.DataFrame(

{

"feature": feature_names,

"coefficient": coefficients,

"abs_coefficient": np.abs(coefficients),

"sign": np.where(coefficients > 0, "positive", "negative")

}

)

.groupby("sign")

[["feature","coefficient","sign","abs_coefficient"]]

.apply(lambda x: x.nlargest(10, "abs_coefficient"))

.sort_values("coefficient", ascending=True)

)

# Plot results

(

ggplot(coef_df, aes("coefficient","feature",fill="sign")) +

geom_bar(stat="identity") +

theme(legend_position="none",

panel_grid_major_y = element_blank()) +

labs(x = "Logit coefficient", y = "")

)

🗣️ CLASSROOM DISCUSSION: Which words are most indicative of positive / negative sentiment?

A supervised learning application of NLP - predicting ECB sentiment (30 minutes)

Let’s print the first 5 rows of dfm.

# Code here

We have 712 features that can be used for building a classification model.

👉 NOTE: In doing this, we are using a Bag of Words (BoW) model - whereby a text (such as a sentence or document) is treated as an unordered collection (or “bag”) of individual words, ignoring grammar and word order. Each word in the text is represented by its frequency (or presence/absence) in the document. This means that the text is converted into a vector where each dimension corresponds to a unique word in the vocabulary (a list of all words across the dataset). The value in each dimension represents how often a word appears in the document. The BoW model assumes that the context or syntax (word order, grammatical structure) does not matter. It only focuses on which words appear and how often.
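As a minimal sketch of the idea in the note above, the following pure-Python snippet turns two made-up sentences into count vectors over a small, hand-picked vocabulary. The function bag_of_words and the vocabulary are hypothetical and only serve to show that word order is discarded.

```python
from collections import Counter

def bag_of_words(text, vocabulary):
    """Return the count of each vocabulary word in the text, ignoring word order."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]

# Hand-picked vocabulary (illustrative only)
vocabulary = ["growth", "inflation", "rates", "rise"]

vec_a = bag_of_words("inflation rates rise", vocabulary)
vec_b = bag_of_words("rise rates inflation", vocabulary)

print(vec_a)
print(vec_b)
```

Both sentences map to the same vector, which is exactly the information a BoW model throws away.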

Next, let’s create a train / test split, making sure to set a random seed and stratify the split by the outcome. We will use dfm as comprising the totality of the features.
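For reference, a stratified split can be sketched on made-up data as follows. The arrays X_toy and y_toy, the 80/20 split and the seed are assumptions for illustration; in the lab you would pass dfm and the sentiment column instead.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Made-up features and a balanced binary outcome (illustrative only)
X_toy = np.arange(40).reshape(20, 2)
y_toy = np.array([0] * 10 + [1] * 10)

# stratify keeps the class proportions equal in the train and test sets
X_tr, X_te, y_tr, y_te = train_test_split(
    X_toy, y_toy, test_size=0.2, random_state=123, stratify=y_toy
)

print(len(X_tr), len(X_te))
print(y_tr.mean(), y_te.mean())
```

Because the split is stratified, the share of positive cases is the same in both sets.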

# Code here

We can now specify a classification algorithm. We will opt for a Lasso model here, using an instantiation of LogisticRegression.
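One way to obtain a lasso-style classifier from LogisticRegression is to request an L1 penalty with a solver that supports it. The sketch below does this on simulated data; the penalty strength C=0.1, the liblinear solver and the names X_demo, y_demo and lasso_demo are assumptions for illustration, not the lab's settings.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Simulated data: only the first of ten features carries signal
rng = np.random.default_rng(123)
X_demo = rng.normal(size=(200, 10))
y_demo = (X_demo[:, 0] > 0).astype(int)

# L1 penalty = lasso-style shrinkage; a smaller C means a stronger penalty
lasso_demo = LogisticRegression(
    penalty="l1", solver="liblinear", C=0.1, random_state=123
)
lasso_demo.fit(X_demo, y_demo)

# Count how many coefficients were shrunk exactly to zero
n_zero = int(np.sum(lasso_demo.coef_ == 0))
print(lasso_demo.coef_.round(2))
print(n_zero)
```

The L1 penalty can set uninformative coefficients exactly to zero, which is why a lasso model doubles as a feature selection tool.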

# Instantiate a lasso classification model

# Fit the model to the training data

Next, we can build a data frame of the top 10 absolute lasso coefficients and plot the results.

# Create a data frame of the top 20 features

# Plot the output

🗣️ CLASSROOM DISCUSSION:

Which features are most important?

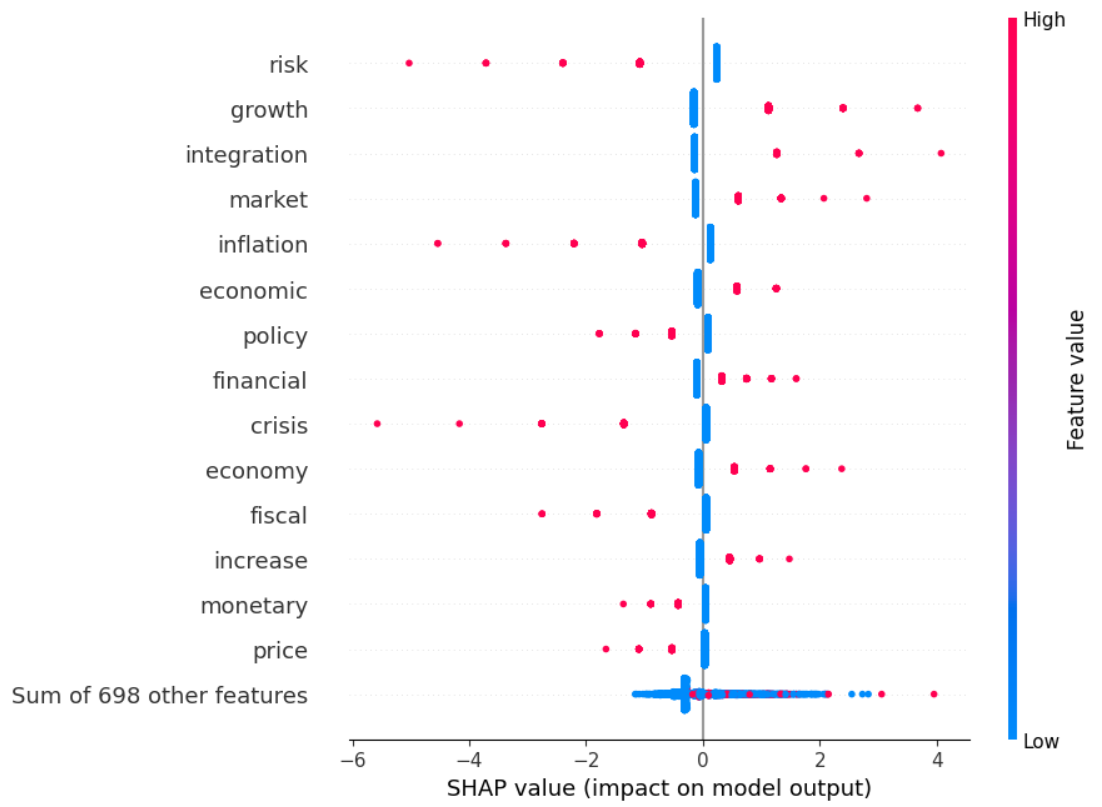

We can also use Shapley values, both for each observation and in the aggregate.

ℹ️ Information:

Shapley values have a game-theoretical underpinning. The basic idea is that we want to assign “credit” to a given feature for its contribution to a model's prediction when compared across all possible sets (or “coalitions”) of features. Instead of looking at the global contribution of each feature, as with variable importance, we compute the marginal contribution feature j makes to the prediction for each observation, relative to each potential combination of features had the jth feature not been present. The Shapley value of feature j for that observation is then the weighted average of these marginal contributions across all coalitions; averaging the absolute Shapley values across observations gives an aggregate measure of importance. Shapley values can be calculated for all kinds of model, which makes them highly useful quantities of interest.

For a more in-depth look at Shapley values, check out “Interpretable Machine Learning” from Christoph Molnar (click here for the relevant section).
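To illustrate the mechanics, here is a minimal pure-Python sketch that computes exact Shapley values for a single observation of a made-up three-feature model. It averages each feature's marginal contribution over every ordering in which features can be "switched on". Treating an absent feature as taking a baseline value is an assumption of this sketch, and the functions shapley_values and toy_model are hypothetical; the shap library uses more efficient, model-specific algorithms.

```python
from itertools import permutations

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one observation, averaging over all feature orderings."""
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)       # start with every feature "absent"
        previous = predict(current)
        for j in order:
            current[j] = x[j]          # switch feature j on
            new = predict(current)
            totals[j] += new - previous  # marginal contribution of feature j
            previous = new
    return [total / len(orderings) for total in totals]

def toy_model(features):
    """Made-up model: one additive term and one interaction term."""
    a, b, c = features
    return 2 * a + b * c

phi = shapley_values(toy_model, x=[1, 1, 1], baseline=[0, 0, 0])
print(phi)
```

The additive feature receives its full effect, while the two interacting features split their joint effect equally. The values sum to the difference between the prediction for the observation and the prediction for the baseline.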

We can calculate Shapley values easily in Python.

# Instantiate a Shapley values calculator, using the model and the training set features

explainer = shap.Explainer(lasso_classifier, X_train)

# Apply the calculator to the training set features

shap_values = explainer(X_train)

# Create a "bee swarm" plot

shap.plots.beeswarm(shap_values, max_display=15)

👉 NOTE:

The “beeswarm” plot displays SHAP values per row of data.

🗣️ CLASSROOM DISCUSSION:

Which features are most important? Which make the most sense?

Let’s apply this model to the test set. Present a confusion matrix and evaluation metric of your choice.
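As a minimal sketch on made-up labels (not the lab's data), a confusion matrix and an F1 score can be computed like this. The arrays y_true_toy and y_pred_toy are hypothetical; in the lab, the predictions would come from calling the fitted model's predict method on the test features.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, f1_score

# Made-up true labels and predicted labels (illustrative only)
y_true_toy = np.array([1, 1, 1, 0, 0, 0, 1, 0])
y_pred_toy = np.array([1, 0, 1, 0, 1, 0, 1, 0])

# Rows are true classes, columns are predicted classes
cm_toy = confusion_matrix(y_true_toy, y_pred_toy)

# F1 is the harmonic mean of precision and recall for the positive class
f1_toy = f1_score(y_true_toy, y_pred_toy)

print(cm_toy)
print(f1_toy)
```

Here there are 3 true negatives, 1 false positive, 1 false negative and 3 true positives, giving an F1 score of 0.75.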

# Apply class predictions to the test set

# Confusion matrix

# Display confusion matrix

# Print the evaluation metric of your choice

An unsupervised application of NLP - Finding ECB topics using Latent Dirichlet Allocation (30 minutes)

Topic models are often used in the social sciences to understand text data. These algorithms cluster words that are frequently used together across different documents into distinct topics (the number of which is set by the user). Each word is then ranked by how indicative it is of a given topic using some kind of score, of which there are many.

We will pick 2 topics to begin with. We will need to create 2 new data structures:

- A corpus dictionary, which assigns a unique token ID to all tokens across the corpus.

- A count of the number of times a token in the corpus dictionary appears in a given document.
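To show what these two structures contain, here is a pure-Python sketch on two made-up tokenised documents. It mimics the idea only: the names toy_tokenised, token_to_id and toy_counts are hypothetical, and gensim's own implementation may assign token IDs in a different order.

```python
from collections import Counter

# Two made-up tokenised documents (illustrative only)
toy_tokenised = [
    ["inflation", "rise", "inflation"],
    ["growth", "rise"],
]

# Corpus dictionary: every distinct token gets a unique integer ID
token_to_id = {}
for document in toy_tokenised:
    for token in document:
        if token not in token_to_id:
            token_to_id[token] = len(token_to_id)

# Corpus count: for each document, a list of (token ID, count) tuples
toy_counts = [
    sorted((token_to_id[token], count) for token, count in Counter(document).items())
    for document in toy_tokenised
]

print(token_to_id)
print(toy_counts)
```

Each document is reduced to pairs of token ID and frequency, which is the input format LDA works with.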

Let’s create a corpus, which consists of a collection of documents.

# Create a list of statements

corpus = statements["text"].to_list()

# Print the first 10 entries in the corpus

corpus[0:10]

We can then tokenise our corpus, meaning (in this case) that we can break our cleaned documents into distinct words that are not included in the stopwords list.

# Create a tokenised corpus using a nested list comprehension

corpus_tokenised = [

[word for word in document.split() if word not in stopwords]

for document in corpus

]

# Print the first tokenised document

corpus_tokenised[0]

We can now create the corpus dictionary.

# Create a corpus dictionary

corpus_dictionary = corpora.Dictionary(corpus_tokenised)

# Print the dictionary

print(corpus_dictionary)

Next comes the corpus count, which is a list of tuples for each document: the first element of each tuple is the token ID and the second is the count of that token in that document.

# Create a statement bag of words (bow)

corpus_count = [corpus_dictionary.doc2bow(text) for text in corpus_tokenised]

# Print the count for the first two documents

corpus_count[0:2]

We can now compile our LDA model.

👉 NOTE: LDA is one of many methods that can be used for topic modelling and text clustering. For the sake of time, we have focused on LDA but nevertheless encourage you to take more in-depth courses on NLP if you find it interesting and / or useful.

# Train an LDA model

lda_model = LdaModel(corpus=corpus_count,

                     id2word=corpus_dictionary,

                     num_topics=2,

                     random_state=123,

                     passes=10,

                     iterations=50)

After having compiled our 2-topic model, we can now try to understand what each topic signifies “under the hood”. To do this, we can use a nested list comprehension where we employ the get_topic_terms method of lda_model to create a list of the top 7 words for each topic.

# List comprehension to isolate topics

top_words = [

[corpus_dictionary[word_id] for word_id, _ in lda_model.get_topic_terms(topic_id, topn=7)]

for topic_id in range(lda_model.num_topics)

]

# Print results

for i, words in enumerate(top_words):

    print(f"Topic {i+1}: {', '.join(words)}")

🗣️ CLASSROOM DISCUSSION:

Can we intuitively interpret the topics?

👉 NOTE:

- The top 7 words are decided upon as the LDA model assigns a probability of each word appearing in a given topic. The words that have the highest probabilities are therefore the most important.

- We use a trusty throwaway variable (_) in this context as get_topic_terms returns tuples containing the word ID and the word probability. Since we do not want the word probability, we tell Python to ignore it.
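The unpacking pattern in the second point can be sketched without a fitted model. The list toy_topic_terms stands in for the output of get_topic_terms, and both it and toy_id2word are made up for illustration.

```python
# Made-up (word ID, probability) tuples, sorted from most to least probable
toy_topic_terms = [(12, 0.08), (3, 0.05), (7, 0.02)]

# Made-up mapping from word ID to word
toy_id2word = {3: "rate", 7: "bank", 12: "inflation"}

# Unpack each tuple, keeping the ID and discarding the probability with _
toy_top_words = [toy_id2word[word_id] for word_id, _ in toy_topic_terms]

print(toy_top_words)
```

If the probabilities were needed, for example to plot them, the underscore could simply be replaced with a named variable.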

How many topics?

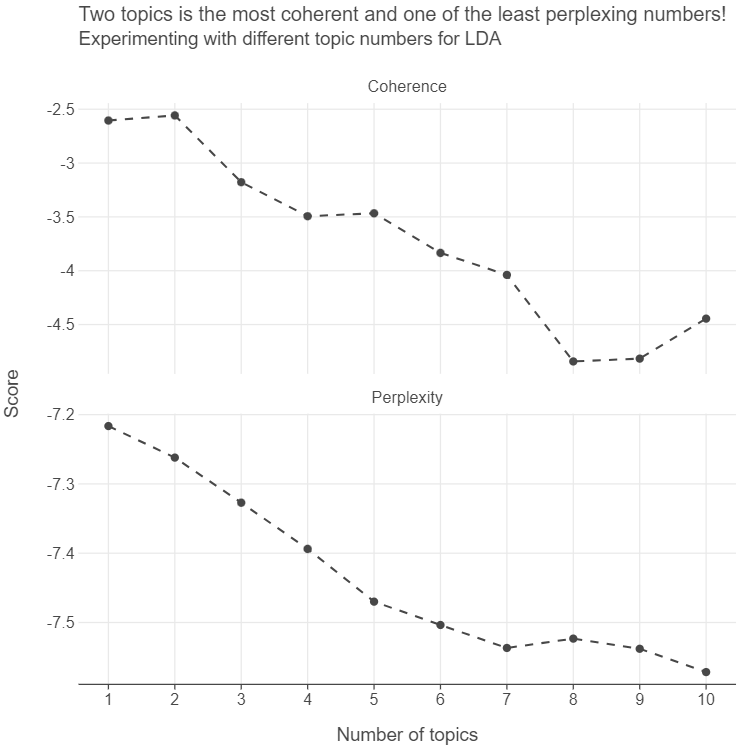

How did we arrive at only 2 topics? Why not, say, 10? We can calculate performance metrics such as semantic coherence and perplexity:

- Semantic coherence: a measure of how commonly the most probable words in a topic co-occur. Note that one limitation of semantic coherence is that it tends to be highest when the number of topics is low.

- Perplexity: a measure of how well a model predicts a set of documents.
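One detail worth knowing when reading perplexity scores from gensim: the log_perplexity method of LdaModel returns a per-word likelihood bound (a negative number, where values closer to zero are better) rather than the perplexity itself. The conversion is perplexity = 2 ** (-bound). The helper below, bound_to_perplexity, is a hypothetical name used only to show the arithmetic.

```python
def bound_to_perplexity(per_word_bound):
    """Convert a per-word likelihood bound (log base 2) into a perplexity."""
    return 2 ** (-per_word_bound)

# Made-up bounds: a bound closer to zero gives a lower (better) perplexity
example_bounds = [-8.0, -7.0]
example_perplexities = [bound_to_perplexity(bound) for bound in example_bounds]

print(example_perplexities)
```

A higher bound therefore corresponds to a lower perplexity, so the direction of "better" flips depending on which of the two quantities is plotted.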

We can set up a for loop over a range of topic numbers like so.

# Instantiate empty lists for perplexity and coherence scores

perplexities = []

coherences = []

# Create a range of topic numbers from 1 to 10

topic_numbers = range(1,11)

for topic in topic_numbers:

    # Train LDA model

    lda_model = LdaModel(corpus=corpus_count,

                         id2word=corpus_dictionary,

                         num_topics=topic,

                         random_state=123,

                         passes=10,

                         iterations=50)

    # Calculate log perplexity (log_perplexity returns the per-word likelihood bound; perplexity is 2 ** (-bound))

    perplexity = lda_model.log_perplexity(corpus_count)

    # Calculate coherence

    coherence = CoherenceModel(model=lda_model, corpus=corpus_count, coherence='u_mass').get_coherence()

    # Append values to list

    perplexities.append(perplexity)

    coherences.append(coherence)

After this, we can create a data frame of the results by passing a dictionary to pd.DataFrame. All we then need to do is stack our scores on top of one another, for plotting purposes.

# Create a data frame of results

tm_df = pd.DataFrame(

{

"topics": topic_numbers,

"Coherence": coherences,

"Perplexity": perplexities

}

)

# Pivot the data to a long data frame

tm_melted_df = pd.melt(tm_df, id_vars="topics", var_name = "metric", value_name= "score")

# Plot the results

(

ggplot(tm_melted_df, aes("topics", "score")) +

geom_point() +

geom_line(linetype="dashed") +

facet_wrap(facets="metric", scales="free_y") +

labs(x = "Number of topics", y = "Score",

title = "Two topics is the most coherent and one of the least perplexing numbers!",

subtitle = "Experimenting with different topic numbers for LDA\n")

)