🧐 Comments on the W05 Summative and a model solution

2023/24 Autumn Term

This page contains an analysis of your submissions for the ✏️ W05 Summative.

📊 Submission statistics

| Total | Accepted Assignment | Not pushed to GitHub (empty repo) | % of enrolled students who submitted |
|---|---|---|---|
| 67 | 65 | 3 | 92.54% |

I hope the four of you who didn’t submit are preparing yourselves for the next summative! 🤞

Score distribution

🚧 WIP 🚧

- We have not yet finished marking all submissions

- I will update this part of the page later

📝 Solution

Sadly, I didn’t have that much time to write a very comprehensive ‘statistical analysis’ of your notebooks, but I can share the most common mistakes I found in your submissions.

The majority of submissions I inspected managed to solve the problem of scraping the data from the website, especially Part 1. Not everyone managed to solve Part 2. I will update this page later with more stats on completion of the assignment.

There was one submission that really stood out to me when Kevin and I were anonymising submissions to give to the markers. I will share it here as a model solution. The notebook is so beautifully organised and all the steps are so clearly explained that I think it’s worth sharing it with you.

Model solution

Click on the button below to download the model solution.

I added a few comments throughout (marked with (Jon comments)).

📌 Common mistakes

I cannot yet comment on mistakes you made in the code itself, as we haven’t finished marking all submissions, but here is a list of the most common mistakes related to the organisation of the notebook and to not following the instructions in the assignment:

CM1: CSV files were not in the data folder

Many forgot to move the CSV files to a data folder as instructed in the assignment!

Why did we ask you to do that?

- It is a way to reinforce the notion of paths, files, directories and the Terminal.

- You would have to create the `./data/` folder yourself, using `mkdir` or simply your OS’s file explorer.

- Placing the CSV files in a `data` folder makes it easier to find them later on and doesn’t ‘pollute’ your working directory.
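
If you prefer to stay inside the notebook, the folder can also be created from Python. The following is a minimal sketch, not the expected solution: the file name `example.csv` and the rows are made up for illustration and are not the assignment's actual data.

```python
import csv
from pathlib import Path

# Relative to the current working directory (normally where the notebook lives)
data_dir = Path("data")

# Similar to `mkdir -p data`: no error is raised if the folder already exists
data_dir.mkdir(parents=True, exist_ok=True)

# Hypothetical file name and rows, for illustration only
csv_path = data_dir / "example.csv"
rows = [{"id": 1, "name": "first"}, {"id": 2, "name": "second"}]

# newline="" is what the csv module recommends when opening files for writing
with csv_path.open("w", newline="", encoding="utf-8") as f:
    writer = csv.DictWriter(f, fieldnames=["id", "name"])
    writer.writeheader()
    writer.writerows(rows)
```

Running a cell like this at the top of a notebook also means that anyone who clones the repository gets the `data` folder without any manual step.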

Markers were instructed to:

- If the CSV files are not in a `data/` folder, deduct 10 marks from Criterion 02

CM2: Not using relative paths

The instruction to ‘not identify yourself’ had a double purpose:

- To treat this as an anonymous submission, so that markers would not be biased by knowing who the student is.

- To make sure you were using relative paths to save/load the CSV files! ⭐️

The second purpose is super relevant, given how important it is to understand paths, files and directories, not only in the Terminal but also in Python.

Markers were instructed to:

- If the notebook does not use relative paths and/or the student identified themselves when specifying the path to files, deduct 10 marks from Criterion 02.
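
To make the difference concrete, here is a small sketch comparing the two kinds of path. Both paths are hypothetical. `PurePosixPath` is used only so that the example behaves the same on every operating system; in a real notebook you would normally use `Path`.

```python
from pathlib import PurePosixPath

# Hypothetical absolute path: it only works on one machine and it reveals a username
absolute = PurePosixPath("/Users/your_name/Desktop/assignment/data/example.csv")

# Relative path: resolved from the working directory, so it works wherever the repo is cloned
relative = PurePosixPath("data") / "example.csv"

print(absolute.is_absolute())  # True
print(relative.is_absolute())  # False
print(relative)                # data/example.csv
```

A path that starts at the root of your own file system breaks as soon as someone else runs the notebook, which is why the relative form is the one to save and load CSV files with.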

CM3: Poor Markdown vs Code separation

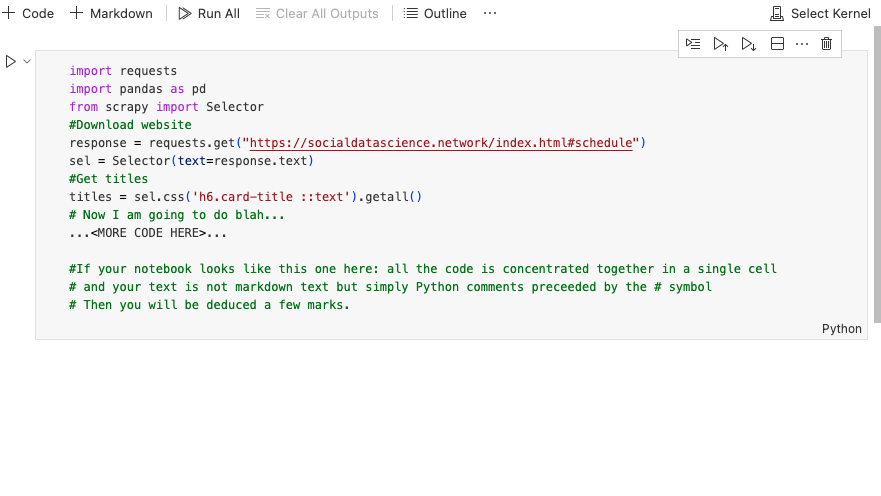

The most common formatting and organisation issue I encountered was the lack of separation between Markdown and Code cells, as shown in the following screenshot:

Some submissions had code and markdown cells, but there was no clear separation between sections. This is a problem because it makes it harder for the reader to understand the structure of the notebook.

There is no universally agreed way to organise a Jupyter Notebook, but you should aim for clarity and consistency. Take a look at the model solution I shared above to see an example of a well organised notebook.

Markers were instructed to:

- Deduct 5 marks from Criterion 1 if there is no good separation between Markdown and code cells.

- Deduct 3-5 marks from Criterion 1 if the submission has Markdown headers but barely any accompanying text.

- Deduct 5-10 marks from Criterion 1 if documentation is very poor. That is, if the notebook is not self-explanatory and the reader has to guess what the student is doing.

CM4: Poor acknowledgement of generative AI tools

Under our 🤖 Generative AI Policy, we ask you to disclose the use of any generative AI tools in assignments. This is so we adhere to LSE’s current regulation on generative AI and mitigate concerns about academic misconduct.

However, many of those who acknowledged the use of generative AI tools did not do so in a clear way and that will result in a deduction of marks.

Our markers are also instructed to spot any use of generative AI tools that was not acknowledged. Sometimes it is clear from context (for example, the use of BeautifulSoup, a package that we did not explore in this course, instead of requests). We are collecting such cases and will be contacting students to ask for clarification.

Markers were instructed to:

- If the genAI (ChatGPT) acknowledgement was super vague (‘I used ChatGPT in this assignment’) and therefore not in line with our policy, deduct 5 marks from Criterion 02.

- If the solution looks like a puzzle, the sort of thing that would have been concocted with the help of ChatGPT, and it’s not clear whether the student understands what their code does, flag it here for further analysis by putting this submission as ‘In Review’ on Moodle. We’ll discuss those cases together.

🎉 Good surprises

Now, let’s talk about the most common good surprises I found in your submissions!

By default, you would have earned 0/30 points in Criterion 3 even if you did everything correctly as instructed. (In UK higher education, especially here at LSE, 70% is typically the highest mark you can get in an assignment. I mentioned before how I don’t like this and that’s why we had this ‘extra criterion’.)

Markers were instructed to:

The reasoning here is different. This criterion exists to highlight distinction cases, so by default, if the student just did what was asked of them, they shouldn’t earn any marks here. However, we can reward ‘best practices’ as follows:

- If they used custom functions, give them +3 marks. (not strictly required in this assignment, but it’s a good practice)

- If they used list comprehensions whenever possible, give them +3 marks. (not strictly required in this assignment, but it’s a good practice)

- If they took extra care to make sure we could reproduce their code, by adding pip install or mkdir instructions, give them +3 marks. (great practice)

- Did they do some awesome analysis using other Python packages that we wouldn’t dream of asking them to use at this stage in the course and it was super nice? Give them +10 marks.
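
As an illustration of the first two practices, here is a minimal sketch that combines a custom function with a list comprehension. The function name `clean_text` and the sample strings are hypothetical and are not taken from any submission or from the model solution.

```python
def clean_text(raw):
    """Collapse runs of whitespace in a scraped string into single spaces."""
    return " ".join(raw.split())


# Hypothetical scraped values, for illustration only
raw_titles = ["  First   title\n", "Second title  ", "\tThird\ttitle"]

# List comprehension: one readable line instead of a for loop with .append()
titles = [clean_text(t) for t in raw_titles]

print(titles)  # ['First title', 'Second title', 'Third title']
```

Putting the cleaning logic in a function means it is written once and can be reused for every column that needs it, and the list comprehension keeps the transformation in a single, easy-to-read line.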