✍️ Problem Set 1 (20%): Online Groceries and the NOVA Classification

2025/26 Winter Term

This is your first graded summative assignment, worth 20% of your final mark. Like most things in DS205, Problem Set 1 is a collaborative project where you will work in pairs: one student builds a web scraper, then hands it off to another student who wraps it in an API. However, rest assured that you will be graded as an individual on both parts of the project.

| ⏳ | Scraper Deadline | Monday, 9 February 2026 at 8 pm UK time |
|---|---|---|
| ⏳ | Final Deadline | Thursday, 26 February 2026 (Reading Week) at 8 pm UK time |
| 💎 | Weight | 20% of final grade (10% scraper + 10% API) |

Start building!

After accepting, a personalised GitHub repository will be created for you. Grab the SSH URL from there.

Auditing students: you are allowed to accept the assignment and engage with it, but remember that we won’t give you any form of formal feedback on your work.

📝 The Driving Question

As you know, ultra-processed foods (NOVA group 4) are increasingly linked to health concerns, and understanding their prevalence in UK supermarkets is a genuine research question. So, suppose we wanted to build a data pipeline that could help answer:

What proportion of groceries and food items available on a UK supermarket website is ultra-processed (UPF)? And for any given UPF item, what is its closest item that is non-UPF?

If we were to build a data product to answer that question, we would need to collect the live data from the supermarket website, enrich it with information about the NOVA classification of the products (a concept you encountered in 🖥️ W01 with the Open Food Facts API), then make that data available to others through an API.

This is just the motivating question for those who want to challenge themselves!

👉 Note: Although I hope the driving question serves as a great, ambitious target, I don’t expect you to collect all the data available on the website or to match every product to its entry in the NOVA classification list. For assessment, I want to see you build, within the time frame we have, the infrastructure that would make answering the question possible, using the techniques explored in class and the common rules we are devising during lectures. The exercise should also get you thinking about the challenges of working with real-world data.

That is, in practice, you’ll build the infrastructure that would make answering it possible: a scraper to collect product data from at least one grocery category on the Waitrose website, and an API that enriches that data with NOVA classifications from Open Food Facts. Importantly, to find the products you must not use the Waitrose website’s search functionality or anything else that infringes its robots.txt file. Instead, start from the groceries category and ‘click’ through to the products as the website intends.
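One way to keep a crawler within these rules is to check each URL against robots.txt before following it. The sketch below uses Python's standard-library `urllib.robotparser`; the rules and URLs are illustrative assumptions, not Waitrose's actual robots.txt or URL scheme, so read the live file (for example with `set_url(...)` and `read()`) before relying on anything here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; NOT Waitrose's real robots.txt.
# In practice: rp.set_url("https://www.waitrose.com/robots.txt"); rp.read()
EXAMPLE_RULES = """
User-agent: *
Disallow: /ecom/shop/search
""".strip().splitlines()


def allowed(url: str, rules: list[str], agent: str = "*") -> bool:
    """Return True if `url` may be fetched under the given robots.txt rules."""
    rp = RobotFileParser()
    rp.parse(rules)
    return rp.can_fetch(agent, url)


print(allowed("https://www.waitrose.com/ecom/shop/browse/groceries", EXAMPLE_RULES))  # True
print(allowed("https://www.waitrose.com/ecom/shop/search?searchTerm=milk", EXAMPLE_RULES))  # False
```

If you use Scrapy, setting `ROBOTSTXT_OBEY = True` in `settings.py` makes the framework perform this check for every request, so a manual helper like this is mainly useful with Selenium.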

🤝 How the Collaboration Works

This assignment uses a collaborative handoff model that mirrors elements of professional software development of data products:

| Role | Student | Responsibility |
|---|---|---|
| Part A | Student A | Build a web scraper for Waitrose products |
| Part B | Student B | Wrap A’s data in a FastAPI system with NOVA enrichment |

You will be graded individually. Everyone works on both parts of the project, and each part is graded separately.

The quality of A’s work affects B’s experience, and this is intentional. When you start working as Student B, you will inherit code that someone else has written, and there might be elements of their implementation you do not like. This is part of the learning process: ideally, you will work with what you are given! Only if the code is seriously incomplete or buggy will we intervene and help you get started.

Timeline

| Week | Milestone |
|---|---|
| W02-W04 | You work as Student A, building your scraper on the main branch of your designated GitHub repository |
| W04 Lecture | Pairings announced |
| End of W04 | Jon provides formative feedback on the current state of your scrapers via GitHub Issues |
| W04-W06 | You invite another person to your repository as a collaborator, and ask them to do the same for you. You build an API for their scraper data in their repository and they, in turn, build an API for your scraper data in your repository. |
| W06 Reading Week | Deadline: Thursday 26 Feb, 8pm. You submit a Pull Request before the 8pm deadline, marking your submission as complete. |

You can still improve your scraper after W04

In Week 04, I will give you formative feedback on the current state of your scraper, with an indication of how that work would be graded, but that is not your final grade! You can still make improvements until the W06 deadline if you wish and get a better grade.

Note, however, that whoever inherits your code will have to work with it as it is, so make sure it is in a good state before the handoff, including good documentation and testing.

The Handoff Process

- You work on the `main` branch until W04
- Pairings are announced at the W04 lecture (I’ll make them randomly)
- You invite your assigned Student B as a collaborator to your repository
- Your assigned Student B creates a `feature/api` branch and works there
- Your assigned Student B submits a Pull Request to merge `feature/api` into `main`
- The PR is Student B’s deliverable, which we use to grade their API work

What if Student A’s code doesn’t work?

When building the API: You are expected to try to work with what you receive. If A’s documentation is unclear, ask them via GitHub comments on the repository. If A’s code has bugs, document what you found and how you worked around it.

If A’s scraper genuinely produces no usable data, contact Jon via Slack. In extreme cases, we may provide a template dataset so you can proceed with Part B.

🤔 Think about it: This is why documentation and testing matter. Make sure your scraper actually works before W04.

📋 Project Structure

Your repository MUST follow this structure:

    <your-github-repo>/
    ├── scraper/              # Student A's domain
    │   ├── README.md         # How to run, link to pre-scraped data
    │   └── ...               # Your scraper code (organisation up to you)
    ├── api/                  # Student B's domain
    │   ├── README.md         # How to run the API
    │   └── ...               # Your API code (organisation up to you)
    ├── data/
    │   ├── scraped/          # Student A's output (raw or cleaned, your choice)
    │   └── enriched/         # Student B's output (products + NOVA data)
    ├── environment.yml       # Shared conda environment (initial template provided)
    └── README.md             # Project overview (template provided)

You have freedom to organise files within the `scraper/`, `api/` and `data/` subfolders as you see fit, but the top-level structure must remain as shown.

📌 Essential Requirements

Let the driving question guide your decisions. The rubric tells you what we value. Here are the non-negotiable constraints:

Data Collection (Part A)

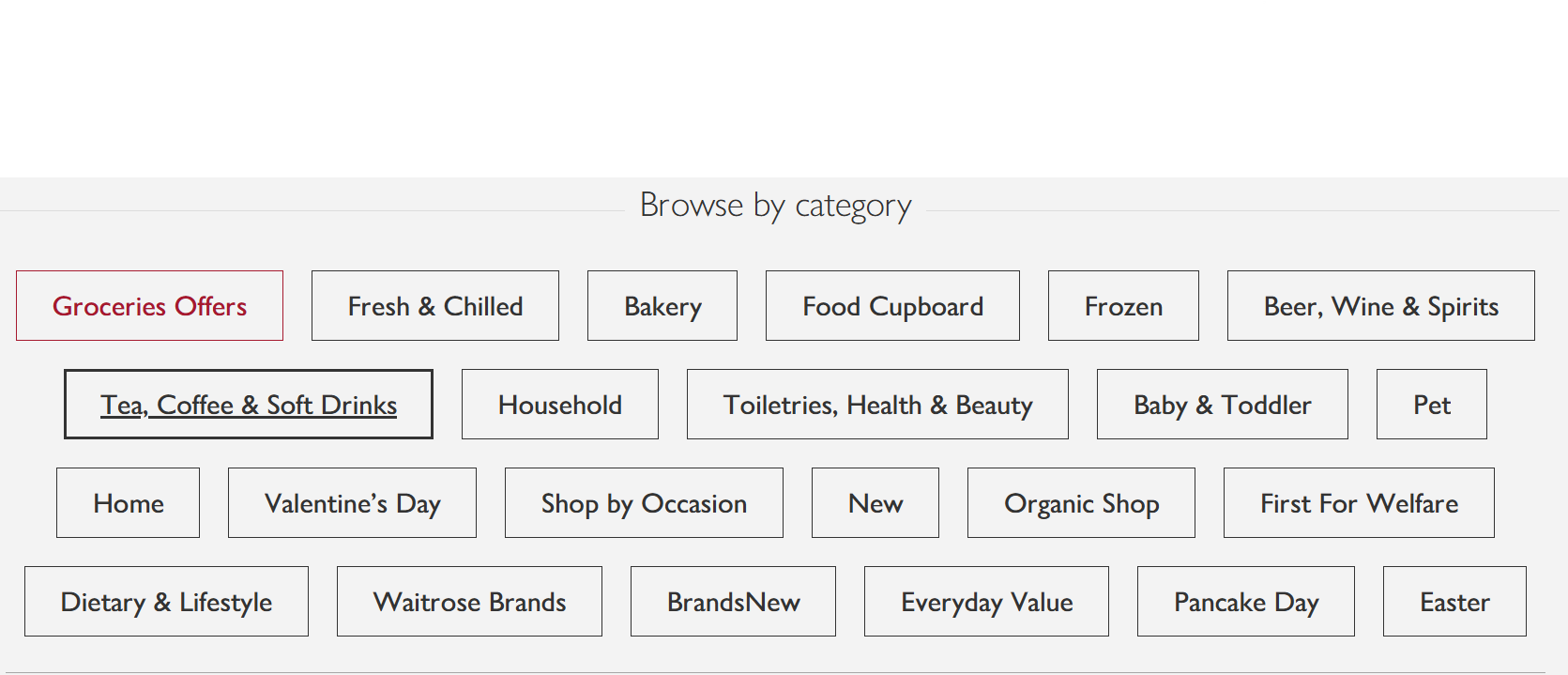

Figure 1. Waitrose categories (note that not all of them are food categories!).

- Scrape all food items from at least one food category, using Waitrose’s groceries page as the starting point.
- When going through the categories, navigate through them as the website intends. Do not use the search functionality or anything else that violates `robots.txt`.
- Handle duplicates thoughtfully (some products can appear in multiple categories).
- Save your output to `data/scraped/`. Feel free to organise it however makes sense for your approach (raw HTML, cleaned JSON, or both).
- Use Scrapy or Selenium (❌ BeautifulSoup is not permitted in this course).
- Justify your code architecture and data storage decisions in `scraper/README.md`.
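A minimal sketch of one way to handle duplicates, assuming each scraped record is a dict with hypothetical `id`, `name` and `category` keys: keep one record per product ID and collect every category it appeared under, rather than silently discarding that information.

```python
def deduplicate(records: list[dict]) -> list[dict]:
    """Keep one record per product id, collecting every category it appeared in."""
    products: dict[str, dict] = {}
    for rec in records:
        product = products.setdefault(
            rec["id"], {"id": rec["id"], "name": rec["name"], "categories": []}
        )
        if rec["category"] not in product["categories"]:
            product["categories"].append(rec["category"])
    return list(products.values())


# Hypothetical scraped records: product "123" was listed under two categories.
scraped = [
    {"id": "123", "name": "Semi-Skimmed Milk 2L", "category": "Dairy"},
    {"id": "123", "name": "Semi-Skimmed Milk 2L", "category": "Fresh & Chilled"},
    {"id": "456", "name": "Free Range Eggs x6", "category": "Dairy"},
]
print(deduplicate(scraped))
```

Keying on a stable product ID is usually safer than keying on the name, which may vary slightly between listings. Note that Scrapy's built-in duplicate filter works on requests, not on the items you yield.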

API Development (Part B)

Your FastAPI application must include the following endpoints:

| Endpoint | Returns |
|---|---|
| `GET /products` | Return all products available in the API (JSON) |
| `GET /products/{id}` | Return a single product with its NOVA classification (JSON) |
| `GET /products?nova_group=4` | Return products filtered by NOVA group (JSON) |

- Enrich products with NOVA data from Open Food Facts (pre-enrich rather than querying live per request)
- Save enriched data to `data/enriched/`
- Use Pydantic v2 for data models
- Work on the `feature/api` branch and submit a Pull Request as your deliverable
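Pre-enriching means the Open Food Facts lookups happen once, in a separate step, and the API only reads the saved result. A minimal sketch, assuming scraped products are stored as a JSON list of dicts with a `name` key; `lookup` is a hypothetical stand-in for whatever matching you implement, and the file names are placeholders.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Optional


def enrich(src: Path, dst: Path, lookup: Callable[[str], Optional[int]]) -> list[dict]:
    """Add a `nova_group` to every scraped product once and save the result to `dst`."""
    products = json.loads(src.read_text())
    for product in products:
        product["nova_group"] = lookup(product["name"])  # None marks a non-match
    dst.parent.mkdir(parents=True, exist_ok=True)
    dst.write_text(json.dumps(products, indent=2))
    return products


# Demo in a temporary folder; a dict's .get stands in for real Open Food Facts matching.
# In the project, src and dst would be files under data/scraped/ and data/enriched/.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "scraped.json")
    src.write_text(json.dumps([{"name": "Salted Crisps"}, {"name": "Mystery Item"}]))
    enriched = enrich(src, Path(tmp, "enriched", "products.json"), {"Salted Crisps": 4}.get)

print(enriched)
```

Because the API then only reads a file, its responses do not depend on Open Food Facts being reachable; re-run the enrichment step whenever the scraped data changes.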

For Both Parts

- Write code that is as short as possible while remaining clear

- Write documentation that helps others use your work

- Commit regularly with meaningful messages

💡 Tips and details

Scrapy or Selenium? Check whether product data appears in “View Page Source” or only after JavaScript runs. Document what you discover.
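The “View Page Source” check can also be scripted: fetch the HTML as the server sends it (no JavaScript runs) and test whether product names you can see in the browser are already there. A rough sketch using only the standard library; the User-Agent string is a placeholder and the offline example page is made up.

```python
import urllib.request


def fetch_raw_html(url: str) -> str:
    """HTML exactly as the server sends it; no JavaScript is executed."""
    request = urllib.request.Request(url, headers={"User-Agent": "ds205-coursework"})  # placeholder UA
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def in_page_source(html: str, visible_names: list[str]) -> bool:
    """True if every product name you saw in the browser is already in the raw HTML."""
    return all(name in html for name in visible_names)


# Offline example: a page whose product grid would be filled in later by JavaScript.
shell_page = '<html><body><div id="root"></div><script src="app.js"></script></body></html>'
print(in_page_source(shell_page, ["Semi-Skimmed Milk"]))  # False, so Selenium may be needed
```

If the names do appear, but only inside a `<script>` block of embedded JSON, Scrapy may still be enough: you can parse that JSON instead of the rendered HTML.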

Large data files? GitHub isn’t for large files. Upload to LSE OneDrive and include a sharing link in your README.

Open Food Facts matching is fuzzy. “Waitrose Essential Semi-Skimmed Milk 2L” might match to “Semi-skimmed milk” in Open Food Facts, or might not match at all. Handle non-matches gracefully.
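One simple, economical matching strategy is to normalise the Waitrose name and then take the closest Open Food Facts name above a similarity threshold, returning `None` otherwise. The sketch below uses the standard library's `difflib.get_close_matches` (whose default `cutoff` is 0.6); the noise pattern and candidate names are illustrative assumptions, and in practice the candidates would come from Open Food Facts search results or a downloaded dataset.

```python
import difflib
import re
from typing import Optional

# Illustrative noise to strip from Waitrose names: brand words and pack sizes.
NOISE = re.compile(r"\b(?:waitrose|essential|\d+(?:\.\d+)?\s?(?:kg|g|ml|cl|l)|x\d+)\b")


def normalise(name: str) -> str:
    """Lowercase, remove brand words and pack sizes, collapse whitespace."""
    return " ".join(NOISE.sub(" ", name.lower()).split())


def best_match(name: str, off_names: list[str], cutoff: float = 0.6) -> Optional[str]:
    """Closest Open Food Facts name, or None if nothing is similar enough."""
    by_normalised = {normalise(n): n for n in off_names}
    hits = difflib.get_close_matches(normalise(name), list(by_normalised), n=1, cutoff=cutoff)
    return by_normalised[hits[0]] if hits else None


off_names = ["Semi-skimmed milk", "Skimmed milk", "Whole milk", "Salted crisps"]  # hypothetical
print(best_match("Waitrose Essential Semi-Skimmed Milk 2L", off_names))  # Semi-skimmed milk
print(best_match("Mystery Item", off_names))  # None
```

Returning `None` rather than forcing a match keeps non-matches explicit, so the API can report them honestly. Tune `cutoff` by inspecting a sample of matches by hand.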

Working with inherited code: Read A’s README first. If something is unclear, ask via GitHub comments. Document friction — what made your job harder?

🏆 Going Further

Not required, but recognised: scraping multiple categories, implementing similarity matching for “closest non-UPF alternative”, meaningful pytest tests, exceptional code elegance.
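For the “closest non-UPF alternative”, a deliberately crude starting point is name similarity: among products with a known NOVA group below 4, pick the one whose name is most similar. This sketch uses `difflib.SequenceMatcher`; the product dicts are hypothetical, and similar names do not guarantee a sensible substitute, so treat it as a baseline to improve on (for example by restricting candidates to the same category).

```python
from difflib import SequenceMatcher
from typing import Optional


def closest_non_upf(upf: dict, products: list[dict]) -> Optional[dict]:
    """Most similarly named product whose NOVA group is known and below 4."""
    candidates = [p for p in products if p.get("nova_group") in (1, 2, 3)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda p: SequenceMatcher(None, upf["name"].lower(), p["name"].lower()).ratio(),
    )


# Hypothetical enriched products; unmatched items (nova_group None) are never suggested.
products = [
    {"name": "Salted Crisps", "nova_group": 4},
    {"name": "Salted Peanuts", "nova_group": 3},
    {"name": "Semi-Skimmed Milk", "nova_group": 1},
    {"name": "Cheese Puffs", "nova_group": None},
]
print(closest_non_upf(products[0], products)["name"])  # Salted Peanuts
```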

✔️ How We Will Grade Your Work

You are graded separately on your component. Student A’s grade does not directly affect Student B’s grade (though A’s work quality affects B’s experience).

Coherence matters: We assess whether your documentation matches your implementation. If you claim your scraper handles pagination elegantly but the code shows otherwise, this affects your mark.

Process matters: We look at your git history. Regular commits with meaningful messages demonstrate steady progress. A single massive commit the night before suggests last-minute work.

Brevity matters: We value code that is as short as possible while remaining clear. Over-engineered solutions, unnecessary abstractions, and verbose documentation will be marked down, not up.

Part A: Web Scraper (50 marks)

📥 Technical Implementation (0-25 marks)

| Marks | Level | Description |
|---|---|---|
| 0-5 | Poor | Scraper doesn’t run or produces no usable data. Major errors throughout. |
| 6-10 | Weak | Scraper runs but has significant problems: wrong data extracted, poor error handling, code is difficult to follow. Also applies to working scrapers buried under unnecessary complexity. |
| 11-15 | Fair | Scraper works for basic cases. Some issues with edge cases, error handling, or code organisation. |
| 16-20 | Good | Scraper works reliably. Handles pagination and errors reasonably. Code is clear, concise, and follows course patterns. No unnecessary abstractions. |
| 21-25 | Excellent | Exceptionally elegant scraper that solves the problem in the fewest lines possible without sacrificing readability. Every function earns its place. Handles edge cases without bloating the codebase. The kind of code that makes a reviewer think “I wish I’d written this.” |

📝 Documentation & Handoff Readiness (0-25 marks)

| Marks | Level | Description |
|---|---|---|
| 0-5 | Poor | README missing or useless. No instructions for running. Data inaccessible. |
| 6-10 | Weak | README exists but incomplete or bloated. Missing critical information, or buries it under unnecessary content. Walls of text count as weak, not thorough. |
| 11-15 | Fair | README covers basics but lacks clarity or contains filler. Student B could figure it out with some effort. |
| 16-20 | Good | Clear, concise README. Environment setup, run instructions, data access: nothing more, nothing less. Student B can start immediately without wading through prose. |
| 21-25 | Excellent | Documentation so precise it feels inevitable. Every sentence earns its place, and there are no fillers or unnecessary redundancy. Achieves clarity that most professional documentation fails to reach. |

Part B: API System (50 marks)

📥 Technical Implementation (0-25 marks)

| Marks | Level | Description |
|---|---|---|
| 0-5 | Poor | API doesn’t run or endpoints don’t work. Major errors throughout. |
| 6-10 | Weak | API runs but has significant problems: endpoints return wrong data, missing type hints, Pydantic misused. Also applies to functional APIs wrapped in unnecessary layers of abstraction. |
| 11-15 | Fair | API works for basic requests. Some issues with edge cases, validation, or code organisation. |
| 16-20 | Good | API works reliably. Proper use of FastAPI, Pydantic, type hints. Lean codebase: no wrapper functions that just call other functions, no premature abstractions. |
| 21-25 | Excellent | API implementation that achieves full functionality with minimal code. Pydantic models are tight and there are no redundant fields. Endpoints do exactly what they should, nothing more. Error handling is present but not theatrical (i.e. not over-engineered). The entire API could be understood in a single reading. |

🔗 Data Enrichment & API Design (0-25 marks)

| Marks | Level | Description |
|---|---|---|
| 0-5 | Poor | No NOVA enrichment attempted or completely broken. Endpoints don’t serve useful data. |
| 6-10 | Weak | Some enrichment attempted but poorly executed. Many products missing NOVA data with no explanation. Overly complex matching pipelines that don’t improve results also fall here. |
| 11-15 | Fair | Enrichment works for some products. Non-matches handled but not elegantly. API design is functional. |
| 16-20 | Good | Thoughtful matching strategy that balances accuracy with simplicity. Non-matches handled gracefully with clear rationale. API design serves the driving question without unnecessary endpoints. |
| 21-25 | Excellent | Matching strategy that is both effective and economical; there are no excessive multi-stage pipelines unless each stage demonstrably improves results. API design is minimal yet sufficient to answer the driving question completely. Documentation explains choices in a few sentences, not paragraphs. The rare submission that makes us reconsider what’s possible in the time given. |

♟️ Tactical Plan

Your Scraper (W02-W04)

| Week | Focus |
|---|---|
| W02 | Accept assignment, explore Waitrose site structure, determine whether you need Scrapy or Selenium |
| W03 | Build your scraper for one category, save initial data, iterate |
| W04 | Refine, document, ensure data is accessible; aim to finish by the W04 lecture |

Your API (W04-W06)

| Week | Focus |
|---|---|
| W04 | Receive pairing, clone their repo, review their code and data, create `feature/api` branch |
| W05 | Build FastAPI endpoints, implement Open Food Facts matching, save enriched data |
| W06 | Polish, document, submit Pull Request by Thursday 8pm |

📮 Need Help?

- Post questions in the `#help` Slack channel
- Check office hours (Barry hosts them on Mondays, 2-4pm, and Jon hosts them on Wednesdays, 2-5pm, bookable via StudentHub)
- For pairing issues or emergencies, DM Jon on Slack

Remember: the peer handoff is meant to teach you something about collaborative development. Some friction is expected. How you handle it is part of the learning.

📚 Appendix: NOVA Classification Refresher

| Group | Description | Examples |
|---|---|---|
| 1 | Unprocessed or minimally processed | Fresh fruit, vegetables, eggs, milk |
| 2 | Processed culinary ingredients | Oils, butter, sugar, salt |
| 3 | Processed foods | Canned vegetables, cheese, bread |
| 4 | Ultra-processed foods (UPF) | Soft drinks, packaged snacks, instant noodles |

The Open Food Facts API returns the NOVA classification in the `nova_group` field (1-4) or the `nova_groups_tags` field.
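A small helper that reads the NOVA group from an Open Food Facts product dict, preferring `nova_group` and falling back to `nova_groups_tags`. The tag format assumed here (e.g. `en:4-ultra-processed-food-and-drink-products`) is what the API typically returns, but check it against real responses; the sample dicts are made up.

```python
import re
from typing import Optional


def nova_group(product: dict) -> Optional[int]:
    """NOVA group (1-4) from an Open Food Facts product dict, or None if unknown."""
    value = product.get("nova_group")
    if str(value) in {"1", "2", "3", "4"}:  # accept both 4 and "4"
        return int(value)
    for tag in product.get("nova_groups_tags", []):
        match = re.match(r"(?:en:)?([1-4])-", tag)  # e.g. "en:4-ultra-processed-..."
        if match:
            return int(match.group(1))
    return None


print(nova_group({"nova_group": 4}))  # 4
print(nova_group({"nova_groups_tags": ["en:1-unprocessed-or-minimally-processed-foods"]}))  # 1
print(nova_group({"nova_groups_tags": ["unknown"]}))  # None
```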

📚 See Wikipedia: Nova classification for more detail.