📝 Problem Set 2: Climate Policy Information Extraction with NLP (40%)

2024/25 Winter Term

Overview

In this assignment, you will create a Natural Language Processing (NLP) system to extract specific climate policy information from Nationally Determined Contributions (NDC) documents.

Your goal is to build a data pipeline that can automatically retrieve information from unstructured data (the NDC PDFs) and answer the following question:

“What emissions reduction target is each country in the NDC registry aiming for by 2030?”

Motivation: You will take on the role of a TPI analyst who has just begun working on the EP2.a.i indicator of the ASCOR assessment. (In practice, the analyst will need to gather additional data beyond this assignment, as they will compare against the 2019 emissions levels.) Your aim is to automate the process as much as possible and create a pipeline that not only answers the question but also identifies the specific page, paragraph, or section of the PDF that contains the relevant piece(s) of information.
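One way to picture the pipeline's output is a small record that pairs each answer with the place it was found. The sketch below is only an illustration under stated assumptions: the `TargetRecord` fields, the `extract_target` helper, and the regular expressions are all hypothetical, and real NDCs phrase targets in many ways (absolute, intensity-based, relative to business-as-usual) that a simple pattern like this will miss.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class TargetRecord:
    """Hypothetical output row: the answer plus where it was found."""
    country: str
    reduction_percent: Optional[float]
    target_year: Optional[int]
    page: int
    source_text: str


# Naive patterns, assumed for illustration only.
PERCENT = re.compile(r"(\d+(?:\.\d+)?)\s*(?:%|per\s?cent)", re.IGNORECASE)
YEAR = re.compile(r"\b(20\d{2})\b")


def extract_target(country, page, text):
    """Pull the first percentage and a 2030 mention out of one paragraph."""
    percent = PERCENT.search(text)
    years = [int(y) for y in YEAR.findall(text)]
    return TargetRecord(
        country=country,
        reduction_percent=float(percent.group(1)) if percent else None,
        target_year=2030 if 2030 in years else None,
        page=page,
        source_text=text,
    )


record = extract_target(
    "Exampleland",  # made-up country name
    5,
    "We will reduce emissions by 27.5% below 2010 levels by 2030.",
)
empty = extract_target("Exampleland", 6, "No quantified target is given.")
```

Keeping `page` and `source_text` on every record means each extracted value can be traced back to the PDF, which is the part of the task the paragraph above emphasises.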

NOTE: We value your process leading to a strong final product more than the product itself. You can still achieve a high score even if only a few questions are automatically answered, as long as you demonstrate the use of the right skills.

💡 Tip: By the time this assignment is published, you will have been introduced to most of the necessary elements. The upcoming lectures and labs in Weeks 09 and 10 will cover the remaining topics, and we will also set aside time to help you make progress.

📚 Preparation

- Click on this GitHub Classroom link (see footnote 1) to create your designated repository.

- Follow the setup instructions in the starter code README to get your environment ready.

📤 Submission Details

📅 Due Date: Friday 28 March 2025, 8pm UK time

📤 Submission Method: Push your work to your allocated GitHub repository. If you can see your work on GitHub, we can see it too and that’s what we mark.

💡 Tip: Start early and make regular commits. This helps track your progress and ensures you have a working solution by the deadline.

⚠️ Note: Late submissions will receive penalties according to LSE’s policy.

📚 Required Tasks

This assignment consists of three main components:

- Document Analysis and Annotation (25%)

- Embedding Generation and Comparison (35%)

- Information Extraction System (40%)

The table below breaks down these three main components into what we expect you to do.

| Requirement | Details | Course Connection |
|---|---|---|
| Data Annotation (Document Analysis) | - Process PDF files to a structured data format - Label sections of the extracted text with location data (e.g. page number, section heading, etc.) - Document annotation methodology | 💻 W07 Lab: Text extraction methods + 💻 W08 Lab: Document chunking |
| Quality Assurance (Document Analysis) | - Validate consistency (🏆) - Handle edge cases (🏆) | 💻 W08 Lab: Exploratory quality assurance |
| Implementation (Embeddings) | - Compare keyword search (creative use of Word2Vec or of things like bag-of-words and TF-IDF) - Compare it with Transformer-generated embeddings | 🗣️ W07 Lecture: Word2Vec models + 🗣️ W08 Lecture: Transformer models |
| Analysis (Embeddings) | - Exploratory analysis of embedding spaces (e.g., pairwise similarity, visualisation) - Document discovery of insights (or lack of) from exploration - Document performance differences | 🗣️ W08 Lecture: Embedding visualization + 💻 W08 Lab: HuggingFace ecosystem |
| Vector Search (Extraction) | - Implement similarity search - Apply thresholds or other relevant filters (🏆) | 🗣️ W09 Lecture: PostgreSQL with pgVector + 💻 W09 Lab: Vector search implementation |
| Pipeline (Extraction) | - Design effective prompts - Extract relevant information to a structured data format | 🗣️ W10 Lecture: Collaborative extraction techniques + 💻 W10 Lab: Extraction workshops |
| Evaluation (Extraction) | - Document & page-level precision - Paragraph-level precision (🏆) - Compare results against manually-curated ground-truth annotations (🏆) | 💻 W09 Lab: Location precision techniques + 🗣️ W10 Lecture: Precision evaluation |

Everything that is NOT marked with a 🏆 is considered a core requirement. The 🏆 marks are intended to reward those who can go above and beyond and deserve a distinction.
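To make the keyword-search and thresholding rows of the table concrete, here is a minimal sketch of a TF-IDF baseline with cosine similarity, written in plain Python so that every step is visible. The function names, the example chunks, and the `0.1` threshold are all assumptions chosen for illustration; in practice you would likely use a library implementation and tune the threshold on your own data.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (dict) per document, plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed IDF, so terms that occur in every document keep a small weight.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}
    vectors = [
        {t: count * idf[t] for t, count in Counter(tokens).items()}
        for tokens in tokenized
    ]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query, docs, threshold=0.1):
    """Return (score, index) pairs at or above the threshold, best first."""
    vectors, idf = tfidf_vectors(docs)
    # Query terms never seen in the corpus are dropped.
    q_vec = {t: c * idf[t] for t, c in Counter(tokenize(query)).items() if t in idf}
    scored = [(cosine(q_vec, v), i) for i, v in enumerate(vectors)]
    return sorted(((s, i) for s, i in scored if s >= threshold), reverse=True)


# Made-up chunks standing in for extracted NDC paragraphs.
chunks = [
    "We commit to reduce greenhouse gas emissions by 40% by 2030.",
    "The national adaptation plan focuses on water resources.",
    "Forestry sector measures are described in annex B.",
]
results = search("emissions reduction target 2030", chunks)
```

Note that the query words "reduction" and "target" contribute nothing here because they never appear verbatim in the chunks. That is the kind of gap a comparison with Transformer-generated embeddings can expose.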

🛠️ Getting Started: Starter Code & Project Structure

You won’t start completely from scratch. I’m giving you a good starting point to help you focus on the NLP components rather than boilerplate. Here’s what you’ll find:

climate-policy-extractor/
├── climate_policy_extractor/
│   ├── __init__.py
│   ├── items.py
│   ├── settings.py
│   └── spiders/
│       ├── __init__.py
│       └── ndc_spider.py
├── notebooks/ # Just a suggestion. Remove/add as needed
│   ├── NB01-pdf-extractor.ipynb
│   ├── NB02-embedding-comparison.ipynb
│   ├── NB03-information-retrieval.ipynb
│   └── NB04-evaluation.ipynb
├── README.md
├── REPORT.md
├── requirements.txt
└── scrapy.cfg

What’s Already Implemented:

Web scraping pipeline that collects NDC documents and saves the PDFs in the

data/pdfsfolderRun with

scrapy crawl ndc_spider

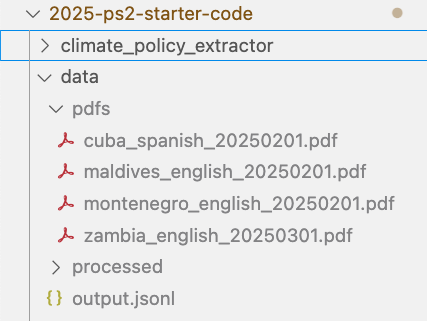

You should see the following in yourdata/folder when you run the spider:

Search for

TODOin the code to find the places you need to implements

Basic file management

Skeleton for document extraction using the

unstructuredlibrary- The relevant code is in the

notebooks/NB01and on thenotebooks/utils.pyfile.

- The relevant code is in the

Empty notebooks with suggested workflow structure

Documentation templates

README with initial setup instructions
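Building on the extraction skeleton, the annotation step might look something like the sketch below, which attaches a page number, the most recent section heading, and a paragraph index to each piece of text. It assumes the extractor's output has been simplified to `(page, kind, text)` tuples; that tuple format, the `Chunk` class, and the `annotate` function are hypothetical. The `kind` labels loosely mimic element category names such as `Title` and `NarrativeText`, so check what your own extraction actually returns.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """Hypothetical annotated chunk: text plus its location in the PDF."""
    doc_id: str
    page: int
    section: str
    paragraph: int  # 1-based position among body paragraphs on that page
    text: str


def annotate(doc_id, elements):
    """Label each body element with page, current heading, and paragraph index.

    `elements` is an assumed list of (page, kind, text) tuples in reading
    order, where kind == "Title" marks a section heading.
    """
    chunks = []
    section = "(no heading)"
    per_page_count = {}
    for page, kind, text in elements:
        if kind == "Title":
            # Headings update the running section label; they are not chunks.
            section = text.strip()
            continue
        per_page_count[page] = per_page_count.get(page, 0) + 1
        chunks.append(Chunk(doc_id, page, section, per_page_count[page], text.strip()))
    return chunks


# Made-up elements standing in for one extracted document.
elements = [
    (1, "Title", "1. Mitigation"),
    (1, "NarrativeText", "Emissions will be cut by 30% by 2030."),
    (2, "NarrativeText", "This target is conditional on support."),
    (2, "Title", "2. Adaptation"),
    (2, "NarrativeText", "Coastal protection is a priority."),
]
chunks = annotate("XYZ-NDC", elements)
```

Carrying the heading forward across pages is a design choice: a section that starts on page 1 still labels its paragraphs on page 2 until a new heading appears.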

What You Need to Implement:

- Complete the document extraction and annotation process

- Build embedding generation and comparison functionality

- Develop the information retrieval system

- Create evaluation metrics and performance analysis

The starter code handles the tedious parts of data collection, allowing you to focus on the NLP components that form the core of this assessment.
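For the evaluation component, document-level and page-level precision can be computed as the share of returned locations that match a hand-curated annotation. The sketch below assumes predictions and ground truth are both stored as dictionaries mapping a country code to a `(document, page)` pair; the data layout, the function name, and the example values are hypothetical.

```python
def location_precision(predictions, ground_truth, level="page"):
    """Fraction of predictions whose location matches the ground truth.

    level="document" compares the document name only;
    level="page" requires both document and page to match.
    Predictions for countries missing from the ground truth count as misses.
    """
    if not predictions:
        return 0.0
    hits = 0
    for country, (doc, page) in predictions.items():
        truth = ground_truth.get(country)
        if truth is None:
            continue
        if level == "document":
            if doc == truth[0]:
                hits += 1
        elif (doc, page) == truth:
            hits += 1
    return hits / len(predictions)


# Made-up annotations and system output, for illustration only.
ground_truth = {
    "AAA": ("aaa.pdf", 4),
    "BBB": ("bbb.pdf", 12),
    "CCC": ("ccc.pdf", 7),
}
predictions = {
    "AAA": ("aaa.pdf", 4),      # right document, right page
    "BBB": ("bbb.pdf", 10),     # right document, wrong page
    "CCC": ("ccc_old.pdf", 7),  # wrong document
    "DDD": ("ddd.pdf", 1),      # no ground truth available
}
doc_precision = location_precision(predictions, ground_truth, level="document")
page_precision = location_precision(predictions, ground_truth, level="page")
```

Whether unannotated countries should count as misses or be excluded from the denominator is a judgment call, and one worth stating explicitly in your report.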

✔️ Marking Guide

In line with the unwritten but widely-used UK marking conventions, grades must be awarded as follows:

- 40-49: Basic implementation with significant room for improvement (typically missing many core requirements)

- 50-59: Working implementation but one that meets only the very basic requirements (it looks very incomplete)

- 60-69: Good implementation demonstrating solid understanding with small caveats and minor improvements possible

- 70+: Excellent implementation going beyond expectations, showing creativity and depth of understanding without being overly verbose or over-engineered

Note from Jon: I find this artificial ‘cap’ at 70+ marks silly and unnecessary, and it clashes with what I understand to be the pedagogical purpose of an undergraduate course that is all about demonstrating hands-on experience. If I can show that your work is of a high standard and clearly demonstrates that you are truly and meaningfully engaged with the material beyond a shallow level, I’ll be happy to award distinctions.

📋 Deliverables

| Deliverable | Details |
|---|---|
| GitHub Repository | - Complete implementation code (not just starter code) - Documentation - Requirements file |
| Technical Report | - Annotation methodology - Embedding performance analysis - System evaluation results - Design decisions and trade-offs |
| Interactive Demo Notebook(s) | - System workflow - Example queries - Performance metrics - Summary of results (tables and/or charts) |

⚠️ Note: Your implementation should be reproducible. Include clear setup instructions and handle dependencies appropriately.

👂 Feedback

You will receive:

- Detailed feedback on your implementation

- Suggestions for improvement

- Justification for marks awarded

- Specific suggestions for potential contributions to the public repository

By the way, you should receive feedback on your previous assignment by Week 09.

👂 Show you can act on feedback

In Spring Term 2025, we will evaluate all submissions and select the best ones to create a public repository. All students will then have the opportunity to contribute through pull requests (PRs) based on the feedback received on their individual submissions.

These contributions can earn you additional marks beyond your initial grade. This approach allows you to:

- Improve your work based on detailed feedback

- Gain experience with collaborative development workflows

- Build a public portfolio piece that showcases your NLP skills

The specific details about the public repository and contribution process will be shared later.

👉 We understand this timeline might not work for everyone, given a potential clash with this course's group project as well as the exam period. Since these are essentially extra marks, don’t feel pressured to take part.

🔗 Connection to Future Work

The skills developed in this assignment directly support your upcoming group project where you’ll:

- Work in teams to build more complex information extraction systems

- Implement automated pipelines with CI/CD (Week 11)

- Scale solutions to handle larger document collections

- Address real-world information needs for climate policy analysis

💡 Tip: Attending Weeks 09-11 will provide critical knowledge for both completing this assignment effectively and preparing for your group project.

Footnotes

1. Visit the Moodle version of this page to get the link. The link is private and only available to formally enrolled students.