Week 08

SQLite Foundations for Data Projects

DS105A – Data for Data Science

🗓️ 20 Nov 2025

Today’s Goals

- Learn: Database fundamentals and SQLite storage

- Understand: Why organisations normalise data into multiple tables

- Practice: Schema design,

pd.merge(), and basic SQL queries

Why this matters: These skills enable structured data storage and support your ✍️ Mini-Project 2 work, where you can optionally use SQLite for organising TfL and ONS data.

1️⃣ Intro to Databases

The Scenario

If you work for a company, you will discover that they likely already have a lot of data in their internal systems.

You might find data in Excel spreadsheets (which you can easily read into Python using

pandas) or convert them to CSV files.At larger organisations, you will likely find that this operational data is stored in databases.

Files vs Databases

Files

| Common formats: | TXT, CSV, TSV, JSON, JSONL, XML, Excel |

| Most used when: |

|

Databases

| Popular systems: | SQLite 🆓, MySQL 🆓, PostgreSQL 🆓, Oracle 💰, Microsoft SQL Server 💰, cloud-based databases |

| Most used when: |

|

Remember Binary Files?

In W03, we used the cat command to view files.

📋 NOTE:

You also won’t be able to view the contents of a database file with cat because it’s not a plain text file. Internally, databases use data structures more advanced than the ones we learn in this course to store and guarantee data integrity.

🔗 Link: (Advanced) Click here to learn of how one database system (SQLite) stores its data in its internal file format.

Database as a collection of tables

A relational database is a collection of tables connected by relationships.

Tables store data in rows and columns, similar to DataFrames.

We can connect tables through information they share.

(I labelled the relationships in this imaginary diagram just for the sake of clarity. In reality, the type of databases I am talking about don’t allow you to name the relationships.)

Tables are like enhanced DataFrames

Columns in the pandas DataFrame are like columns in the database table.

Pandas DataFrame (movies)

| movie_id (int64) |

title (object) |

rating (float64) |

votes (int64) |

|---|---|---|---|

| 1 | Carmencita | 5.7 | 1335 |

| 2 | Le clown | 5.4 | 842 |

| 3 | Pauvre Pierrot | 6.2 | 1971 |

But in pandas, you can:

- add or remove columns at will

- change the types of columns anytime

- have missing values in any column

- rely on row indices instead of enforcing keys (like foreign keys in databases)

But database tables are stricter

You can’t just do anything you want with a database table. You have to follow the rules.

Database table (movies)

| movie_id (PK) (INTEGER) |

title (TEXT NOT NULL) |

average_rating (REAL) |

num_votes (INTEGER NOT NULL) |

|---|---|---|---|

| 1 | Carmencita | 5.7 | 1335 |

| 2 | Le clown | 5.4 | 842 |

| 3 | Pauvre Pierrot | 6.2 | 1971 |

Database table (ratings)

| movie_id (PK, FK) (INTEGER) |

average_rating (REAL) |

num_votes (INTEGER) |

|---|---|---|

| 1 | 5.7 | 1335 |

| 2 | 5.4 | 842 |

| 3 | 6.2 | 1971 |

You need to know that:

- tables must be defined before you insert data, and changing them later is harder

- a column can only have missing values if it is declared

NULLwhen the table is created - tables have primary keys that uniquely identify each row (PK = Primary Key)

- tables can have foreign keys that reference other tables (FK = Foreign Key)

2️⃣ Reading from databases (IMDb exploration)

There are many databases out there. We’ll use one called SQLite because it’s free, lightweight, and easy to use.

What is IMDb?

- IMDb (Internet Movie Database) powers millions of lookups.

- We’re examining the real production schema, not a toy example.

- Same normalisation principles you’ll apply to TfL + ONS data.

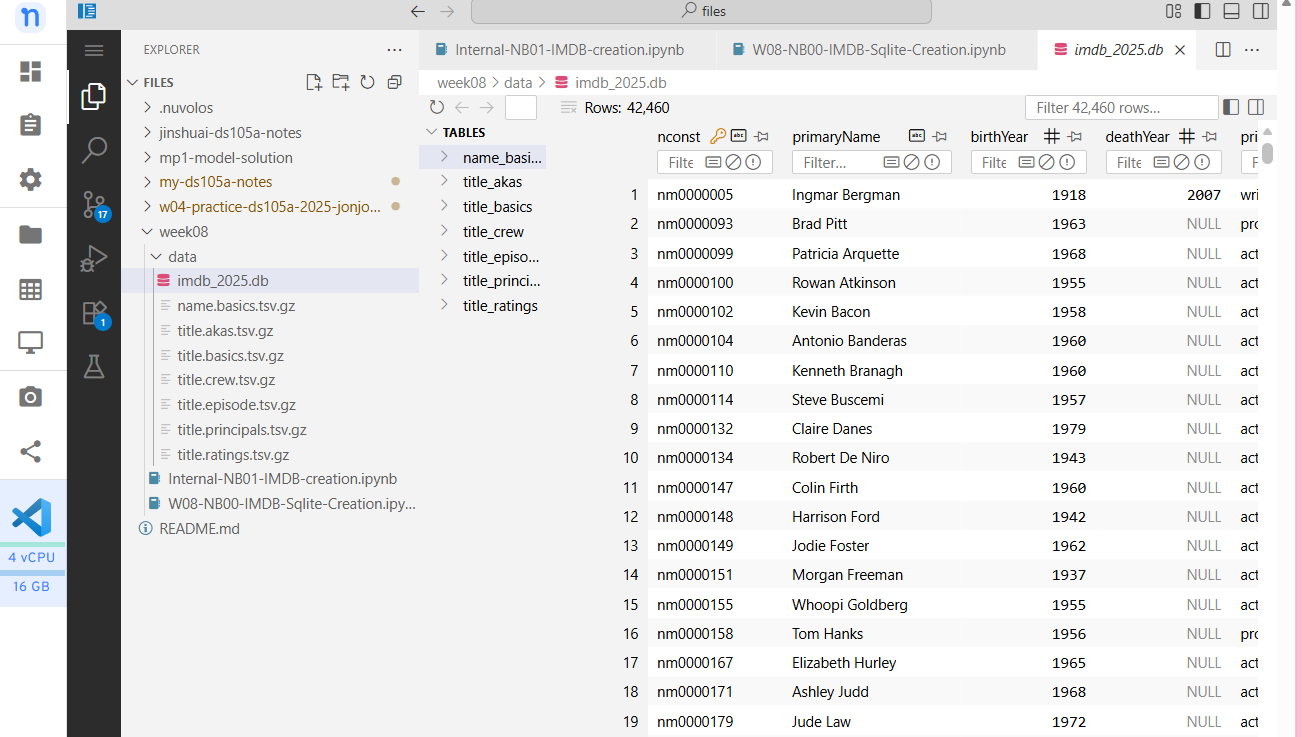

To keep VS Code responsive we created imdb_2025.db, a curated slice of titles whose endYear = 2025.

You should see a copy of it on your workspace at /files/week08/data/imdb_2025.db.

Explore a database visually (1/2)

Install the SQLite Viewer extension in VS Code.

- Access

VS Code on

![]() Nuvolos

Nuvolos - Click on the Extensions icon in the left sidebar

- Search for “SQLite Viewer”

- Click on the “Install” button

- Access

Explore a database visually (2/2)

Open the database file in the SQLite Viewer.

- Go back to the file explorer in VS Code

- Navigate to

imdb_2025.dbinside your Week 08 data folder - Right-click the file and select “Open with SQLite Viewer”

- You should see the database file open in the SQLite Viewer

Explore database using Python

From within /files/week08/ you will find a W08-NB01-Lecture notebook which has code like the one below. We need to add the sqlite3 library to the notebook.

Then we can connect to the database and read the first 5 rows of the title_basics table:

(Alt) Connect via Nuvolos Tables

Note from Jon: Nuvolos has this particular feature where it lets us all access the same database. Listen closely during the lecture to see if this is enabled. It if is, you can use the code below to connect to the database.

The Nuvolos version (if available) is much bigger than the imdb_2025.db file you have on your workspace and has all the historical data.

SQL Basics Preview

SQL stands for Structured Query Language. It is the language used to query databases and the inspiration for many of pandas’s DataFrame methods.

Tomorrow’s lab builds SQL skills progressively:

SELECTspecific columnsWHEREto filter rowsCOUNTandCOUNT(DISTINCT)for aggregationsGROUP BYto split-apply-combine- Combine everything to recreate plot_df

Today, we will use the accompanying notebook to explore these patterns. Listen carefully during the lecture.

Explore the Structure

IMDb has several tables. Look at a few:

# Titles and basic information

pd.read_sql("SELECT * FROM title_basics LIMIT 5", con)

# Ratings for those titles

pd.read_sql("SELECT * FROM title_ratings LIMIT 5", con)

# Actors and their roles

pd.read_sql("SELECT * FROM title_principals LIMIT 5", con)

# Names of actors

pd.read_sql("SELECT * FROM name_basics LIMIT 5", con)Data split across multiple tables.

How IMDb Tables Connect

Look at tconst. It appears in both title_basics and title_ratings.

title_basics:

| tconst | primaryTitle |

|---|---|

| tt0000001 | Carmencita |

| tt0000002 | Le clown |

title_ratings:

| tconst | averageRating |

|---|---|

| tt0000001 | 5.7 |

| tt0000002 | 5.4 |

One title has one rating. Connected by tconst.

ORDER BY + LIMIT (rankings)

How can I get the top 10 highest rated popular (100k+ votes) titles?

| tconst | averageRating | numVotes |

|---|---|---|

| tt4574334 | 8.6 | 1504453 |

| tt9253284 | 8.6 | 255970 |

| tt7221388 | 8.4 | 237080 |

| tt5834204 | 8.3 | 290989 |

| tt31806037 | 8.1 | 252695 |

| tt10919420 | 8.0 | 727012 |

| tt1869454 | 8.0 | 121327 |

| tt1751634 | 7.6 | 204936 |

| tt7335184 | 7.6 | 354235 |

| tt7462410 | 7.2 | 161539 |

3️⃣ Tidy Data

Tidy Data Principle

One table represents one thing.

Ask of every table: what does a single row describe?

If the answer changes mid-table, you probably need another table.

Unit of Analysis

Each table has a unit of analysis. In our example:

title_basics: Each row describes a title (movie, TV series, episode)

title_ratings: Each row describes a title’s rating

title_principals: Each row describes an actor’s role in a title

Only JOIN When Needed

You won’t always need to join tables. It all depends on the question you are asking.

Question: “How many titles per type?” → Query

title_basicsonlyQuestion: “Average rating per title type?” → JOIN

title_basicswithtitle_ratingsQuestion: “Which actors appear in highest rated titles?” → JOIN all three

Query design: use minimum tables needed.

4️⃣ pd.merge() and SQL JOIN

🤔 How to grab the title names?

Back to our example. How can I get the top 10 highest rated popular (100k+ votes) titles?

| tconst | averageRating | numVotes |

|---|---|---|

| tt4574334 | 8.6 | 1504453 |

| tt9253284 | 8.6 | 255970 |

| tt7221388 | 8.4 | 237080 |

| tt5834204 | 8.3 | 290989 |

| tt31806037 | 8.1 | 252695 |

| tt10919420 | 8.0 | 727012 |

| tt1869454 | 8.0 | 121327 |

| tt1751634 | 7.6 | 204936 |

| tt7335184 | 7.6 | 354235 |

| tt7462410 | 7.2 | 161539 |

🤔 How to grab the title names? (cont.)

If we have this DataFrame:

.isin() checks whether each tconst in df_titles appears inside the short list of df_top_ratings.

Why filtering is not enough

The output would look like this:

| tconst | primaryTitle | titleType | |

|---|---|---|---|

| 87 | tt10919420 | Squid Game | tvSeries |

| 340 | tt1751634 | The Sandman | tvSeries |

| 354 | tt1869454 | Good Omens | tvSeries |

| 862 | tt31806037 | Adolescence | tvMiniSeries |

| 5186 | tt4574334 | Stranger Things | tvSeries |

| 5204 | tt5834204 | The Handmaid’s Tale | tvSeries |

| 5240 | tt7221388 | Cobra Kai | tvSeries |

| 5242 | tt7335184 | You | tvSeries |

| 5246 | tt7462410 | The Wheel of Time | tvSeries |

| 5305 | tt9253284 | Andor | tvSeries |

.isin()works, but you have to manage masks manually.- We still want the rating columns and the titles in one DataFrame.

- This is the moment to learn how to join tables in pandas.

Introducing pd.merge()

on="tconst"tells pandas which column matches between tables.how="inner"keeps only the rows that exist in both tables.- Result: ratings + human-readable title in one DataFrame.

The output

This is more like how we want to see the data.

| tconst | averageRating | numVotes | primaryTitle | titleType | |

|---|---|---|---|---|---|

| 0 | tt4574334 | 8.6 | 1504453 | Stranger Things | tvSeries |

| 1 | tt9253284 | 8.6 | 255970 | Andor | tvSeries |

| 2 | tt7221388 | 8.4 | 237080 | Cobra Kai | tvSeries |

| 3 | tt5834204 | 8.3 | 290989 | The Handmaid’s Tale | tvSeries |

| 4 | tt31806037 | 8.1 | 252695 | Adolescence | tvMiniSeries |

| 5 | tt10919420 | 8.0 | 727012 | Squid Game | tvSeries |

| 6 | tt1869454 | 8.0 | 121327 | Good Omens | tvSeries |

| 7 | tt1751634 | 7.6 | 204936 | The Sandman | tvSeries |

| 8 | tt7335184 | 7.6 | 354235 | You | tvSeries |

| 9 | tt7462410 | 7.2 | 161539 | The Wheel of Time | tvSeries |

Merge parameters cheat sheet

| Parameter | Meaning |

|---|---|

left, right |

DataFrames you want to combine |

on |

Column present in both DataFrames |

left_on, right_on |

Use when join column names differ |

how |

"inner", "left", "right", "outer" |

suffixes |

Rename overlapping columns (('_rating', '_info')) |

This is the same logic you’ll use for MP2’s TfL + ONS merges if you choose to use a database.

SQL JOIN equivalent

Everything above can be run directly inside SQLite as well:

pd.read_sql() will return the merged DataFrame without needing pandas-side merge().

5️⃣ Creating a SQLite Database

You do not have to build your own database for ✍️ Mini Project 2, but you will for the 📦 Final Project. These slides demonstrate part of the process of creating a SQLite database.

How these tables were created

In Part 1 I showed how we describe a table, what we call the schema.

If you decide to define your own tables (optional for ✍️ Mini Project 2, required later), this is exactly how the IMDb schema looks in SQL:

Schema designed first. Then data populated.

Creating the SQLite file

Once those statements exist you can write them to a .db file like this:

Populating IMDb Tables

If this data was in DataFrames, you’d populate like this:

By the way, be careful with the if_exists parameter.

Group Question

Why separate ratings from titles? What problems does one table create?

Think about what happens if a rating changes. Or if we want to add more information about titles.

Tomorrow’s Lab

In tomorrow’s 💻 W08 Lab, you’ll:

- Practice SQL queries progressively (SELECT → WHERE → GROUP BY)

- Write pandas equivalents to verify understanding

- Independently create plot_df using SQL by lab end

Bonus: Schema Design

Schema Design Pattern (Optional)

Want to see how to design your own schema? Here’s the pattern:

CREATE TABLE journeys (

journey_id INTEGER PRIMARY KEY,

postcode_origin TEXT NOT NULL,

postcode_destination TEXT NOT NULL,

total_duration INTEGER

);

CREATE TABLE steps (

step_id INTEGER PRIMARY KEY,

journey_id INTEGER NOT NULL,

step_distance INTEGER,

step_travel_mode TEXT,

FOREIGN KEY (journey_id) REFERENCES journeys(journey_id)

);Key Takeaways

Today you learned:

- ✅ Why organisations normalise data

- ✅ How to design schemas

- ✅

pd.merge()with normalised data - ✅ Basic SQL queries

Remember: Normalised storage enables flexible analysis. JOIN when your question requires it.

Questions?

Resources:

- 📓 Lecture notebook (downloadable on Nuvolos)

- 💻 W08 Lab tomorrow

- 💬 Post questions in

#helpon Slack - 📅 Attend drop-in sessions

Looking ahead: 🖥️ W09 Lecture more SQL practice and renewed focus on data visualisation.

![]()

LSE DS105A (2025/26)