```mermaid
sequenceDiagram
    participant Browser
    participant Server
    Browser->>Server: HTTP Request
    Server-->>Browser: HTTP Response
```

🗓️ Week 02

From APIs to Web Scraping

DS205 – Advanced Data Manipulation

26 Jan 2026

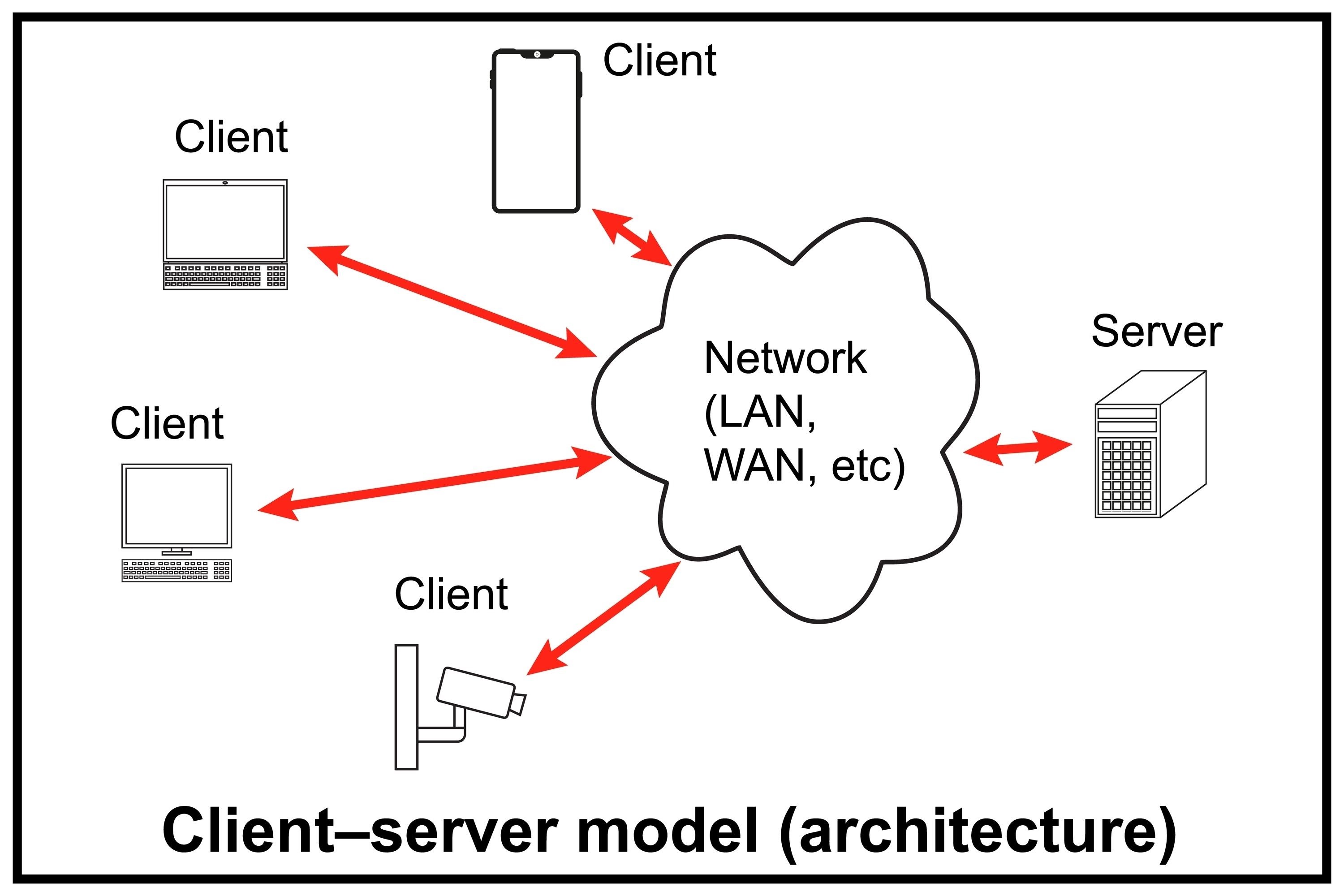

The Internet and the Web

The Internet vs The Web

The Internet

- Cables, routers, and protocols that allow devices to communicate with each other (TCP/IP)

- The infrastructure

The Web

- When most of us say “the Internet”, we most likely mean “the Web”

- An application that runs on top of the Internet

- Built on HTTP and HTML

How computers find each other

- Every device connected to the Internet has an IP address.

- Note though that a device typically has an internal IP address (e.g. on your home network) and an external IP address (e.g. on the Internet).

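- Find out your internal IP address by running a command in the terminal: `hostname -I` on Linux, `ipconfig getifaddr en0` on macOS (`en0` is usually the main network interface), or `ipconfig` on Windows.

- To see your external IP address, go to https://whatismyipaddress.com/.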

- A system called DNS (Domain Name System) maps names like `wikipedia.org` to the corresponding IP addresses, so you don’t have to remember them.
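Both ideas, internal vs external addresses and DNS lookups, can be explored with Python's standard library. The sketch below is a minimal illustration. `resolve()` asks the operating system's resolver, which uses DNS, for a hostname's IPv4 addresses. `is_internal()` uses the `ipaddress` module to check whether an address falls in a range reserved for private networks, such as a home network. Both function names are hypothetical helpers, not course code.

```python
import ipaddress
import socket


def resolve(hostname: str) -> list[str]:
    """Look up the IPv4 addresses for a hostname via the OS resolver (DNS)."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port)
    return sorted({info[4][0] for info in infos})


def is_internal(address: str) -> bool:
    """True for private-network ranges (e.g. 192.168.x.x) and loopback addresses."""
    return ipaddress.ip_address(address).is_private


print(resolve("localhost"))          # the loopback address: this machine itself
print(is_internal("192.168.1.10"))   # True: a typical home-network address
print(is_internal("8.8.8.8"))        # False: a public address on the Internet
```

With an internet connection, `resolve("wikipedia.org")` returns Wikipedia's current public addresses. These can change over time and can differ depending on where you are.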

How did the Web come to be?

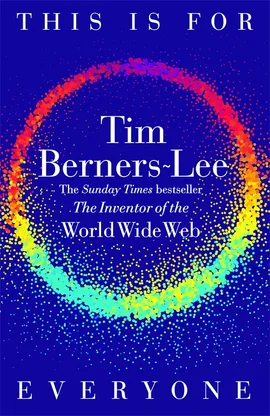

" The most influential inventor of the modern world, Sir Tim Berners-Lee is a different kind of visionary. Born in the same year as Bill Gates and Steve Jobs, Berners-Lee famously shared his invention, the World Wide Web, for no commercial reward. Its widespread adoption changed everything, transforming humanity into the first digital species. Through the web, we live, work, dream and connect.

In this intimate memoir, Berners-Lee tells the story of his iconic invention, exploring how it launched a new era of creativity and collaboration while unleashing a commercial race that today imperils democracies and polarizes public debate. As the rapid development of artificial intelligence heralds a new era of innovation, Berners-Lee provides the perfect guide to the crucial decisions ahead – and a gripping, in-the-room account of the rise of the online world.”

(Synopsis from the publisher) Berners-Lee, T. (with Witt, S.). (2025). This is for everyone. Macmillan.

HTTP Request and Response

- HTTP (HyperText Transfer Protocol) is the protocol the Web is built on, and it runs on top of the Internet.

- HTTP lets a client, such as your browser, send requests to another computer (a server) and receive responses back.

💡 A Web browser sends many requests for one page, one for each resource (HTML, CSS, JavaScript, images, fonts, etc.).
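To see a single request/response round trip without depending on any external website, here is a minimal sketch that uses only Python's standard library. A throwaway local server (`http.server`) answers with a small HTML page, and a client (`http.client`) sends it one `GET` request. `PageHandler` and `fetch()` are hypothetical names for illustration. In the course you would normally send requests with `requests.get()` instead.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class PageHandler(BaseHTTPRequestHandler):
    """Hypothetical server: one HTML page at "/" and a 404 everywhere else."""

    def do_GET(self):
        if self.path != "/":
            self.send_error(404)  # status line, headers and a short error page
            return
        body = "<html><body><h1>Hello from the server</h1></body></html>".encode("utf-8")
        self.send_response(200)  # status line: HTTP/1.0 200 OK
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()  # blank line separating headers from the body
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def fetch(path: str) -> tuple[int, str, str]:
    """Start the server, send one GET request for `path`, return (status, reason, body)."""
    server = HTTPServer(("127.0.0.1", 0), PageHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", path)      # Browser ->> Server: HTTP Request
        response = conn.getresponse()  # Server -->> Browser: HTTP Response
        body = response.read().decode("utf-8")
        conn.close()
        return response.status, response.reason, body
    finally:
        server.shutdown()
        server.server_close()


status, reason, body = fetch("/")
print(status, reason)  # 200 OK
print(body)
```

Calling `fetch("/missing")` instead returns status `404` with the reason `"Not Found"`, because the handler only knows about `/`.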

HTTP Response Codes

HTTP responses come with ‘status codes’ that indicate whether the request was successful or not. Typical ones you will encounter are:

- 200 OK

- 404 Not Found

- 500 Internal Server Error

You can find a full list here.
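The first digit of a status code gives its class: 1xx informational, 2xx success, 3xx redirection, 4xx client error, and 5xx server error. The sketch below uses the standard library's `http.HTTPStatus` enum to look up the standard phrase for a code. The `describe()` helper is a hypothetical name. With `requests`, the same number is available as `response.status_code`, and `response.raise_for_status()` raises an exception for 4xx and 5xx codes.

```python
from http import HTTPStatus


def describe(code: int) -> str:
    """Return e.g. '404 Not Found: client error' for a numeric status code."""
    phrase = HTTPStatus(code).phrase  # raises ValueError for non-standard codes
    if code < 200:
        family = "informational"
    elif code < 300:
        family = "success"
    elif code < 400:
        family = "redirection"
    elif code < 500:
        family = "client error"
    else:
        family = "server error"
    return f"{code} {phrase}: {family}"


for code in (200, 404, 500):
    print(describe(code))
# 200 OK: success
# 404 Not Found: client error
# 500 Internal Server Error: server error
```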

Why requests.get() Is Not a Browser

requests.get()

- One request

- No JavaScript

- One HTML response

Browser

- Dozens of requests

- Runs JavaScript

- Builds the page you see
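The difference shows up as soon as you look at what comes back. `requests.get()` hands you one HTML document as text. A browser reads that document and then sends further requests for every stylesheet, script and image it references. Below is a minimal sketch of that discovery step using Python's built-in `html.parser`. The `ResourceFinder` class and the HTML snippet are hypothetical.

```python
from html.parser import HTMLParser


class ResourceFinder(HTMLParser):
    """Collects URLs of sub-resources a browser would go on to request.

    Only looks at <img>, <script> and stylesheet <link> tags; real browsers
    also fetch fonts, CSS background images and anything JavaScript asks for.
    """

    def __init__(self):
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("img", "script") and attrs.get("src"):
            self.resources.append(attrs["src"])
        elif tag == "link" and attrs.get("rel") == "stylesheet" and attrs.get("href"):
            self.resources.append(attrs["href"])


html = """<html><head>
<link rel="stylesheet" href="/style.css">
<script src="/app.js"></script>
</head><body><img src="/logo.png"><div id="products"></div></body></html>"""

finder = ResourceFinder()
finder.feed(html)
print(finder.resources)  # ['/style.css', '/app.js', '/logo.png']
```

A browser would make three more requests for this page, and `/app.js` might then fill the empty `products` container. A single `requests.get()` call does none of that.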

HTML, CSS, JavaScript

The main components of a web page are built with these three languages:

HTML

Structure and meaning

CSS

Appearance and layout

JavaScript

Behaviour and interactivity

HTML describes the structure of a web page

- HTML stands for HyperText Markup Language

- Just like Markdown, it is a markup language (a language that describes the structure of content)

- It labels content as headings, lists, and links
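To see that HTML really is a nested structure, here is a small sketch using Python's built-in `html.parser` module. The hypothetical `OutlinePrinter` class records each opening tag, indented by how deeply it is nested. The HTML snippet is made up for illustration.

```python
from html.parser import HTMLParser


class OutlinePrinter(HTMLParser):
    """Records each element's tag name, indented by its nesting depth."""

    # Void elements have no closing tag, so they never increase the depth
    VOID = {"br", "hr", "img", "input", "link", "meta"}

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.lines = []

    def handle_starttag(self, tag, attrs):
        self.lines.append("  " * self.depth + tag)
        if tag not in self.VOID:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag not in self.VOID:
            self.depth -= 1


html = "<html><body><h1>Shop</h1><ul><li><a href='/apples'>Apples</a></li></ul></body></html>"
outline = OutlinePrinter()
outline.feed(html)
print("\n".join(outline.lines))
# html
#   body
#     h1
#     ul
#       li
#         a
```

This sketch assumes every non-void element is explicitly closed. Real pages often omit closing tags such as `</li>` or `</p>`, which is one reason scrapers rely on more forgiving parsers.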

Markdown and HTML

👉 When you write Markdown and preview it in VS Code or in a browser, what you see is HTML: software has converted each Markdown element into its HTML equivalent.

| Markdown | HTML |
|---|---|
| `**Bold**` | `<strong>Bold</strong>` |
| `_Italic_` | `<em>Italic</em>` |
| `# Heading` | `<h1>Heading</h1>` |
| `- List item` | `<ul><li>List item</li></ul>` |
| `[Link](https://example.com)` | `<a href="https://example.com">Link</a>` |

CSS controls how elements look

- CSS stands for Cascading Style Sheets

- It defines colours, fonts, spacing, and layout for HTML elements

- CSS uses selectors to target HTML elements

Why selectors matter for scraping

The same selector syntax that CSS uses to style elements is what we use to find elements when scraping. If you can select it for styling, you can select it for extraction.

CSS Selectors: Your scraping vocabulary

| Selector | Meaning |
|---|---|
| `h1` | All `<h1>` elements |
| `.product` | Elements with `class="product"` |
| `#price` | The element with `id="price"` |
| `div.product` | `<div>` elements with class `product` |
| `ul li` | `<li>` elements inside a `<ul>` |
| `a[href]` | `<a>` elements that have an `href` attribute |

Try it in DevTools

Open the Console tab and run:

This returns all elements matching that selector. The same logic powers Scrapy’s response.css() method.

JavaScript makes pages interactive

- JavaScript (JS) runs in the browser after the page loads

- It can fetch new data, update content, and respond to user actions

- Many modern sites load content after the initial HTML arrives

The scraping problem

If content is loaded by JavaScript, it won’t appear in the HTML that requests.get() returns. You’ll get an empty container where the content should be.

This is why web scraping sometimes requires tools that drive a real browser, such as the Python package Selenium, to get at the content.

Static vs Dynamic Content

Static (Scrapy works)

- Content is in the initial HTML

- What you see in the HTML source you get from `requests.get()` matches what you see on screen

- Examples: Wikipedia, many news sites, government portals

Dynamic (may need Selenium)

- Content loaded by JavaScript after the page renders

- The HTML source you get from `requests.get()` shows empty containers or loading spinners

- Examples: Single-page apps, infinite scroll, some e-commerce sites
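A practical version of this test is to take a piece of text you can see on screen and look for it in the raw HTML, for example the `.text` of a `requests.get()` response. Below is a rough sketch using Python's built-in `html.parser`. The class, the `where_is()` helper and the three HTML snippets are hypothetical. The middle case is worth knowing about: some sites ship their data as JSON inside a `<script>` tag, which may be extractable without running a browser.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Separates text shown on the page from text inside <script>/<style> tags."""

    def __init__(self):
        super().__init__()
        self.visible, self.hidden = [], []
        self._in_code = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._in_code = True

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._in_code = False

    def handle_data(self, data):
        (self.hidden if self._in_code else self.visible).append(data)


def where_is(raw_html: str, text_on_screen: str) -> str:
    """Rough check: where does text you can see in the browser occur in the raw HTML?"""
    collector = TextCollector()
    collector.feed(raw_html)
    collector.close()
    if text_on_screen in " ".join(collector.visible):
        return "static"    # already in the HTML: Scrapy should be enough
    if text_on_screen in " ".join(collector.hidden):
        return "embedded"  # only inside a <script>, e.g. as JSON data
    return "dynamic"       # not there at all: probably fetched later by JavaScript


static_html = "<ul><li>Apples £1.20</li><li>Pears £0.90</li></ul>"
embedded_html = '<div id="app"></div><script>window.DATA = {"name": "Apples"}</script>'
dynamic_html = '<div id="products"></div><script src="/app.js"></script>'

print(where_is(static_html, "Apples"))    # static
print(where_is(embedded_html, "Apples"))  # embedded
print(where_is(dynamic_html, "Apples"))   # dynamic
```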

Web Scraping is Reverse Engineering

Reverse Engineering a Webpage

- When a developer builds a webpage: they write HTML to structure content and CSS to make it look good. They’re thinking about humans viewing the page in a browser.

- When you scrape that page: you’re working backwards. You inspect the HTML, figure out the patterns the developer used, and write code to extract the data they wrapped in those patterns.

The developer’s intent:

They used class="price" so CSS could style it green and bold, for example:

£2.50

Your intent:

You use that same class to find and extract the value.

The code inside the .css() method is a CSS selector that finds the element with the class price and extracts the text content of that element.

robots.txt: A gentleman’s agreement

Website owners can publish a file at /robots.txt that tells crawlers which parts of the site they’d prefer not to be scraped.

This is a request, not a technical barrier. Your scraper can ignore it. Whether you should ignore it is an ethical question.
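Python's standard library can read these rules for you. This is a minimal sketch using `urllib.robotparser`; the `robots.txt` content, the user-agent name `DS205-bot` and the shop URLs are all made up. `Crawl-delay` is a non-standard directive that some sites include. In real use you would call `set_url()` and `read()` to download a site's actual `/robots.txt` rather than parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt: everyone may crawl, except the checkout pages
robots_txt = """
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

print(parser.can_fetch("DS205-bot", "https://shop.example.com/products/apples"))  # True
print(parser.can_fetch("DS205-bot", "https://shop.example.com/checkout/basket"))  # False
print(parser.crawl_delay("DS205-bot"))  # 2
```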

Our approach in DS205

- We respect `robots.txt` where it exists

- We add delays between requests (don’t hammer servers)

- We scrape public data for educational purposes

- We don’t redistribute scraped data commercially

A polite scraper is less likely to get blocked.
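If you scrape with Scrapy, these habits map onto project settings. Below is a sketch of a polite `settings.py` fragment, assuming a standard Scrapy project. The setting names are Scrapy's own. The values, and the contact address in `USER_AGENT`, are illustrative choices rather than course requirements.

```python
# settings.py (fragment): a sketch of "polite" defaults for a Scrapy project

# Download /robots.txt first and skip any URL it disallows
ROBOTSTXT_OBEY = True

# Wait roughly this many seconds between requests to the same site
# (by default Scrapy randomises it between 0.5x and 1.5x of this value)
DOWNLOAD_DELAY = 2

# Send at most one request at a time to each domain
CONCURRENT_REQUESTS_PER_DOMAIN = 1

# Let Scrapy slow down further when the server responds slowly
AUTOTHROTTLE_ENABLED = True

# Identify your scraper honestly (hypothetical name and contact address)
USER_AGENT = "DS205-student-scraper (contact: you@example.com)"
```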

Why Web Scraping?

Data Collection at Scale

- Automated gathering of data from websites when developers do not provide APIs

- Ability to collect large volumes of data quickly and systematically

- Essential for research requiring comprehensive web data analysis

Benefits and Advantages

- Cost-effective alternative to manual data collection

- Reproducible and systematic data gathering process

- Real-time monitoring and updates of web content

- Ability to create unique datasets for competitive advantage

Real-World Applications

- Market Research

- Academic Research

- Financial Analysis

- Job Market Analysis

- News Monitoring

For more use cases, read Chapter 3 of Ryan Mitchell (2024). Web Scraping with Python (3rd ed.). O’Reilly Media, Inc. Icons are by Flat Icons - Flaticon

Did you say web scraping for job search?

Here is a tutorial by someone who used web scraping to search for jobs.

👤 In the same vein: a former DS205 student used web scraping to build a platform for finding entry-level finance jobs. I have invited him to come and share his experience with you (Week 07-08).

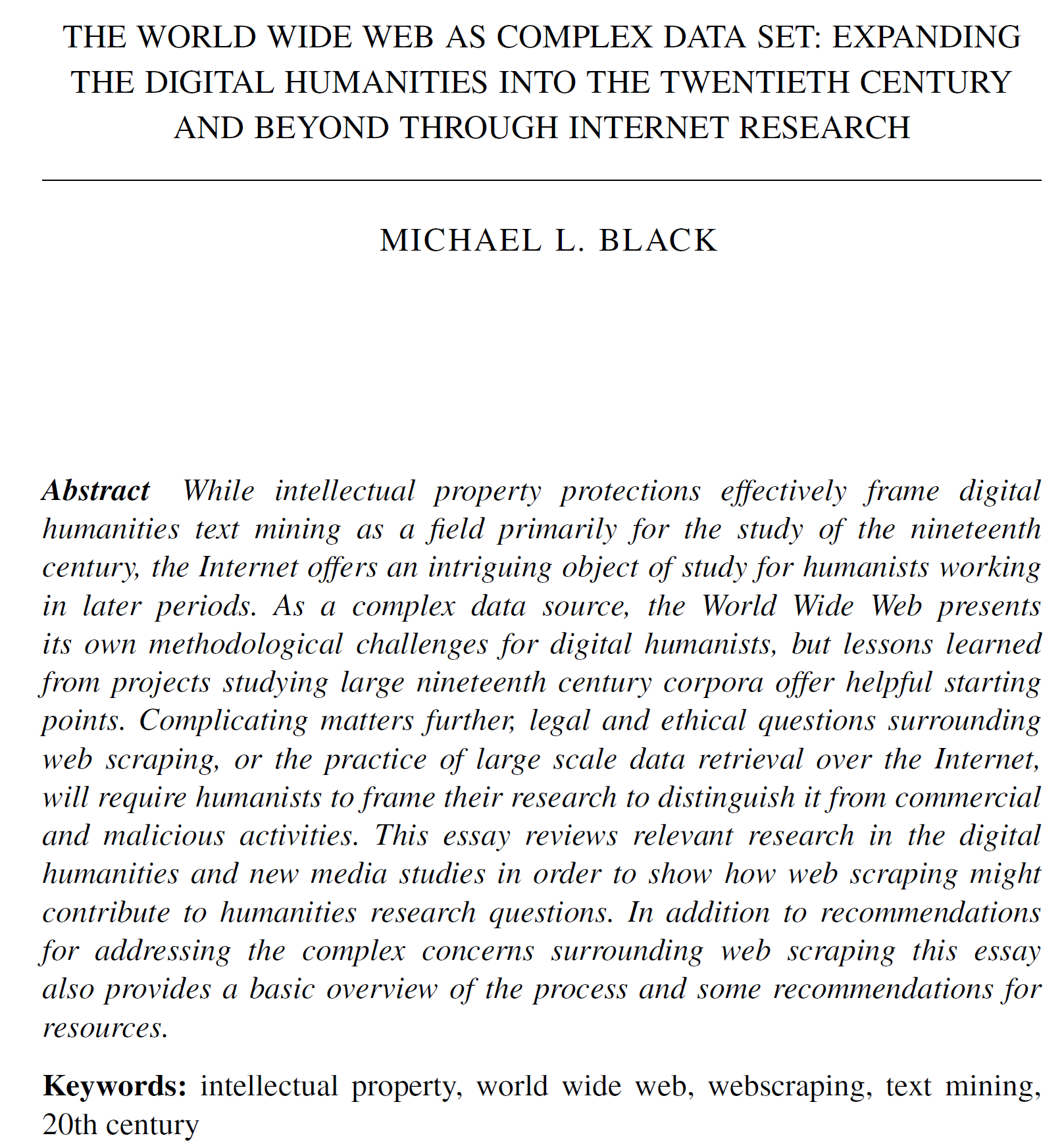

The Web as a Dataset

👈🏻 This paper from the field of digital humanities offers a valuable perspective on how to think about the Web for archival and research purposes.

Although the paper was published several years ago and originates outside data science, its core concept (viewing the Web as a structured dataset rather than an unorganized mass of information) matches our approach to web scraping in this course, and perhaps data science applications of web scraping more broadly.

Black, M.L. (2016). The World Wide Web as Complex Data Set: Expanding the Digital Humanities into the Twentieth Century and Beyond through Internet Research. Int. J. Humanit. Arts Comput., 10, 95-109.

Web Scraping in Practice

👨‍💻 I will be working on the W02-NB01-Lecture-Scrapy.ipynb notebook, available on Nuvolos and for download on the 🖥️ W02 Lecture page.

What’s Next?

This Week

💻 Tomorrow’s Lab

You’ll practise scraping a UK supermarket website using the techniques from today’s lecture.

The lab notebook includes instructions for setting up a conda environment with the packages you’ll need.

Come prepared to use both Scrapy and Selenium. Some websites require one, some require the other. Part of the skill is figuring out which.

✍️ Problem Set 1

Instructions released after tomorrow’s lab.

I won’t impose a particular file structure just yet. But as you start to write code for your first problem set this week, keep asking yourself:

“How easy would someone else find it to follow my code and understand my file organisation?”

That question will matter more than you might expect!

We will build a collective understanding of what we want our repositories to look like and how we want to organise our code.

Recommended Reading for this week

- Ryan Mitchell (2024). Web Scraping with Python (3rd ed.). O’Reilly Media, Inc. Chapters 1-3 and 8.

- Berners-Lee, T. (with Witt, S.). (2025). This is for everyone. Macmillan.


LSE DS205 (2025/26)