🗓️ Week 01

Welcome to the Course + Food Data Exploration

DS205 – Advanced Data Manipulation

19 Jan 2026

Welcome to DS205!

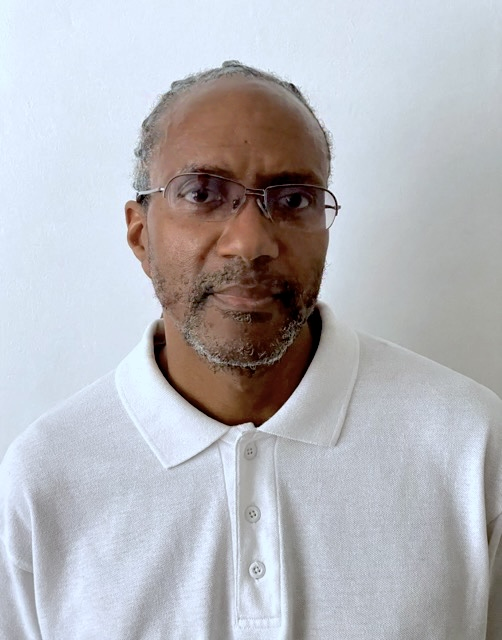

Course Lead

Current Focus:

- PhD in Computer Science

- Experienced in software engineering, data science and data engineering

- Leading DS105 and DS205 course development

- Investigating the impact of GenAI on higher education (GENIAL project)

Office Hours:

Wednesdays, 2:00 pm - 5:00 pm

Book via StudentHub

Recent Recognition:

LSESU Teaching Award for Feedback & Communication (2023)

Teaching Support

Administrative Support

Kevin Kittoe

Teaching & Assessment Administrator (DSI)


Contact Kevin (📧 DSI.ug@lse.ac.uk) for:

- Course access issues

- Assignment submissions

- Extension requests

- Administrative queries

Key Information:

- All extension requests must follow LSE’s extension policy

- Email response time: 24-48 hours on working days

- Include ‘[DS205]’ in email subject lines

What can you expect to learn in DS205?

Why this course exists:

- To incorporate more advanced concepts that could not fit in DS105.

- To build production-ready solutions for real-world problems.

- To prepare you for professional-level software development for data manipulation.

🥅 Intended Learning Outcomes

By the end of this course, you should be able to:

- Use appropriate Python packages (numpy, scipy, pandas, etc.) to automate and optimise data cleaning and data processing workflows.

- Build APIs using FastAPI for structured data retrieval.

- Develop scalable web scraping workflows with the Scrapy and Selenium packages.

- Proficiently use GitHub workflows for professional collaboration and version control.

- Apply NLP techniques for retrieval of information from text data.

- Apply appropriate pre-trained deep learning models from the HuggingFace library to unstructured data.

- Collaborate effectively on shared codebases and contribute to projects with a real-world impact.

✍️ Assessment Structure

| Weight | Type | Assessment | Timeline |
|---|---|---|---|
| 20% | Individual | ✍️ Problem Set 1: Web Scraping & API Development | Release: Week 02. Due: ⏰ Week 06 (4 weeks!) |
| 40% | Individual | ✍️ Problem Set 2: RAG System Implementation | Release: ~Week 07. Due: ⏰ Week 10 (~20 days) |
| 40% | Group Work | 👥 Final Project: RAG System Development 📦 a real capstone project you will develop with/for the TPI Centre | Release: Week 11. Due: ⏰ 21 May 2026 (~2 months!) |

Build as you learn: In the 💻 Weekly Labs from W02-W04, your goal will be to apply what you learned in the Monday lecture to a different data source.

💼 What’s at Stake When You Write Code?

Think of your code as a ‘public good’ rather than a personal project.

Throughout this course, you’ll write code knowing that someone else will need to understand it, use it, and build upon it. This mirrors real-world development of data products and data pipelines, where code is read far more often than it is written.

Aim for code that is:

- Neat and concise

- Self-explanatory

- Well-documented (when not self-explanatory)
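As a small illustration of these three qualities, here is a sketch of the kind of function a peer could pick up without asking you questions. The function name and the input list are hypothetical; the only outside fact it relies on is that NOVA group 4 denotes ultra-processed food.

```python
from typing import Optional

ULTRA_PROCESSED_NOVA_GROUP = 4  # NOVA group 4 = ultra-processed food


def share_ultra_processed(nova_groups: list[Optional[int]]) -> float:
    """Return the fraction of classified products that are ultra-processed.

    Products with a missing NOVA group (None) are ignored, because we
    cannot tell which group they belong to.
    """
    classified = [group for group in nova_groups if group is not None]
    if not classified:
        return 0.0  # nothing to classify, so avoid dividing by zero
    ultra_processed = [g for g in classified if g == ULTRA_PROCESSED_NOVA_GROUP]
    return len(ultra_processed) / len(classified)
```

The named constant and the descriptive variable names carry most of the meaning; the docstring only documents the one decision that is not obvious from the code (how missing values are treated).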

All of your assignments (even individual ones) will have a peer component

You’ll experience this cycle repeatedly:

- You will write code for yourself, knowing an unknown peer will inherit it (I’ll randomly allocate pairs)

- You will inherit code from a peer and complete their work

- You will learn in practice what makes code maintainable through direct experience

- We write and update a CODE_OF_CONDUCT throughout the course to absorb these lessons and make them explicit.

What kind of data will we be working with?

This year, we’re exploring two thematic arcs:

🥗 Weeks 01–05: Real Food vs Ultra-Processed Food

- Start with familiar, accessible data from an API (Open Food Facts)

- Collect unstructured data from the Web (web scraping principles)

- Write data pipelines that are reproducible and well-documented.

🌍 Weeks 07–11: Food Producers’ Corporate Sustainability Assessments

- Progress to highly unstructured data (corporate statements, reports, etc.)

- Extract relevant information from unstructured data using NLP techniques

- Work with data used and produced by our collaborators at the TPI Centre.

Pre-Requisites

To take this course, you must have taken DS105, ST101, EC1B1 or equivalent (for General Course students)

I expect you to already know:

- Python data types (int, float, str, bool, list, dict)

- Python collections (list, dict)

- Flow control (if, for, while)

- Custom functions (def)

- Essential data manipulation with pandas (indexing, slicing, filtering, grouping, aggregating, some reshaping)

- How to use the Terminal/Command Line

- How to use Jupyter Notebooks

What would be great if you already knew:

(but we will cover them in the course)

- The requests package to collect data from the Internet

- The essentials of Git/GitHub for version control (repository, commit, push, pull, clone, branch, merge, some conflict resolution, etc.)

📑 Key Information

📟 Communication

Slack is our main point of contact. The invitation link will be available on Moodle.

- 📧 Email: Reserved for formal requests (extensions, appeals)

- 👥 Office Hours: Book via StudentHub

- 🆘 Drop-in Support: See course website for details

Don’t like your laptop for coding?

We have a dedicated cloud environment on Nuvolos.

Visit the Nuvolos - First Time Access guide to learn how to get access to the DS205 environment.

Read the syllabus for week-by-week information on how we will cover the course content and assessments.

Coffee Break ☕


After the break:

- An exploratory data analysis (EDA) of food data

- Systematic data inspection (live coding demo)

- Setting up a GitHub repository for your course notes

- How we will use “vibe coding” tools in this course

- What awaits you in the 💻 W01 lab

Setting up your coding environment

We will be writing a lot of code throughout this course and we will be using VS Code and Jupyter notebooks for most of our work. We have a dedicated Nuvolos workspace for you to use. However, if you prefer to work locally on your own machine, you will have to make sure you have a few tools installed.

Development Environment

You have two options for your coding environment:

Option 1: Nuvolos Platform

(Strongly recommended)

🎯 ACTION POINT:

Access our Nuvolos - First Time guide.

This cloud environment comes pre-configured with all required tools. It also includes an AI code editor: GitHub Copilot (similar to OpenAI’s Codex, Cursor, Claude Code, and Google’s Antigravity).

Option 2: Local Setup

(Prone to bugs we won’t be able to help with)

You will need to install the following tools:

- GitHub CLI (command line interface) or GitHub Desktop (graphical user interface)

- Git (installed and configured)

Initial dependencies for the course:

📁 GitHub Repository Setup

Why Version Control Matters

Establishing good habits early prepares you for:

- Collaborative Problem Sets (peer collaboration model)

- Professional software development workflows

- Tracking your learning progress

- Organised course notes and reflections

Your Personal Repository

Create a repository for course notes:

💡 TIP: Try to use Markdown to write your notes. Choose a file naming convention that works for you. Here are two possible examples:

ds205-notes/

├── README.md

├── week01/

│ ├── lecture-notes.md

│ ├── lab-reflections.md

│ └── questions.md

├── week02/

└── [subsequent weeks]

ds205-notes/

├── README.md

├── w01-lecture-notes.md

├── w01-lab-reflections.md

├── w01-questions.md

├── w02-lecture-notes.md

├── w02-lab-reflections.md

├── w02-questions.md

└── [subsequent weeks]

Getting Started

- Create repository on GitHub (private recommended)

- Clone locally: git clone https://github.com/YOUR_USERNAME/ds205-notes.git

- Create folder structure

- Initial commit and push
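The "create folder structure" step can be scripted rather than done by hand. The sketch below builds the first (one folder per week) layout with the standard library only. The function name and the default of 11 weeks are assumptions made for illustration; adjust them to your own convention.

```python
from pathlib import Path

# File names taken from the first example layout above.
NOTE_FILES = ["lecture-notes.md", "lab-reflections.md", "questions.md"]


def create_notes_structure(repo_root: Path, n_weeks: int = 11) -> list[Path]:
    """Create README.md plus weekNN/ folders with empty Markdown note files.

    Existing files are left untouched. Returns the paths that were created.
    """
    created: list[Path] = []
    repo_root.mkdir(parents=True, exist_ok=True)

    readme = repo_root / "README.md"
    if not readme.exists():
        readme.write_text("# DS205 course notes\n", encoding="utf-8")
        created.append(readme)

    for week in range(1, n_weeks + 1):
        week_dir = repo_root / f"week{week:02d}"  # week01, week02, ...
        week_dir.mkdir(exist_ok=True)
        for name in NOTE_FILES:
            note = week_dir / name
            if not note.exists():  # never overwrite notes you already wrote
                note.touch()
                created.append(note)
    return created
```

Calling `create_notes_structure(Path("ds205-notes"))` from the folder that contains your clone fills in the structure; the initial commit and push then follow as usual.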

👨🏻‍💻 Live Coding

The Notebook

We’ll work through: W01-NB01-Lecture-Open-Food.ipynb (find it on Nuvolos). Today’s demonstration shows the complete pipeline from data collection to interactive visualisation:

- Data Collection: Collect 1000+ bread products from the Open Food Facts API

- Systematic Inspection: Best practices when inspecting unfamiliar data

- Feature Engineering: Transform nested JSON to analysis-ready format

- Advanced Analysis: UMAP dimensionality reduction reveals nutritional patterns

- Interactive Visualisation: Plotly visualisation with NOVA classification
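To make the feature engineering step concrete, here is a minimal sketch of turning one nested product record into a flat row. It is not the notebook's code: the function name, the sample record and the choice of nutrient keys are assumptions for illustration, and the field names (`nutriments`, `nova_group`, keys ending in `_100g`) should be checked against the data you actually collect.

```python
from typing import Any

# Hypothetical selection of per-100g nutrient keys; adjust after inspecting the data.
NUTRIENT_KEYS = ["energy-kcal_100g", "sugars_100g", "salt_100g", "proteins_100g"]


def flatten_product(product: dict[str, Any]) -> dict[str, Any]:
    """Turn one nested product record into a flat row (one value per column)."""
    nutriments = product.get("nutriments") or {}  # tolerate a missing or null block
    row = {
        "code": product.get("code"),
        "product_name": product.get("product_name"),
        "nova_group": product.get("nova_group"),
    }
    for key in NUTRIENT_KEYS:
        # Missing nutrients become None instead of raising a KeyError.
        row[key] = nutriments.get(key)
    return row


# Made-up record shaped like an API response, for demonstration only.
sample_product = {
    "code": "0000000000000",
    "product_name": "Example wholemeal loaf",
    "nova_group": 3,
    "nutriments": {"energy-kcal_100g": 240, "sugars_100g": 3.2, "salt_100g": 1.0},
}

rows = [flatten_product(sample_product)]
```

A list of flat dictionaries like `rows` can be passed straight to `pandas.DataFrame(rows)` to get an analysis-ready table.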

NOTE: I don’t expect you to already know what UMAP is (we’ll talk about it in Week 08) but I expect you to be able to understand most of the code I’ll show.

Pay close attention when I’m demonstrating and take note of any code snippets or Python concepts that you don’t understand.

What You’ll See

- How pagination works for large datasets

- Systematic data inspection methodology

- Feature extraction from nested structures

- Preview of Week 08+ techniques (dimensionality reduction)

- Interactive visualisations
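For the pagination point in the list above, the core idea is a loop that requests page 1, 2, 3, ... until enough records are collected or the source runs dry. The sketch below separates that loop from the network call so it can be run offline. The endpoint URL and query parameters in `fetch_bread_page` are my assumptions about the Open Food Facts search API, not taken from the notebook; verify them against the official documentation before use.

```python
from typing import Any, Callable

Page = dict[str, Any]


def collect_products(
    fetch_page: Callable[[int], Page], target: int, max_pages: int = 50
) -> list[dict[str, Any]]:
    """Fetch pages in order until `target` products are collected or a page is empty."""
    products: list[dict[str, Any]] = []
    for page_number in range(1, max_pages + 1):
        batch = fetch_page(page_number).get("products", [])
        if not batch:  # an empty page means there is nothing left to collect
            break
        products.extend(batch)
        if len(products) >= target:
            break
    return products[:target]


def fetch_bread_page(page_number: int, page_size: int = 100) -> Page:
    """ASSUMED Open Food Facts search call; check the API docs before relying on it."""
    import requests  # imported here so the offline demo below needs no network

    response = requests.get(
        "https://world.openfoodfacts.org/api/v2/search",
        params={"categories_tags_en": "breads", "page": page_number, "page_size": page_size},
        timeout=30,
    )
    response.raise_for_status()
    return response.json()


# Offline stand-in for the API: 250 fake products served 100 per page.
FAKE_CATALOGUE = [{"code": str(i)} for i in range(250)]


def fake_fetch(page_number: int, page_size: int = 100) -> Page:
    start = (page_number - 1) * page_size
    return {"products": FAKE_CATALOGUE[start:start + page_size]}


collected = collect_products(fake_fetch, target=1000)
```

With the real API you would call `collect_products(fetch_bread_page, target=1000)`. The `max_pages` cap is a safety net so that a bug or an unexpected response cannot turn the loop into an endless stream of requests.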

I will skip a few details because they will be covered in the lab tomorrow.

Preparing for the Lab

What Awaits You

- Hands-on practice with Open Food Facts API

- Systematic data inspection exercises

- DataFrame manipulation and analysis

- Visualisation with seaborn
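One simple form of systematic inspection is to ask, for every field, how often it is actually filled in. The sketch below does this on plain dictionaries; the function name and the sample records are made up for illustration and are not the lab's exercises. Once the data is in a DataFrame, `df.info()` and `df.isna().mean()` answer similar questions.

```python
from typing import Any


def field_coverage(records: list[dict[str, Any]]) -> dict[str, float]:
    """Return, per field, the share of records where it is present and non-empty."""
    filled: dict[str, int] = {}
    for record in records:
        for key, value in record.items():
            filled.setdefault(key, 0)  # register the field even if it is empty here
            if value not in (None, ""):
                filled[key] += 1
    return {key: filled[key] / len(records) for key in sorted(filled)}


# Made-up records with typical problems: missing keys, None and empty strings.
sample_records = [
    {"product_name": "Sourdough loaf", "nova_group": 3, "brands": ""},
    {"product_name": "White sliced bread", "nova_group": None},
    {"product_name": "Rye bread", "nova_group": 1, "brands": "Hypothetical Bakery"},
    {"nova_group": 4},
]

coverage = field_coverage(sample_records)
```

Fields with low coverage are the ones to look at before any analysis: decide whether to drop them, fill them in, or restrict the analysis to the records that have them.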

Questions?

- Reach out on Slack if you need help!

- Book office hours with me (on StudentHub) if you are unsure of your Python skills.


LSE DS205 (2025/26)