🗓️ Week 04

Introduction to Web Scraping

DS205 – Advanced Data Manipulation

10 Feb 2025

1️⃣ Introduction to Web Scraping

10:03 – 10:15

Why Web Scraping?

Data Collection at Scale

- Automated gathering of data from websites when developers do not provide APIs

- Ability to collect large volumes of data quickly and systematically

- Essential for research requiring comprehensive web data analysis

Benefits and Advantages

- Cost-effective alternative to manual data collection

- Reproducible and systematic data gathering process

- Real-time monitoring and updates of web content

- Ability to create unique datasets for competitive advantage

Real-World Applications

- Market Research

- Academic Research

- Financial Analysis

- Job Market Analysis

- News Monitoring

Icons by Flat Icons - Flaticon

Did you say web scraping for job search?

The Web as a Dataset

👈🏻 This paper from the field of digital humanities offers a valuable perspective on how to think about the Web for archival and research purposes.

While published several years ago and originating outside data science, its core concept (viewing the Web as a structured dataset rather than an unorganized mass of information) matches our approach to web scraping in this course and perhaps data science applications of web scraping more broadly.

Black, M.L. (2016). The World Wide Web as Complex Data Set: Expanding the Digital Humanities into the Twentieth Century and Beyond through Internet Research. Int. J. Humanit. Arts Comput., 10, 95-109.

A Taxonomy of What you Find on the Web

| Strata | Definition | Example(s) |
|---|---|---|
| Web Element | The most basic components of the web, considered either from the user’s perspective or in the encoded markup languages used by browsers to render the web | Images, text, tables, buttons, hyperlinks, and their representations in HTML/CSS |
| Web Object | A combination of web elements nested in a container element that functions as a single component of a larger document | Comment box, tweet, submission form, browser-based game, JavaScript app, news feed |
| Web Page | A collection of web elements and/or objects presented to users as a complete document | Blog entry, journal article, Wikipedia entry |
| Web Site | A collection of web pages representing an online publication, resource, community, organisation, company, university, or data repository | adho.org, theguardian.com, illinois.edu, fsf.org, data.gov |
| Web Sphere | Web activity related to an abstract theme or event that spans multiple sites and/or genres of literate activity | Social media, blogging, memes, viral videos, online journalism, political campaigns, online activism |
| Web as a Whole | Concepts that ‘transcend’ the web itself, including the technological infrastructure that supports the web | Digital media theory, histories of the Internet, new media ecology, web programming languages, Internet protocols (TCP/IP) |

Adapted from Black (2016).

2️⃣ How Websites are Structured

10:15 – 10:40

Let’s take a look at the building blocks of Web Elements.

- HTML

- CSS

- The notion of the Document Object Model (DOM)

HTML (HyperText Markup Language)

- HTML is the standard markup language for creating web pages.

- It defines the structure of a web page, that is, how the content is organised.

Here’s what a simple HTML document looks like:

How your browser renders the HTML

- The browser reads the HTML document from top to bottom

- It creates a tree-like structure called the DOM (Document Object Model)

- Each HTML element becomes a node in this tree

- The browser then:
  - Parses the HTML structure
  - Applies any CSS styling
  - Executes JavaScript (if any)
  - Renders the final webpage visually
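To make the tree idea concrete, here is a toy sketch in Python (the language we use in the labs). It relies only on the standard library's html.parser to turn an HTML string into nested nodes, one per element. The TreeBuilder class, the dictionary node format and the sample page are our own illustrative choices; browsers use the far more forgiving HTML5 parsing algorithm, so treat this as a model of the idea rather than of what Chrome or Firefox actually do.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they cannot contain children
# (a partial list, enough for this sketch)
VOID_TAGS = {"img", "br", "hr", "input", "meta", "link"}


class TreeBuilder(HTMLParser):
    """Toy DOM builder: each element becomes a dict node with a list of children."""

    def __init__(self):
        super().__init__()
        self.root = {"tag": "#document", "attrs": {}, "children": []}
        self.stack = [self.root]  # path from the root to the currently open element

    def handle_starttag(self, tag, attrs):
        node = {"tag": tag, "attrs": dict(attrs), "children": []}
        self.stack[-1]["children"].append(node)  # attach to the current parent
        if tag not in VOID_TAGS:
            self.stack.append(node)  # later content goes inside this node

    def handle_endtag(self, tag):
        # Close the most recently opened element with this tag name
        for i in range(len(self.stack) - 1, 0, -1):
            if self.stack[i]["tag"] == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        if data.strip():  # ignore whitespace between tags
            self.stack[-1]["children"].append(data.strip())


def print_tree(node, depth=0):
    """Print one node per line, indented by its depth in the tree."""
    if isinstance(node, str):
        print("  " * depth + repr(node))
        return
    print("  " * depth + node["tag"])
    for child in node["children"]:
        print_tree(child, depth + 1)


html_doc = """<html>
  <head><title>My first page</title></head>
  <body>
    <h1>Hello, DS205!</h1>
    <p>This is a <b>simple</b> paragraph.</p>
  </body>
</html>"""

builder = TreeBuilder()
builder.feed(html_doc)
builder.close()
print_tree(builder.root)
```

Running it prints the html node with head and body indented beneath it, mirroring the nesting you see in the browser's Inspector.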

🖥️ Live Demo: Watch me as I render that simple HTML document in my browser and show you the DOM (right-click on the page and select “Inspect” to see the DOM)

HTML Building Blocks

Each element is defined by a start tag (e.g., <p>) and usually an end tag (e.g., </p>). Elements can contain text, other elements, or both.

Some key HTML elements include:

- Headings (<h1> to <h6>)

- Paragraphs (<p>)

- Links (<a>)

- Images (<img>)

- Lists (<ul>, <ol>, <li>)

- Tables (<table>, <tr>, <td>)

- Forms (<form>, <input>, <button>)

HTML Elements (Headings)

Headings are used to demarcate the hierarchy of the content on a page.

Becomes:

- Main Heading

1.1 Subheading

1.1.1 Sub-subheading

HTML Elements (Text)

Here are some of the most common HTML elements for representing text.

<p>This is a paragraph.</p>

<b>This is bold text.</b>

<i>This is italic text.</i>

<a href="https://lse.ac.uk">This is a link.</a>

HTML Elements (Lists)

Lists are used to present ordered or unordered collections of items.

HTML Elements (Other inline elements)

Images are represented by the <img> tag.


Links are represented by the <a> tag (anchor).


HTML Elements (Divs)

You will inevitably encounter HTML elements that do not have a specific semantic meaning. This is the case for the <div> element. It exists to group elements together and style them as a unit. Think of it as a box that can contain other elements.

Becomes:

This is a paragraph.

This is another paragraph.

(I highlighted the <div> element in blue to make it easier to see. In practice, you would not see the border.)

HTML Elements (Spans)

The <span> element is used to style a specific part of some text. It is a generic inline container for phrasing content, which does not inherently represent anything. It can be used to group elements for styling purposes (this will make sense in a moment).

<p>In this paragraph, we have <span style="color: #ED1C24;">this span</span>
and <span style="color: #0C79A2;">this span</span>.</p>

Becomes:

In this paragraph, we have this span and this span.

CSS (Cascading Style Sheets)

CSS is the language we use to style the HTML elements.

- Change the color of the text

- Change the size of the text

- Change the background color of the text

- Change the font of the text

- And more! (it’s not just about text)

CSS works by selecting the HTML elements you want to style and then applying the styles to those elements.

💡 THIS WILL BE VERY IMPORTANT FOR WEB SCRAPING

How CSS works (All matching elements)

We can apply CSS to all matching elements at once:

This would render all <h1> elements in blue.

How CSS works (Specific elements)

We can apply CSS to specific elements by selecting them by their id or class:

Here, #special is the id of the element we want to style. Only the element with this id will be styled. Also, only one element can have a given id.

Example of an id

The source:

The result:

This is a regular paragraph.

This is a special paragraph.

How CSS works (Classes)

Another way to style elements is by using classes. Classes can be applied to multiple elements in the same document.

In CSS, the . prefix is used to select elements by their class, while in HTML, the class attribute is used to assign one or more classes to an element.

Example of a class

The source:

<p>Nothing special here.</p>

<p class="special">I am special!</p>

<p>I contain a <span class="special">special something</span>!</p>

The result:

Nothing special here.

I am special!

I contain a special something!
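Scrapers reuse exactly these selectors to locate data. The toy sketch below, again built only on Python's html.parser, understands just the simple forms shown on these slides (p, #special, .special) plus tag.class. The helpers select and matches are hypothetical names of ours, and the last paragraph (id="special") borrows the text of the id example, whose source is not shown here.

```python
from html.parser import HTMLParser

VOID_TAGS = {"img", "br", "hr", "input", "meta", "link"}  # never closed, so never "open"


class ElementCollector(HTMLParser):
    """Records each element's tag, id, classes and all the text nested inside it."""

    def __init__(self):
        super().__init__()
        self.elements = []  # every element, in document order
        self.open = []      # elements whose closing tag we have not seen yet

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        element = {
            "tag": tag,
            "id": attrs.get("id"),
            "classes": (attrs.get("class") or "").split(),  # class="a b" -> ["a", "b"]
            "text": "",
        }
        self.elements.append(element)
        if tag not in VOID_TAGS:
            self.open.append(element)

    def handle_endtag(self, tag):
        # Close the most recently opened element with this tag name
        for i in range(len(self.open) - 1, -1, -1):
            if self.open[i]["tag"] == tag:
                del self.open[i:]
                break

    def handle_data(self, data):
        for element in self.open:  # text belongs to every enclosing element
            element["text"] += data


def matches(element, selector):
    """Simple selectors only: 'p', '#special', '.special' or 'p.special'."""
    if selector.startswith("#"):
        return element["id"] == selector[1:]
    tag, _, cls = selector.partition(".")
    return (not tag or element["tag"] == tag) and (not cls or cls in element["classes"])


def select(html, selector):
    """Return the text of every element matching `selector`, in document order."""
    collector = ElementCollector()
    collector.feed(html)
    collector.close()
    return [el["text"].strip() for el in collector.elements if matches(el, selector)]


PAGE = """
<p>Nothing special here.</p>
<p class="special">I am special!</p>
<p>I contain a <span class="special">special something</span>!</p>
<p id="special">This is a special paragraph.</p>
"""

print(select(PAGE, ".special"))      # ['I am special!', 'special something']
print(select(PAGE, "#special"))      # ['This is a special paragraph.']
print(select(PAGE, "span.special"))  # ['special something']
```

In practice you would not write this yourself: libraries such as BeautifulSoup (soup.select(...)) and Scrapy (response.css(...)) implement the full CSS selector syntax.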

How CSS works (inline styles)

We can also apply CSS to specific elements by using inline styles.

<div style="color: #ED1C24; border: 1px solid #ED1C24; padding: 0.5em; border-radius: 0.5em;">
  <p>I am inside a very unique div!</p>
  <p style="color: #0C79A2;">I override the div's color!</p>
</div>

Here, the style attribute is used directly on the element.

The result:

I am inside a very unique div!

I override the div’s color!

The Document Object Model (DOM)

The DOM is a tree structure that represents the HTML elements of a web page.

It’s fun to modify the DOM to change the appearance of the page! (I will demonstrate)

🧐 This proves that screenshots are not necessarily proof of anything.

So What?!

Web scraping is essentially a reverse-engineering process: you pay attention to how the web developers designed, structured and styled the website, and then you use that knowledge to extract the data you need.

3️⃣ Selecting elements by their HTML and CSS

10:40 – 11:00

I will show you how to use CSS selectors directly in the browser to select the elements you want to scrape. I will use the Inspector to do this. You can do that with any browser.

🖥️ Live Demo

🍵 Quick Coffee Break

11:00 – 11:10

After the break:

- Can we scrape anything we want from the Web? Should we? Legal and ethical considerations

- How AI companies ignore all of this and scrape everything anyway

- How to actually scrape a website in Python (sneak peek of 💻 W04 Lab)

4️⃣ Ethical and Industry Perspectives

11:10 – 11:45

Can we scrape anything we want? Should we?

Legal Framework

The General Data Protection Regulation (GDPR) and the California Consumer Privacy Act of 2018 (“CCPA”) enforce privacy rights. You can’t freely scrape personally identifiable information (PII) from the Web without a lawful basis, such as consent.

- Things like names, email addresses, phone numbers, etc.

The EU AI Act brings more stringent requirements specifically for AI systems.

Is what you are scraping copyrighted? It might be protected by the Digital Millennium Copyright Act (“DMCA”).

Regulation is patchier in the US.

If you want to make a business out of web scraping, consult a lawyer. This is not my field of expertise!

A Framework for Web Scraping Ethics

| Access Type | Retrieval Type | Definition | Recommendation |
|---|---|---|---|
| Hard Open | Explicitly supported | Explicitly allows for automated/programmatic retrieval by including a data API to facilitate access | Follow any guidelines and restrictions described in the API’s documentation pages |
| Soft Open | Informally permitted | Does not include a data API but could support screen scraping. No page on the site explicitly states that web scraping is forbidden | Look for robots.txt and follow any guidelines defined therein. If no robots.txt is provided, follow your best judgment about which parts of the site should be excluded. Use a safe retrieval rate |
| Soft Closed | Potentially malicious | Does not password protect content or specify clear restrictions in robots.txt but explicitly states on a ‘Terms of use’ page that web scraping is forbidden or restricted | The site owner may not have considered academic research when developing a web scraping policy. Contact the owner and ask for permission. Alternatively, check for copies of the website in a web archiving project that supports automated retrieval like the Internet Archive |
| Hard Closed | Potentially malicious | Content is password protected or subject to some other security check | Exclude from project. Some services that host password protected data, like JSTOR, may be willing to make it available to researchers through a security agreement |

Adapted from Black (2016).

Technical Controls

There are a few things you can do, as a coder, if you want to make your web scraping more compliant:

- Check the Terms of Service of the website you are scraping.

- Check the robots.txt file to see if the website allows scraping (more on this in the next slide).

- Impose rate limits on your requests. Sleep between requests.

- Use a User-Agent string to identify yourself.
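A minimal sketch of the last two controls using Python's standard urllib.request: every request carries a descriptive User-Agent header, and consecutive requests are separated by a fixed pause. The bot name, contact address, 2-second delay and 10-second timeout are assumptions to adapt to each site's own rules.

```python
import time
import urllib.request

# Hypothetical identity: replace with your own project name and contact email
USER_AGENT = "DS205-student-scraper/0.1 (contact: student@example.com)"


def make_request(url):
    """Build (but do not send) a GET request that identifies who is scraping."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def polite_fetch_all(urls, delay_seconds=2.0, opener=urllib.request.urlopen, sleep=time.sleep):
    """Fetch pages one at a time, pausing `delay_seconds` between consecutive requests.

    `opener` and `sleep` are parameters only so the function can be tested offline.
    """
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            sleep(delay_seconds)  # simple rate limit: never hit the server back-to-back
        with opener(make_request(url), timeout=10) as response:
            pages.append(response.read().decode("utf-8", errors="replace"))
    return pages


request = make_request("https://www.example.com/page-1")
print(request.get_header("User-agent"))  # urllib stores header names capitalised this way
```

In real use you would leave opener and sleep at their defaults; they are injectable only so the logic can be checked without touching the network.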

👉🏻 Although not technically web scraping, take a look at Wikimedia’s API Etiquette for a good example of how to do this. We all love our free Wikipedia; we don’t want to mistreat it!

Sample robots.txt file

This is what a robots.txt file looks like (with explanations):

The above means that the * user agent (any bot or crawler) is allowed to scrape everything except the /ecommerce/basket and /admin/ paths. It also sets a crawl delay of 10 seconds: if the bot wants to scrape more than one page, it should wait 10 seconds between requests.
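The file being described is not reproduced here, so this sketch rebuilds it from the description (the exact lines are an assumption) and checks it with Python's standard urllib.robotparser. The agent name and base URL are hypothetical; against a live site you would call set_url(...) and read() instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Rebuilt from the description above (assumed, not the original file)
ROBOTS_TXT = """\
User-agent: *
Disallow: /ecommerce/basket
Disallow: /admin/
Crawl-delay: 10
"""

AGENT = "DS205-student-scraper"       # hypothetical bot name
BASE_URL = "https://www.example.com"  # hypothetical site

parser = RobotFileParser()
# For a live site: parser.set_url(BASE_URL + "/robots.txt"); parser.read()
parser.parse(ROBOTS_TXT.splitlines())


def plan_crawl(paths):
    """Keep only the paths robots.txt allows, and report how long to wait between requests."""
    allowed = [p for p in paths if parser.can_fetch(AGENT, BASE_URL + p)]
    delay = parser.crawl_delay(AGENT)  # None if the file sets no Crawl-delay
    return allowed, delay


allowed, delay = plan_crawl(["/products/1", "/ecommerce/basket", "/admin/login", "/about"])
print(allowed)  # ['/products/1', '/about']
print(delay)    # 10
```

The returned delay is what you would pass to time.sleep between consecutive requests.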

A robots.txt to restrict certain bots

# An example robots.txt file
User-agent: Googlebot
Allow: /

User-agent: ChatGPT-User
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /secret/

In this example, Googlebot is allowed to crawl all URLs on the website, ChatGPT-User and GPTBot are disallowed from crawling any URLs, and all other crawlers are disallowed from crawling URLs under the /secret/ directory.
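The same standard-library parser can check this example for several crawlers. The three bot names come from the example; DS205-student-scraper and the URLs are hypothetical. Note that urllib.robotparser matches user agents with a case-insensitive substring test, and other crawlers may interpret groups slightly differently.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
# An example robots.txt file
User-agent: Googlebot
Allow: /

User-agent: ChatGPT-User
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /secret/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

BASE_URL = "https://www.example.com"  # hypothetical site
for agent in ["Googlebot", "ChatGPT-User", "GPTBot", "DS205-student-scraper"]:
    # Ask the parser whether this agent may fetch each path
    verdicts = {path: parser.can_fetch(agent, BASE_URL + path)
                for path in ["/index.html", "/secret/notes.html"]}
    print(f"{agent:<22} {verdicts}")
```

Googlebot may fetch both paths, both OpenAI agents are refused everything, and the hypothetical scraper is refused only /secret/. The parser merely reports these rules; nothing forces a crawler to obey them.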

This example was taken from:

Liu, E., Luo, E., Shan, S., Voelker, G. M., Zhao, B. Y., & Savage, S. (2024). Somesite I Used To Crawl: Awareness, Agency and Efficacy in Protecting Content Creators From AI Crawlers. arXiv preprint arXiv:2411.15091.

Another thing about robots.txt

They are not enforceable: a robots.txt file is a request, not a legal contract.

AI companies have been ignoring them anyway.

Case for Discussion I: News Publishers v. AI Companies

The Controversy

- Perplexity AI accused of “content theft”

- Summarized Forbes articles without clear attribution

- Part of broader trend (NYT v. OpenAI, etc.)

Key Questions for Discussion

- Is transformation of content enough to justify its use?

- Does driving traffic back justify using content?

- How can small publishers compete if AI can freely redistribute their work?

Read the two Forbes articles about it:

- Sarah Emerson (June 7, 2024). Buzzy AI Search Engine Perplexity Is Directly Ripping Off Content From News Outlets. Forbes.com. (login with your LSE email)

- Randall Lane (June 11, 2024). Why Perplexity’s Cynical Theft Represents Everything That Could Go Wrong With AI. Forbes.com. (free access)

Example of a similar case (NY Times v. OpenAI)

‘The New York Times’ takes OpenAI to court. ChatGPT’s future could be on the line. NPR, January 14, 2025. By Bobby Allyn.

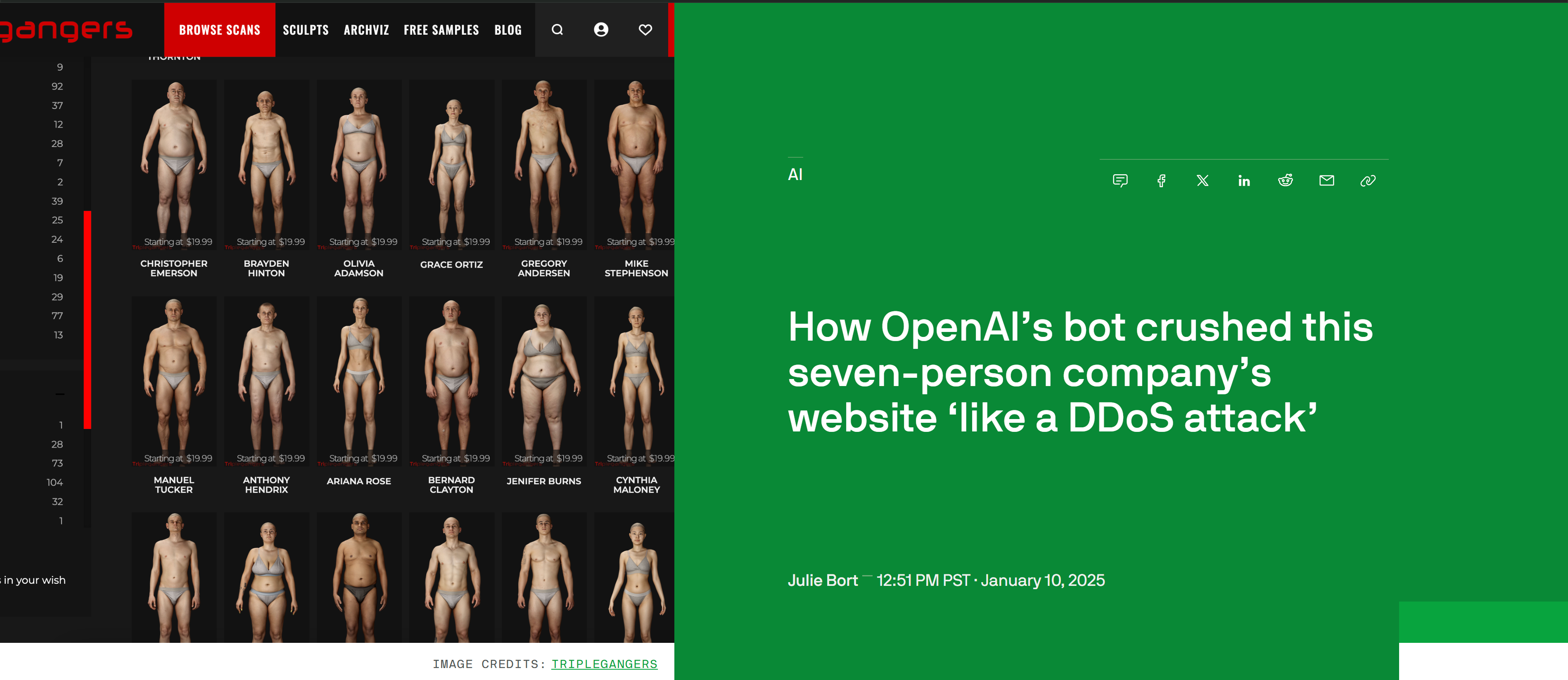

Case for Discussion II: Small Websites v. AI Companies

The Incident

- OpenAI’s bot overwhelmed a small company’s website

- Unintentional but devastating impact

- Highlighted power imbalance in web scraping

Discussion Points

- Should AI companies be liable for scraping damage?

- Is it fair for small sites to shoulder the burden of protecting themselves?

- How can we balance AI training needs with website stability?

Read the article:

“How OpenAI’s bot crushed this seven-person company’s website ‘like a DDoS attack’” TechCrunch, January 10, 2025. By Julie Bort.

Industry Responses to AI-Powered Data Collection

- Twitter/X introduced massive API restrictions once ChatGPT became popular

Ivan Mehta (March 29, 2023). Twitter announces new API with only free, basic and enterprise levels. TechCrunch. (free to access)

- Reddit introduced a new API in 2023 that limits the amount of data you can scrape

Mike Isaac (April 20, 2023). Reddit Wants to Get Paid for Helping to Teach Big A.I. Systems. The New York Times - International Edition. (login with your LSE email)

- Growing trend of data licensing

Now what about this one?

5️⃣ What’s Next?

11:45 – 12:00

While AI-powered scraping tools exist (a quick GitHub search will show you a few open-source ones like ScraperAI and ScrapeGraphAI), we’ll focus on manual web scraping in the next few weeks.

Learning the fundamentals helps us understand how web scraping works under the hood.

💻 W04 Lab

Tomorrow you will:

📝 W04-W05 Formative Exercise

Practice writing and iterating on code to improve your programming skills.

Reward: to gain valuable coding experience!

Thank you!

THE END


LSE DS205 (2024/25)