🗓️ Week 07

Machine Learning I

DS101 – Fundamentals of Data Science

27 Feb 2023

⏪ Recap

- A model is a mathematical representation of a real-world process, a simplified version of reality.

- We saw examples of a linear regression model.

- It relies on several assumptions that must hold for the model to be valid.

- Social scientists have been using linear regression models for decades.

⏪ Recap: Linear Regression

We also saw how linear regression models are normally represented mathematically:

The generic supervised model:

\[ Y = \operatorname{f}(X) + \epsilon \]

is defined more explicitly as follows ➡️

Simple linear regression

\[ Y = \beta_0 + \beta_1 X + \epsilon, \]

when we use a single predictor, \(X\).

Multiple linear regression

\[ \begin{align} Y = \beta_0 &+ \beta_1 X_1 + \beta_2 X_2 \\ &+ \dots \\ &+ \beta_p X_p + \epsilon \end{align} \]

when there are \(p\) predictors, \(X_1, X_2, \dots, X_p\).
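To show where \(\beta_0\) and \(\beta_1\) come from in practice, here is a minimal sketch of fitting the simple linear model by ordinary least squares, which is the usual (but not the only) way to estimate the coefficients. The function names and the toy data are hypothetical, chosen only for illustration.

```python
def fit_simple_linear_regression(x, y):
    """Return (beta_0, beta_1) that minimise the sum of squared residuals."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # beta_1 = sum((x - x_mean) * (y - y_mean)) / sum((x - x_mean)^2)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    beta_1 = sxy / sxx
    # The least-squares line always passes through the point (x_mean, y_mean)
    beta_0 = y_mean - beta_1 * x_mean
    return beta_0, beta_1


def predict(beta_0, beta_1, x_new):
    """Plug a new value of X into the fitted line."""
    return beta_0 + beta_1 * x_new


# Hypothetical toy data: y is roughly 2 + 3x plus some noise
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [5.1, 7.9, 11.2, 13.8, 17.0]
beta_0, beta_1 = fit_simple_linear_regression(x, y)
print(f"beta_0 = {beta_0:.2f}, beta_1 = {beta_1:.2f}")
print(f"prediction at x = 6: {predict(beta_0, beta_1, 6.0):.2f}")
```

The error term \(\epsilon\) does not appear in the code: it is whatever the fitted line fails to explain, i.e. the residuals \(y - \hat{y}\).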

Note

- Because linear regression is a well-studied statistical technique, we know a lot about the properties of the model.

- Researchers can use this knowledge to assess the validity of the model, using tools such as confidence intervals, hypothesis tests, and many other model diagnostics.

Limitations of Linear Regression Models

The typical linear model assumes that:

- the relationship between the response and the predictors is linear.

- the error terms are independent and identically distributed.

- the error terms have a constant variance.

- the error terms are normally distributed.

Important

Very few real-world processes are truly linear.

Making Predictions

We often want to use a model to make predictions

- either about the future

- or about new observations.

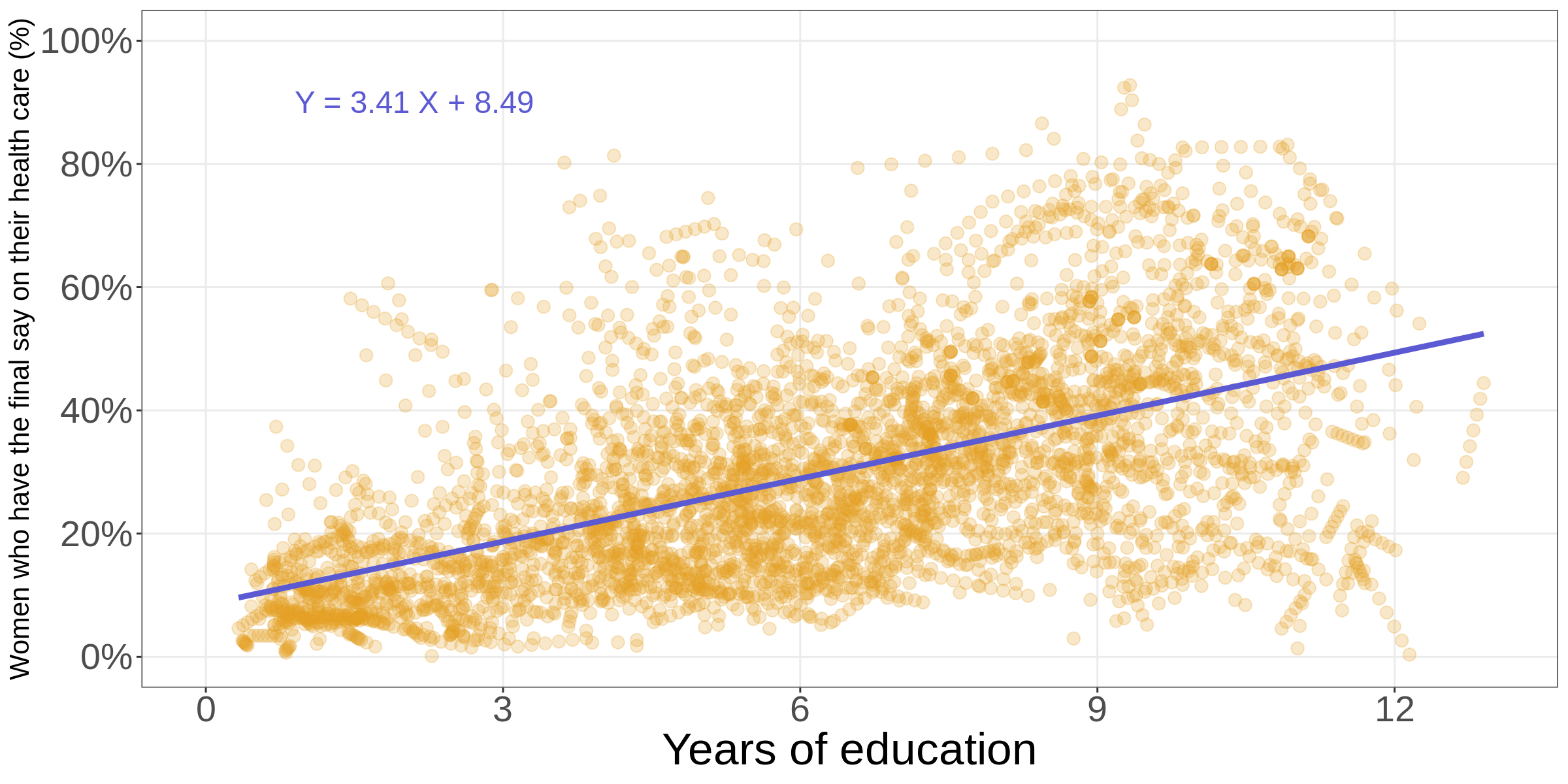

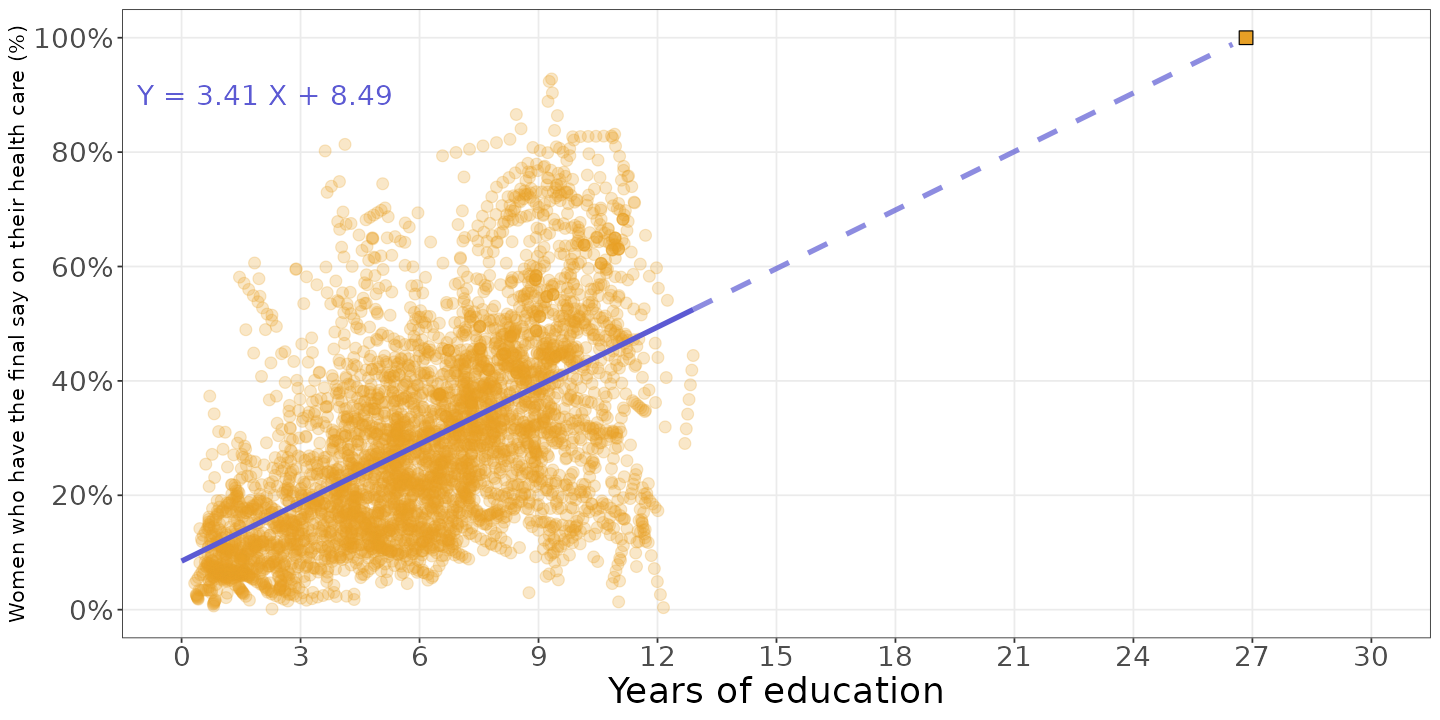

⏪ The simple linear model from W05

Let’s revisit the simple linear model from W05:

What happens when we extrapolate?

Under what conditions would this model predict a decision power of 100%?
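Without plugging in the specific W05 estimates, the question can be answered symbolically. Assuming the fitted model is \(\hat{Y} = \hat{\beta}_0 + \hat{\beta}_1 X\), with \(Y\) measured in percent, set the prediction equal to 100 and solve for \(X\):

\[ \hat{\beta}_0 + \hat{\beta}_1 X = 100 \quad\Longrightarrow\quad X = \frac{100 - \hat{\beta}_0}{\hat{\beta}_1}, \qquad \hat{\beta}_1 \neq 0 \]

If \(\hat{\beta}_1 > 0\), any \(X\) beyond this value yields a prediction above 100%, which is impossible for a percentage. A straight line has no built-in bounds, so extrapolating far from the observed data can produce nonsensical predictions.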

The thing about predictions

- We can use a model to make predictions about the future.

- We can also use a model to make predictions about new observations.

- But we need to be careful about the assumptions that we make about the data.

Note

We will focus a lot more on predictions from now on.

This is because Machine Learning, in practice, is all about making predictions.

What is Machine Learning?

A definition

Machine Learning (ML) is a subfield of Computer Science and Artificial Intelligence (AI) that focuses on the design and development of algorithms that can learn from data.

How it works

- The process is similar to the regular algorithms (think recipes) we saw in W03.

- Only this time, the ingredients are data.

INPUT (data)

⬇️

ALGORITHM

⬇️

OUTPUT (prediction)
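To make the INPUT → ALGORITHM → OUTPUT pipeline concrete, here is a minimal sketch using 1-nearest-neighbour, a simple algorithm not covered in this lecture, chosen only because its "learning" step is easy to read: it memorises the labelled data and predicts the label of the closest stored example. The `train`/`predict` names and the data are hypothetical.

```python
import math


def train(examples):
    """'Learning' for 1-nearest-neighbour: simply memorise the labelled examples."""
    return list(examples)


def predict(model, x_new):
    """Return the label of the stored example closest to x_new (Euclidean distance)."""
    _, nearest_label = min(model, key=lambda example: math.dist(example[0], x_new))
    return nearest_label


# INPUT: hypothetical labelled data, as (features, label) pairs
data = [((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((5.0, 5.0), "B"), ((4.8, 5.3), "B")]

model = train(data)                 # ALGORITHM
print(predict(model, (4.5, 4.9)))   # OUTPUT: a prediction for a new observation
```

Other algorithms replace both steps with something more elaborate, but the shape stays the same: data goes in, a fitted model comes out, and the model turns new inputs into predictions.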

Types of Machine Learning

Supervised Learning

- Each data point is associated with a label.

- The goal is to learn a function that maps the data to the labels.

(🗓️ Week 07)

Unsupervised Learning

- The data points have no labels.

- The focus is on similarities between data points.

(🗓️ Week 08)

Supervised Learning

If we assume there is a way to map between \(X\) and \(Y\), we could use SUPERVISED LEARNING to learn this mapping.

Supervised Learning

- The algorithm learns to identify changes in \(Y\) based on the values of \(X\)

- A dataset of labelled data is required

- Prediction: presented with a new \(X\), the algorithm predicts what the corresponding \(Y\) would be

Supervised Learning

- If \(Y\) is a numerical value, we call it a regression problem.

- If \(Y\) is a category, we call it a classification problem.

Supervised Learning

- There are countless ways to learn this mapping; each algorithm does it in its own way.

- Here are some names of basic algorithms:

  - Linear regression

  - Logistic regression

  - Decision trees

  - Support vector machines

  - Neural networks

One Practical Example

Suppose you want to be alerted when politicians spend their additional allowances unlawfully: travel costs, catering functions, stationery and postage costs, etc…

How would you approach this problem?

Image sources: Flickr | UK Parliament & Receipts Receipt Pay | Pixabay

What is in the receipt?

- Date of the transaction

- Amount of money spent

- Description of the transaction

- Category of the transaction

- Address of the merchant

All of this information constitutes our input.

What about the output?

- The output is something you would normally want to predict or attempt to explain

- Also referred to as the label, “ground truth”, target or dependent variable, or simply \(Y\)

- Most commonly it is a numerical or categorical variable

Structure of dataset

| Receipt ID | Number of items | Average value per item | Total Value | Distance from constituency | … | SUSPICIOUS |
|---|---|---|---|---|---|---|
| #24321234 | 3 | £ 10.47 | £ 78.00 | 200 Miles | … | No |
| #24321235 | 1 | £ 100.00 | £ 100.00 | 100 Miles | … | Yes |
| #24321236 | 2 | £ 50.00 | £ 100.00 | 50 Miles | … | No |
| #98755645 | 5 | £ 20.00 | £ 100.00 | 10 Miles | … | No |
| #24321236 | 2 | £ 50.00 | £ 100.00 | 50 Miles | … | No |
| … | … | … | … | … | … | … |
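In code, each row becomes a numeric feature vector (the input, \(X\)) and the SUSPICIOUS column becomes the label (\(y\)). A minimal sketch, assuming we keep only the four distinct rows and the visible numeric columns (the elided "…" columns are left out) and encode Yes/No as 1/0; the dictionary keys and helper names are hypothetical.

```python
# The four distinct rows from the table; the elided "…" columns are omitted
receipts = [
    {"id": "#24321234", "n_items": 3, "avg_value": "£ 10.47", "total": "£ 78.00", "distance": "200 Miles", "suspicious": "No"},
    {"id": "#24321235", "n_items": 1, "avg_value": "£ 100.00", "total": "£ 100.00", "distance": "100 Miles", "suspicious": "Yes"},
    {"id": "#24321236", "n_items": 2, "avg_value": "£ 50.00", "total": "£ 100.00", "distance": "50 Miles", "suspicious": "No"},
    {"id": "#98755645", "n_items": 5, "avg_value": "£ 20.00", "total": "£ 100.00", "distance": "10 Miles", "suspicious": "No"},
]


def pounds(text):
    """Convert a string like '£ 10.47' into the number 10.47."""
    return float(text.replace("£", "").strip())


def miles(text):
    """Convert a string like '200 Miles' into the number 200.0."""
    return float(text.split()[0])


# INPUT: one feature vector per receipt (the receipt ID is an identifier, not a feature)
X = [[r["n_items"], pounds(r["avg_value"]), pounds(r["total"]), miles(r["distance"])] for r in receipts]

# OUTPUT / label: 1 if the receipt was marked suspicious, 0 otherwise
y = [1 if r["suspicious"] == "Yes" else 0 for r in receipts]

for features, label in zip(X, y):
    print(features, label)
```

Any of the supervised algorithms listed earlier can then be trained on `X` and `y`.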

Side story

There was an open-source, crowdfunded project that did exactly this, using data from the Brazilian Congress.

Source: https://serenata.ai/en/

Time for coffee ☕

After the break:

- Examples of supervised learning algorithms

- Self-supervised learning

Algorithms

The Logistic Regression model

Consider a binary response:

\[ Y = \begin{cases} 0 \\ 1 \end{cases} \]

We model the probability that \(Y = 1\) using the logistic function (a.k.a. the sigmoid curve):

\[ Pr(Y = 1|X) = p(X) = \frac{e^{\beta_0 + \beta_1X}}{1 + e^{\beta_0 + \beta_1 X}} \]

Source of illustration: TIBCO
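Rearranging the formula shows that the log-odds, \(\log\frac{p(X)}{1 - p(X)}\), equals \(\beta_0 + \beta_1 X\): linear in \(X\), even though \(p(X)\) itself follows an S-shaped curve. A minimal sketch that evaluates \(p(X)\) and checks this property; the coefficient values are hypothetical, not estimated from any data.

```python
import math


def logistic_probability(x, beta_0, beta_1):
    """p(X) = e^(b0 + b1*X) / (1 + e^(b0 + b1*X)): the probability that Y = 1."""
    z = beta_0 + beta_1 * x
    return math.exp(z) / (1 + math.exp(z))


def log_odds(p):
    """log(p / (1 - p)); for the logistic model this recovers b0 + b1*X."""
    return math.log(p / (1 - p))


# Hypothetical coefficients, for illustration only
beta_0, beta_1 = -4.0, 0.8

for x in [0, 5, 10]:
    p = logistic_probability(x, beta_0, beta_1)
    print(f"X = {x:2d}  p(X) = {p:.3f}  log-odds = {log_odds(p):.3f}")
```

Whatever the value of \(X\), \(p(X)\) stays strictly between 0 and 1, so unlike a straight line it can never predict a probability above 100%.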

The Decision Tree model
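A decision tree predicts by asking a sequence of yes/no questions about the features. A minimal sketch of its simplest form, a tree with a single split (often called a "decision stump"), which tries every candidate threshold on one numeric feature and keeps the one that misclassifies the fewest training points. For simplicity it assumes values above the threshold belong to class 1; the data and function names are hypothetical.

```python
def fit_stump(xs, ys):
    """Return the threshold on one numeric feature that misclassifies the fewest points.

    The stump predicts 1 when x > threshold and 0 otherwise (a depth-1 decision tree).
    """
    best_threshold, best_errors = None, len(ys) + 1
    for threshold in sorted(set(xs)):
        # Count how many training points this candidate split gets wrong
        errors = sum((1 if x > threshold else 0) != y for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict_stump(threshold, x):
    return 1 if x > threshold else 0


# Hypothetical data: receipt totals (£) and whether each was flagged (1) or not (0)
totals = [12.0, 30.0, 45.0, 80.0, 150.0, 400.0]
flagged = [0, 0, 0, 0, 1, 1]

t = fit_stump(totals, flagged)
print("split: total >", t)
print("prediction for a £200 receipt:", predict_stump(t, 200.0))
```

A full decision tree repeats this search recursively on each side of the split and over all features, which produces the familiar branching structure.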

The Support Vector Machine model

Say you have a dataset with two classes of points:

The Support Vector Machine model

The goal is to find the line that separates the two classes of points with the widest possible margin:

The Support Vector Machine model

It can get more complicated than just a line:
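The straight-line case can be sketched in a few lines. This is a minimal sketch, not a production solver: it fits a linear SVM by sub-gradient descent on the regularised hinge loss, one common way to train it. The curved boundaries mentioned above usually come from kernels, which this sketch leaves out. The data, learning rate, and other parameter values are hypothetical.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=1000):
    """Fit a linear SVM by sub-gradient descent on the regularised hinge loss.

    points: list of (x1, x2) pairs; labels: +1 or -1.
    Returns (w, b) describing the separating line w[0]*x1 + w[1]*x2 + b = 0.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            # The regulariser lam * ||w||^2 / 2 always pulls w towards zero;
            # a smaller ||w|| means a wider margin (its width is 2 / ||w||).
            grad_w = [lam * w[0], lam * w[1]]
            grad_b = 0.0
            if margin < 1:
                # Point is inside the margin or misclassified: the hinge loss is active
                grad_w[0] -= y * x1
                grad_w[1] -= y * x2
                grad_b -= y
            w[0] -= lr * grad_w[0]
            w[1] -= lr * grad_w[1]
            b -= lr * grad_b
    return w, b


def classify(w, b, point):
    """Which side of the line is the point on?"""
    x1, x2 = point
    return 1 if w[0] * x1 + w[1] * x2 + b >= 0 else -1


# Hypothetical, linearly separable toy data: two clusters of points
points = [(3, 3), (0, 0), (4, 3), (1, 0), (3, 4), (0, 1)]
labels = [1, -1, 1, -1, 1, -1]

w, b = train_linear_svm(points, labels)
print("w =", [round(v, 3) for v in w], "b =", round(b, 3))
print([classify(w, b, p) for p in points])
```

Only the points that end up on or inside the margin (the "support vectors") keep influencing the line; points far from the boundary stop contributing to the hinge-loss term.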

Neural Networks

Image from Stack Exchange | TeX
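Each neuron computes a weighted sum of its inputs plus a bias and passes it through a non-linear function; layers of neurons feed into one another. A minimal sketch of a forward pass through a tiny network with two inputs, two hidden units (using the ReLU activation) and one output. The weights are hand-picked for illustration rather than learned; in practice they are learned from data, typically by gradient descent with backpropagation. With these weights the network computes XOR, a pattern no single straight line can separate.

```python
def relu(z):
    """ReLU activation: keep positive values, replace negative ones with zero."""
    return max(0.0, z)


def tiny_network(x1, x2):
    """Forward pass of a 2-input, 2-hidden-unit, 1-output network (hand-picked weights)."""
    # Hidden layer: weighted sum of the inputs plus a bias, then the ReLU activation
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: weighted sum of the hidden units
    return 1.0 * h1 - 2.0 * h2


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", tiny_network(x1, x2))
```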

Deep Learning

- Stack a lot of layers on top of each other, and you get a deep neural network.

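- Forget about interpretability! With so many layers and parameters, it becomes very hard to explain why the network makes a given prediction.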

Attention

- The Transformer architecture is a deep learning model that uses attention to learn contextual relationships between words in a text.

- It has revolutionized the field of Natural Language Processing (NLP).
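Transformers compute attention with the scaled dot-product formula \(\operatorname{softmax}(QK^\top / \sqrt{d})\,V\): each word's query is compared with every word's key, the scores become weights that sum to 1, and the output is the corresponding weighted average of the values. A minimal sketch with plain lists, assuming made-up 2-dimensional word vectors and skipping the learned projection matrices a real Transformer uses to produce \(Q\), \(K\) and \(V\).

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, with plain lists."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much this word attends to each word
        # Weighted average of the value vectors
        out = [sum(wt * v[j] for wt, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Hypothetical 2-dimensional vectors for three words
words = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: the same vectors act as queries, keys and values
for word, out in zip(words, attention(vectors, vectors, vectors)):
    print(word, [round(x, 3) for x in out])
```

Because every output mixes information from all the words, each word's new representation depends on its context.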

What’s next?

- 🗓️ Week 08: Unsupervised learning

- 🗓️ Week 09: More on unstructured data

  - You will also explore how to measure the performance of your models

- 🗓️ Week 10: We’ll think more deeply about what it means to predict something

  - We’ll also talk about fairness and bias in machine learning

References

LSE DS101 2022/23 Lent Term (archive)